The observable radial expansion around us involves matter with observable outward speeds ranging from zero up toward the velocity of light, c, over spacetime running from 15 billion years toward zero (i.e., time past running from zero toward 15 billion years). This gives an outward force in observable spacetime of

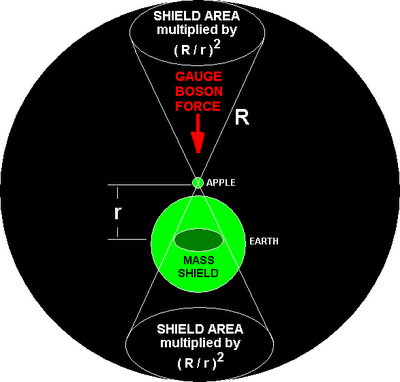

$$F = ma = m\frac{dv}{dt} = \frac{m(c - 0)}{\text{age of universe}} = \frac{mc}{t} \approx mcH \approx 7 \times 10^{43}\ \text{newtons}.$$

Newton's third law tells us there is an equal inward force which, according to the possibilities implied by the Standard Model, is carried by exchange radiation (gauge bosons). This predicts the gravitational constant G to within 1.65%:

$$F = \frac{3}{4}\,\frac{mMH^2}{\pi \rho r^2 e^3} \approx 6.7 \times 10^{-11}\,\frac{mM}{r^2}\ \text{newtons},$$

correct to within 2% for consistent, interdependent values of the Hubble constant H and the density ρ. A fundamental particle of mass M has a cross-sectional space-pressure shielding area of $\pi(2GM/c^2)^2$. For two separate, rigorous and fully accurate treatments, see Proof.
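A minimal Python sketch of the arithmetic in the two formulas above. The inputs are assumptions, not values from the text: H = 70 km/s/Mpc, an illustrative mass of 1e53 kg for the observable universe, and a density ρ = 9.2e-28 kg/m^3 that was picked so the output lands near the measured G. Because ρ is tuned rather than measured, the G line only checks the arithmetic of the formula, not the physical claim.

```python
import math

C = 2.998e8                # speed of light, m/s
MPC_IN_M = 3.0857e22       # one megaparsec, m

# Assumed inputs (illustrative, not taken from the text).
H = 70e3 / MPC_IN_M        # Hubble parameter, 1/s (about 2.27e-18)
M_UNIVERSE = 1e53          # mass of the observable universe, kg
RHO = 9.2e-28              # density, kg/m^3, tuned so G comes out near 6.7e-11


def outward_force(mass_kg: float, hubble_per_s: float) -> float:
    """F = m * a, with a = c/t approximated as c*H (taking t ~ 1/H)."""
    return mass_kg * C * hubble_per_s


def predicted_g(hubble_per_s: float, density_kg_m3: float) -> float:
    """Coefficient of mM/r^2 in the formula above: G = (3/4) H^2 / (pi * rho * e^3)."""
    return 0.75 * hubble_per_s ** 2 / (math.pi * density_kg_m3 * math.e ** 3)


print(f"a = cH  = {C * H:.2e} m/s^2")
print(f"F = mcH = {outward_force(M_UNIVERSE, H):.1e} N")
print(f"G       = {predicted_g(H, RHO):.2e} m^3 kg^-1 s^-2")
```

With these inputs the script gives F of roughly 7e43 N and G of roughly 6.65e-11; halving ρ doubles the predicted G, which is why the text stresses consistent values of H and density.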

A simplified version of the gravity treatment so that everyone can understand some of the elementary physics points:

Gravity is not due to a surface compression but instead is mediated through the void between fundamental particles in atoms by exchange radiation which does not recognise macroscopic surfaces, but only interacts with the subnuclear particles associated with the elementary units of mass. The radial contraction of the earth's radius by gravity, as predicted by general relativity, is 1.5 mm. [This contraction of distance hasn't been measured directly, but the corresponding contraction or rather 'dilation' of time has been accurately measured by atomic clocks which have been carried to various altitudes (where gravity is weaker) in aircraft. Spacetime tells us that where distance is contracted, so is time.]

This contraction is not caused by a material pressure carried through the atoms of the earth, but is instead due to the gravity-causing Yang-Mills exchange radiation which travels through the void (nearly 100% of atomic volume is void). Hence the contraction is independent of the chemical nature of the earth. (Similarly, the contraction of moving bodies is caused by the same exchange radiation effect, and so is independent of the material's composition.)
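For scale, the 1.5 mm figure quoted above is the standard general-relativity result for the excess radius of a uniform sphere, GM/(3c^2) (Feynman Lectures on Physics, Vol. II, ch. 42). A short Python check, treating the Earth as uniform and using the standard value of GM for the Earth; the names are illustrative:

```python
GM_EARTH = 3.986004e14     # Earth's gravitational parameter G*M, m^3/s^2
C = 2.998e8                # speed of light, m/s


def excess_radius(gm: float) -> float:
    """GR excess radius of a uniform-density sphere, GM / (3 c^2), in metres."""
    return gm / (3 * C ** 2)


print(f"Earth: {excess_radius(GM_EARTH) * 1e3:.2f} mm")
```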

Proof (by two different calculations compared side by side). I've received nothing but ignorant pseudo-personal abuse from string theorists. An early version hosted at CERN can't be updated via arXiv, apparently because the relevant section of arXiv is controlled by mainstream string theorists.

Newton never expressed a gravity formula with the constant G because he didn't know what the constant was (that was measured by Cavendish much later). Newton did, however, have empirical evidence for the inverse-square law. He knew that the earth has a radius of 4000 miles and that the moon is a quarter of a million miles away; hence, by the inverse-square law, gravity at the moon should be weaker than the 32 ft/s/s at the earth's surface by a factor of $(250{,}000/4000)^2 \approx 3900$. The gravitational acceleration due to the earth's mass at the moon is therefore $32/3900 \approx 0.008$ ft/s/s.

Newton's formula for the centripetal acceleration of the moon is $a = v^2/r$, where $r$ is the distance to the moon and $v$ is the moon's orbital velocity, $v = 2\pi \times (250{,}000 \text{ miles})/(27 \text{ days}) \approx 0.67$ mile/second; hence $a \approx 0.0096$ ft/s/s.
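Both halves of Newton's moon test can be reproduced in a few lines of Python, using only the round figures quoted above (4000 miles, 250,000 miles, 32 ft/s/s, 27 days); the variable names are illustrative:

```python
import math

# Newton's round figures as quoted in the text.
EARTH_RADIUS_MI = 4000
MOON_DISTANCE_MI = 250_000
SURFACE_G_FT_S2 = 32
PERIOD_S = 27 * 24 * 3600          # 27 days in seconds
FT_PER_MILE = 5280

# Inverse-square dilution of surface gravity out to the Moon's distance.
dilution = (MOON_DISTANCE_MI / EARTH_RADIUS_MI) ** 2
g_at_moon = SURFACE_G_FT_S2 / dilution

# Circular-orbit centripetal acceleration: v = 2*pi*r / T, a = v^2 / r.
v_mi_s = 2 * math.pi * MOON_DISTANCE_MI / PERIOD_S
a_centripetal = v_mi_s ** 2 / MOON_DISTANCE_MI * FT_PER_MILE

print(f"dilution factor:   {dilution:.0f}")
print(f"gravity at Moon:   {g_at_moon:.4f} ft/s^2")
print(f"orbital speed:     {v_mi_s:.2f} mile/s")
print(f"centripetal accel: {a_centripetal:.4f} ft/s^2")
```

With these rounded inputs the two accelerations come out near 0.0082 and 0.0096 ft/s^2. Most of the gap comes from 250,000/4000 = 62.5, whereas the Moon actually orbits at about 60 Earth radii.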

So Newton had evidence that the gravity from the earth at the moon's distance is approximately the same (0.008 ft/s/s ~ 0.0096 ft/s/s) as the centripetal acceleration of the moon. The gravity law we have proved from experimental facts is a complete mechanism that predicts gravity and the contraction of general relativity.

Illustration above: the exchange force (gauge boson) radiation cancels out in symmetrical situations outside the cone area (although there is a compression equal to the contraction predicted by general relativity), since the sideways force is the same in each direction and so cancels out unless there is an intervening shielding mass, like the Earth below you. Shielding is caused simply by the fact that nearby matter is not significantly receding, whereas distant matter is significantly receding (and hence fires a recoil towards you in a net gauge boson exchange force, unlike a nearby mass). Gravity is the net force introduced where a mass shadows you, namely in the double-cone areas shown above. In all other directions the symmetry cancels out and produces no net force. Hence gravity can be quantitatively predicted using only well-established facts of quantum field theory, recession, etc. The prediction compares well with reality but is banned by string theorists like Lubos Motl, who say that everyone who points out errors in speculative string theory, and everybody who proves the correct explanation, should be hated.

REDSHIFT DUE TO RECESSION OF DISTANT STARS

From: "Nigel Cook" <nigelbryancook@hotmail.com>

To: "David Tombe" <sirius184@hotmail.com>; <epola@tiscali.co.uk>; <jvospost2@yahoo.com>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Wednesday, August 02, 2006 2:29 PM

Subject: Re: Bosons -The Defining Question

Because time past that light was emitted = distance of source of light / c.
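The reply compresses one step: with Hubble's law v = Hx and the light travel time t = x/c, the ratio v/t is Hx/(x/c) = Hc, the same for every distance x. A minimal Python sketch, assuming H = 70 km/s/Mpc (a value not given in the thread):

```python
C = 2.998e8                # speed of light, m/s
MPC_IN_M = 3.0857e22       # one megaparsec, m
H = 70e3 / MPC_IN_M        # assumed Hubble parameter, 1/s


def apparent_acceleration(distance_m: float) -> float:
    """v / t for a source at distance x, with v = H*x (Hubble law) and t = x/c (light travel time)."""
    v = H * distance_m
    t = distance_m / C
    return v / t


for mpc in (10, 100, 1000):
    a = apparent_acceleration(mpc * MPC_IN_M)
    print(f"{mpc:>5} Mpc: v/t = {a:.3e} m/s^2 (H*c = {H * C:.3e})")
```

The distance cancels, which is how c enters the expression F = ma = mv/t.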

----- Original Message -----

From: "David Tombe" <sirius184@hotmail.com>

To: <nigelbryancook@hotmail.com>; <epola@tiscali.co.uk>; <jvospost2@yahoo.com>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Tuesday, August 01, 2006 4:09 PM

Subject: Re: Bosons -The Defining Question

> Dear Nigel,

> I copied this line from your explanation below.

> "F = ma = mv/t = mv/(x/c) = mcv/x = 0"

> How did you manage to slip the speed of light into Newton's second law of motion?

> Yours sincerely

> David Tombe

From: "David Tombe" <sirius184@hotmail.com>

To: <jvospost2@yahoo.com>; <nigelbryancook@hotmail.com>; <epola@tiscali.co.uk>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Tuesday, August 01, 2006 7:51 PM

Subject: RE: Simple Survey Yields Cosmic Conundrum

Dear Jonathan,

I've always been extremely cautious about believing modern theories in cosmology. There are so many unknown variables and factors out there in that great expanse that it is far too presumptuous to say anything with any degree of certainty.

Hence I would never base a physics theory on cosmological evidence. I work from the ground up.

Yours sincerely

David Tombe

Comments by Nigel:

That gauge boson radiation gives rise to smooth deflection of light by gravity (rather than the diffuse images of deflected light that would result from particle-particle scattering, such as photon-graviton scattering) indicates that the ultimate force-field mechanism is a continuum, not particles. This continuum can, however, be composed of particles, much as water waves are composed of particulate water molecules. There is no contradiction, since large numbers of particles can cooperate to allow waves to propagate (although the aether mechanism Maxwell proposed for light is wrong in important details). The recession of matter in the universe around us is an established fact: there is no experimentally known mechanism other than recession that gives uniformly redshifted spectra, and the recession redshift of spectra is a fact. This recession implies a variation in velocity in proportion to time past (which is equivalent to distance in spacetime), i.e., an acceleration. This acceleration away from us tells us that the outward force of the big bang is F = ma ~ 10^43 Newtons. By Newton's third law there is an equal inward force, 10^43 Newtons, pressing inward. The actual inward pressure (force/area) is immense and falls off by the inverse-square law, because the area of a sphere is 4 Pi times the square of its radius. This inward pressure gives gravity because mass shields you. Each electron or quark has a shielding area of A = Pi(2GM/c^2)^2 square metres, where M is the mass of the particle and the part inside the brackets is the event horizon radius of a black hole of that mass.
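A minimal Python sketch of the two quantities in the paragraph above: the shielding area A = Pi(2GM/c^2)^2 for an electron, and the inverse-square fall-off of a force spread over a sphere. The 1e43 N force is the text's order of magnitude; the radii of 1 m and 2 m are arbitrary choices used only to show the factor of four.

```python
import math

G = 6.674e-11              # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8                # speed of light, m/s
M_ELECTRON = 9.109e-31     # electron mass, kg


def horizon_radius(mass_kg: float) -> float:
    """Event-horizon (Schwarzschild) radius 2GM/c^2, in metres."""
    return 2 * G * mass_kg / C ** 2


def shielding_area(mass_kg: float) -> float:
    """Cross-section A = pi * (2GM/c^2)^2 as stated in the text, in m^2."""
    return math.pi * horizon_radius(mass_kg) ** 2


def inward_pressure(force_n: float, radius_m: float) -> float:
    """A force spread over a sphere of area 4*pi*r^2, giving an inverse-square pressure."""
    return force_n / (4 * math.pi * radius_m ** 2)


print(f"electron horizon radius: {horizon_radius(M_ELECTRON):.2e} m")
print(f"electron shielding area: {shielding_area(M_ELECTRON):.2e} m^2")
print(f"pressure ratio r vs 2r:  {inward_pressure(1e43, 1.0) / inward_pressure(1e43, 2.0):.1f}")
```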

This is (1) validated by Catt's experimental finding that trapped light speed energy current = static electric charge, and (2) it is proved by a separate calculation which does not require shielding area. The second calculation (shown side-by-side with the first on my home page) is purely hydrodynamic, i.e., another approach treating the exchange radiation as a perfect fluid and yielding the same physical calculation for this model:

Walk down a corridor at velocity v. Your volume is x litres, where x = (your mass, kg)/(your density, ~1 kg/litre, i.e. ~1000 kg/m^3) ~ 70 litres. When you get to the far end of the corridor, you notice that a volume of 70 litres of air has been moving at velocity -v (i.e. in the opposite direction to your walking), filling in the volume you have been displacing. You don't find that air pressure has increased where you are; you find the air has been flowing around you and filling in the "void" you would otherwise create in your wake. The same effect can be used to predict gravity. The outward, spherically symmetric, radial recession of the mass of the universe, with speeds increasing with observable distance/time past, creates an inward "in-fill". Because of the acceleration in spacetime of a = c/t = Hc, where H is the Hubble parameter in reciprocal seconds, the net inward push is not like the air flowing back while you walk at constant speed, but an acceleration. If you run down the corridor with acceleration a, the air will tend to accelerate at -a (the other way). The spacetime fabric known in general relativity is a perfect, frictionless fluid, so this works: http://feynman137.tripod.com/ (introduction to physical theory near top of page). Mathematical proof: http://feynman137.tripod.com/#h

Where partially shielded by mass, the inward pressure causes gravity. Apples are pushed downwards towards the earth, a shield: '... the source of the gravitational field [gauge boson radiation] can be taken to be a perfect fluid.. A fluid is a continuum that 'flows'... A perfect fluid is defined as one in which all antislipping forces are zero, and the only force between neighboring fluid elements is pressure.' - Bernard Schutz, General Relativity, Cambridge University Press, 1986, pp. 89-90.
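The corridor arithmetic can be sketched in Python. The body density of ~1 kg per litre follows the text; the air density of 1.2 kg/m^3 and the walking speed of 1.5 m/s are assumptions added for illustration, and the backflow is modelled simply as an equal volume of air moving at -v, as described above.

```python
AIR_DENSITY_KG_PER_L = 1.2e-3   # assumed sea-level air density (~1.2 kg/m^3)


def displaced_volume_l(mass_kg: float, body_density_kg_per_l: float = 1.0) -> float:
    """Body volume in litres: mass / density (a human body is close to water, ~1 kg/L)."""
    return mass_kg / body_density_kg_per_l


def backflow_momentum(mass_kg: float, walking_speed_m_s: float) -> float:
    """Momentum (kg m/s) of an equal volume of air moving at -v to fill the walker's wake."""
    air_mass_kg = displaced_volume_l(mass_kg) * AIR_DENSITY_KG_PER_L
    return air_mass_kg * -walking_speed_m_s


print(f"volume displaced: {displaced_volume_l(70):.0f} L")
print(f"air backflow momentum at 1.5 m/s: {backflow_momentum(70, 1.5):.3f} kg m/s")
```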

From: "Nigel Cook" <nigelbryancook@hotmail.com>

To: "Guy Grantham" <epola@tiscali.co.uk>; "David Tombe" <sirius184@hotmail.com>; <jvospost2@yahoo.com>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Tuesday, August 01, 2006 9:54 AM

Subject: Re: Bosons -The Defining Question

Dear Guy,

The universe's expansion is powered by the momentum delivered to masses by gauge boson exchange radiation. Think of a normal balloon inflated with air, with some fine dust suspended in the air under pressure. Now imagine you remove the constraining balloon skin. The dust expands outward because air molecules hit the dust particles and the recoil momentum causes expansion. In the case of the universe, the role of the air molecules is played by gauge boson radiation, which is similar to air molecules only insofar as it provides momentum.

The anisotropy in the CBR is dealt with on my home page. There are several causes. The earth's motion causes the largest anisotropy, +/-0.13% in different areas of the sky (in the direction of our absolute motion the 2.726 K temperature is enhanced by 0.003 K; in the opposite direction it is reduced by 0.003 K; at angles in between it varies as the cosine of the angle).
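The cosine law described above can be written out in Python. T0 and DELTA_T are the figures from the text; the implied speed uses the standard first-order Doppler relation ΔT/T0 = v/c, which is an addition here. Note that 0.003/2.726 is about 0.11%, so the ±0.13% figure corresponds to a slightly larger amplitude of about 0.0035 K.

```python
import math

T0 = 2.726          # mean CBR temperature, K (figure from the text)
DELTA_T = 0.003     # dipole amplitude, K (figure from the text)
C_KM_S = 299_792.458


def dipole_temperature(angle_deg: float) -> float:
    """T(theta) = T0 + DELTA_T * cos(theta), theta measured from the direction of motion."""
    return T0 + DELTA_T * math.cos(math.radians(angle_deg))


def implied_speed_km_s() -> float:
    """First-order Doppler relation DELTA_T / T0 = v / c."""
    return C_KM_S * DELTA_T / T0


for angle in (0, 60, 90, 180):
    print(f"{angle:>3} deg: {dipole_temperature(angle):.4f} K")
print(f"fractional amplitude: {DELTA_T / T0:.2%}")
print(f"implied speed: {implied_speed_km_s():.0f} km/s")
```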

The much smaller ripples in the CBR, supposed to indicate the seeding that existed 300,000 years after the BB for the growth of the earliest galaxies (quasars, etc.), are too small to seed galaxies according to simple BB ideas. The gravity mechanism formulae I give explain why the ripples were so weak at 300,000 years yet remain consistent with galaxy formation; see the list at http://feynman137.tripod.com/#d. The gravitational constant G (and the electromagnetic force strength, etc.) increases with time after the BB. Fusion rates in the BB and in star evolution remain the same, because they depend on strong-nuclear-force-induced capture of nucleons, which occurs when gravitational compression exceeds the Coulomb electromagnetic repulsion between charges: increase or decrease BOTH gravity and electromagnetism by the same factor, and the fusion rate is UNALTERED, because the effects of the two inverse-square-law forces on the fusion rate offset one another. Because G increases linearly with time, G at the CBR emission time (300,000 years) was 50,000 times weaker than it is now. This explains why the ripples were correspondingly smaller than expected from constant-G galaxy formation models when data came in from the COBE satellite in 1992. As G increased after 300,000 years, galaxy formation accelerated correspondingly. At 1,000,000,000 years, when powerful early galaxies like quasars were being formed, G was over 3,000 times stronger than at the time of CBR emission, so the discrepancy disappears. All discrepancies in the mainstream BB model disappear under the gravity mechanism.
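The two ratios quoted above follow directly from the text's assumption that G grows linearly with time. A small Python sketch using the text's figures (15 billion years now, 300,000 years at CBR emission, 1 billion years for early quasars):

```python
# Figures from the text; the linear law G proportional to t is the text's assumption.
AGE_NOW_YR = 15e9        # present age of the universe used in the text
T_CBR_YR = 3e5           # CBR emission time
T_QUASARS_YR = 1e9       # epoch of powerful early galaxies


def g_ratio(t1_yr: float, t2_yr: float) -> float:
    """G(t1) / G(t2) if G grows linearly with time since the big bang."""
    return t1_yr / t2_yr


print(f"G(now) / G(CBR)     = {g_ratio(AGE_NOW_YR, T_CBR_YR):,.0f}")
print(f"G(quasars) / G(CBR) = {g_ratio(T_QUASARS_YR, T_CBR_YR):,.0f}")
```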

There is no [recession-caused] redshift of the sun. We are bound to it by gravity; it is 8 light minutes away from us. There is no significant net redshift even for stars 100 light years away! Andromeda, the nearest large galaxy, is BLUESHIFTED, not redshifted (in fact, since it is bigger than the Milky Way, this is mainly because we are moving towards Andromeda, not Andromeda moving towards us; notice how special relativity breaks down when gravity is the major intermediating force in a very old universe, because gravity allows you to determine which object is moving most, namely the less massive one).

You have to look to CLUSTERS OF GALAXIES before you see the Hubble law appear. Nearby objects are not receding significantly, or there is a lot of "noise" in the data due to local gravity effects.

Kind regards,

nigel

----- Original Message -----

From: "Guy Grantham" <epola@tiscali.co.uk>

To: "Nigel Cook" <nigelbryancook@hotmail.com>; "David Tombe" <sirius184@hotmail.com>; <jvospost2@yahoo.com>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Monday, July 31, 2006 11:30 PM

Subject: Re: Bosons -The Defining Question

Dear Nigel

What comment do you have on the redshift anisotropy and general inaccuracy of Hubble redshift? What comment, and why that result, on the measure of redshift from here to the Sun?

Best regards,

Guy.

----- Original Message -----

From: "Nigel Cook" <nigelbryancook@hotmail.com>

To: "David Tombe" <sirius184@hotmail.com>; <epola@tiscali.co.uk>; <jvospost2@yahoo.com>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Monday, July 31, 2006 6:34 PM

Subject: Re: Bosons -The Defining Question

Dear David,

"There could be any number of explanations to explain red shift." - David

... PROOF: http://www.astro.ucla.edu/~wright/tiredlit.htm. There are NO other explanations of redshift that work. They are all ... LIES. The facts are that redshift is proved to occur to light due to motion of the source away from you (recession), and all alternatives are UNPROVED speculation.

Court case:

Barrister David: "There could be any number of explanations why the dagger was found in the defendant's hand immediately after the murder. He could have just noticed the murder and picked up the dagger to see how well it would fit in his hand. Or a wicked fairy may have handed the knife to the defendant to ensnare him. There is no evidence that the defendant is guilty just because he was caught red-handed."

Kind regards,

nigel

From: "Nigel Cook" <nigelbryancook@hotmail.com>

To: "Guy Grantham" <epola@tiscali.co.uk>; "David Tombe" <sirius184@hotmail.com>; <jvospost2@yahoo.com>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Wednesday, August 02, 2006 2:40 PM

Subject: Re: Bosons -The Defining Question

Guy, gravitational redshift can be calculated and does not work [to explain Hubble law]. Red filtering or scattering doesn't work because different frequencies of light would be redshifted by different amounts. Redshift as observed precisely involves the spectral lines all being shifted by the same factor, regardless of the frequency of the spectral line.
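To illustrate the point about spectral lines, this Python sketch compares a recession redshift, which multiplies every wavelength by the same factor 1 + z, with a hypothetical toy mechanism in which every photon loses the same absolute frequency. The toy mechanism is an assumption made purely for contrast, not a model proposed in the thread; the Balmer rest wavelengths are standard air values.

```python
C_NM_PER_S = 2.998e17      # speed of light, nm/s

# Rest wavelengths of three hydrogen Balmer lines, nm (air values).
REST_NM = {"H-alpha": 656.28, "H-beta": 486.13, "H-gamma": 434.05}


def recession_shift(rest, z):
    """Recession (Doppler) redshift: every wavelength is multiplied by the same factor 1 + z."""
    return {name: lam * (1 + z) for name, lam in rest.items()}


def constant_loss_shift(rest, delta_nu_hz):
    """Hypothetical toy mechanism: every photon loses the same frequency delta_nu."""
    return {name: C_NM_PER_S / (C_NM_PER_S / lam - delta_nu_hz) for name, lam in rest.items()}


def inferred_z(rest, observed):
    """z = lambda_observed / lambda_rest - 1 for each line."""
    return {name: observed[name] / rest[name] - 1 for name in rest}


print("recession:    ", inferred_z(REST_NM, recession_shift(REST_NM, 0.1)))
print("constant loss:", inferred_z(REST_NM, constant_loss_shift(REST_NM, 1e14)))
```

Under recession every line gives the same z; under the toy mechanism the red line is shifted by a larger fraction than the blue ones, which is not what is observed.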

Put another way, the best black-body Planck radiation spectrum ever observed is not a laboratory measurement but the cosmic background spectrum, first accurately plotted by the COBE satellite in 1992. That spectrum has an effective temperature of 2.72 K and was emitted when the universe was 300,000 years old and at a temperature of 3,500 K. The redshift has uniformly shifted all frequencies by a factor of over 1000, which is the most severe redshift we can ever hope to observe. If redshift were due to any cause other than recession (which is proved to cause redshift exactly as observed: you can detect the redshift of light emitted from a moving object), you would get scattering effects in which different parts of the spectrum are redshifted by different amounts; see the Rayleigh scattering formula, etc. See also http://www.astro.ucla.edu/~wright/tiredlit.htm for a disproof of more complex speculations.
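A uniform stretch of every frequency by 1 + z maps a Planck spectrum exactly onto another Planck spectrum at temperature T/(1 + z), because I_ν/ν³ is conserved. The Python sketch below checks this numerically with the text's figures of 3,500 K at emission and 2.72 K today; the three sample frequencies are arbitrary choices in the microwave band. A mechanism that shifted different frequencies by different fractions would not give a ratio of 1 at every frequency.

```python
import math

H_PLANCK = 6.62607015e-34   # Planck constant, J s
K_B = 1.380649e-23          # Boltzmann constant, J/K
C = 2.99792458e8            # speed of light, m/s

T_EMIT = 3500.0             # emission temperature quoted in the text, K
T_OBS = 2.72                # present temperature quoted in the text, K
Z = T_EMIT / T_OBS - 1      # uniform stretch factor minus one (1 + z is about 1287)


def planck(nu_hz: float, temp_k: float) -> float:
    """Blackbody specific intensity B_nu(T), in W m^-2 Hz^-1 sr^-1."""
    x = H_PLANCK * nu_hz / (K_B * temp_k)
    return 2 * H_PLANCK * nu_hz ** 3 / C ** 2 / math.expm1(x)


def redshifted(nu_obs_hz: float, temp_emit_k: float, z: float) -> float:
    """Intensity after every frequency is divided by 1 + z.

    I_nu / nu^3 is conserved, so I_obs(nu) = B(nu * (1 + z), T_emit) / (1 + z)^3.
    """
    return planck(nu_obs_hz * (1 + z), temp_emit_k) / (1 + z) ** 3


for nu in (50e9, 160e9, 400e9):   # sample microwave frequencies, Hz
    ratio = redshifted(nu, T_EMIT, Z) / planck(nu, T_OBS)
    print(f"{nu / 1e9:>5.0f} GHz: redshifted / B(T_obs) = {ratio:.6f}")
```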

nigel

----- Original Message -----

From: "Guy Grantham" <epola@tiscali.co.uk>

To: "Nigel Cook" <nigelbryancook@hotmail.com>; "David Tombe" <sirius184@hotmail.com>; <jvospost2@yahoo.com>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Tuesday, August 01, 2006 1:01 PM

Subject: Re: Bosons -The Defining Question

Dear Nigel

That's OK then. I was rising to your (adamant) comment "There are NO other explanations of redshift that work." You have put the gravitational/Einstein redshifts (e.g. due to the Sun) into another box, and you acknowledge that there are net blueshifts on the smaller scale, while restricting the Hubble redshift to the astronomical very-grand scale. Do you also acknowledge the non-linear absorptions in space of energy from em radiation causing redshifts in frequency? Best regards, Guy

From: "Nigel Cook" <nigelbryancook@hotmail.com>

To: "jonathan post" <jvospost2@yahoo.com>; "David Tombe" <sirius184@hotmail.com>; <epola@tiscali.co.uk>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Wednesday, August 02, 2006 2:46 PM

Subject: Re: "Cosmologists work from the ground up" and "It's like this, you see..."

Seeing that the most accurate black body radiation ever observed in history is the cosmic background radiation, measured by the COBE satellite in 1992, David should beware that if he doesn't take the most accurate knowledge we have as his foundation, he has problems:

Ancestor of David in 1500: "Copernicus [builds] his theory on astronomy which is suspect. I build from the ground up."

Ancestor of David in 1687: "Newton [builds] his theory on astronomy which is suspect. I build from the ground up."

I don't think it is helpful to throw out the whole of the data from modern physics or from astronomy just because the current theories being used by the mainstream (Lambda-CDM and string theory) look as if they are dark energy, dark matter metaphysics which have been tied together with very short bits of string.

Cut the strings out, forget the speculative ad hoc dark fixes, and work on the empirical FACTS, and you find they can make useful predictions.

Kind regards,

nigel

----- Original Message -----

From: "jonathan post" <jvospost2@yahoo.com>

To: "David Tombe" <sirius184@hotmail.com>; <nigelbryancook@hotmail.com>; <epola@tiscali.co.uk>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Tuesday, August 01, 2006 9:45 PM

Subject: "Cosmologists work from the ground up" and "It's like this, you see..."

Dear David,

"I work from the ground up" sounds like the punchline of a joke about Cosmologists. Maybe of cosmologists who drive cars with bumper stickers reading "astronomers do it in the dark."

=================================

It's like this, you see

The ability to think metaphorically isn't reserved for poets. Scientists do it, too, using everyday analogies to expand their understanding of the physical world and share their knowledge with peers

Jul. 30, 2006. 01:00 AM

SIOBHAN ROBERTS SPECIAL TO THE [TORONTO] STAR:

The poet Jan Zwicky once wrote, "Those who think metaphorically are enabled to think truly because the shape of their thinking echoes the shape of the world." Zwicky, whose day job includes teaching philosophy at the University of Victoria in British Columbia and authoring books of lyric philosophy such as Metaphor & Wisdom, from which the above quotation was taken, has lately directed considerable attention to contemplating the intersection of "Mathematical Analogy and Metaphorical Insight," giving numerous talks on the subject, including one scheduled at the European Graduate School in Switzerland next week. Casual inquiry reveals that metaphor, and its more common cousin analogy, are tools that are just as important to scientists investigating truths of the physical world as they are to poets explaining existential conundrums through verse. A scientist, one might liken, is an empirical poet; and reciprocally, a poet is a scientist of more imaginative and creative hypotheses. Both are seeking "the truth of the matter," says Zwicky. "As a species we are attempting to articulate how our lives go and what our environment is like, and mathematics is one part of that and poetry is another." Analogies, whether in science or poetry, she says, are not arbitrary and meaningless, not merely "airy nothings, loose types of things, fond and idle names." To bolster her thesis, Zwicky cites Austrian ethologist and evolutionary epistemologist Konrad Lorenz: "(Lorenz) has argued that, ok, yeah, we are subject to evolutionary pressure, selection of the fittest, but that means what we perceive about the truth of the world has to be pretty damn close to what the truth of the world actually is, or the world would have eliminated us. There are selection pressures on our epistemological choices." Analogy appearing in scientific methodology, then, is no accident. It is fundamental to the way scientists think and the way they whittle their thinking down to truth.
Zwicky, not being a mathematician (though she teaches elementary mathematical proofs in her philosophy courses), relies on historical testimony from mathematicians such as Henri Poincaré and Johannes Kepler. "I love analogies most of all, my most reliable masters who know in particular all secrets of nature," Kepler wrote in 1604. "We have to look at them especially in geometry, when, though by means of very absurd designations, they unify infinitely many cases in the middle between two extremes, and place the total essence of a thing splendidly before the eyes." ... According to Kochen, the modern mathematical method is that of axiomatics - rooted abstraction and analogy. Indeed, mathematics has been called "the science of analogy."

"Mathematics is often called abstract," Kochen says. "People usually mean that it's not concrete, it's about abstract objects. But it is abstract in another related way. The whole mathematical method is to abstract from particular situations that might be analogous or similar (to another situation). That is the method of analog." This method originated with the Greeks, with the axiomatic method applied in geometry. It entailed abstracting from situations in the real world, such as> farming, and deriving mathematical principles that were put to use elsewhere. Eratosthenes used geometry to measure the circumference of the Earth in 276 BC, and with impressive accuracy. In the lexicon of cognitive science, this process of transferring knowledge from a known to unknown is called "mapping" from the "source" to the "target." Keith Holyoak, a professor of cognitive psychology at UCLA, has dedicated much of his work to parsing this process. He discussed it in a recent essay, "Analogy," published last year in The Cambridge Handbook of Thinking and Reasoning. "The source," Holyoak says, providing a synopsis, "is what you know already - familiar and well understood. The target is the new thing, the problem you're working on or the new theory you are trying to develop. But the first big step in analogy is actually finding a source that is worth using at all. A lot of our research showed that that is the hard step. The big creative insight is figuring out what is it that's analogous to this problem. Which of course depends on the person actually knowing such a thing, but also> being able to find it in memory when it may not be that obviously related with any kind of superficial features." 
In an earlier book, Mental Leaps: Analogy in Creative Thought, Holyoak and co-author Paul Thagard, a professor of philosophy and director of the Cognitive Science Program at the University of Waterloo, argued that the cognitive mechanics underlying analogy and abstraction is what sets humans apart from all the other species, even the great apes. They touch upon the use of analogy in politics and law but focus a chapter on the "analogical scientist" and present a list of "greatest hits" science analogies. The ancient Greeks used water waves to suggest the nature of the modern wave theory of sound. A millennium and a half later, the same analogical abstraction yielded the wave theory of light. Charles Darwin formed his evolutionary theory of natural selection by drawing a parallel to the artificial selection performed by breeders, an analogy he cited in his 1859 classic The Origin of Species. Velcro, invented in 1948 by Georges de Mestral, is an example of technological design based on visual analogy - Mestral recalled how the tiny hooks of burrs stuck to his dog's fur. Velcro later became a "source" for further analogical designs with "targets" in medicine, biology, and chemistry. According to Mental Leaps, these new domains for analogical transfer include abdominal closure in surgery, epidermal structure, molecular bonding, antigen recognition, and hydrogen bonding. Physicists currently find themselves toying with analogies in trying to unravel the puzzle of string theory, which holds promise as a grand unified theory of everything in the universe. Here the tool of analogy is useful in various contexts - not only in the discovery, development, and evaluation of an idea, but also in the exposition of esoteric hypotheses, in communicating them both among physicists and to the layperson.
Brian Greene, a Columbia University professor cum pop-culture physicist, has successfully translated the foreign realm of string theory for the general public with his best-selling book The Elegant Universe (1999) and an accompanying NOVA documentary, both replete with analogies to garden hoses, string symphonies, and sliced loaves of bread. As one profile of Greene observed, "analogies roll off his tongue with the effortless precision of a Michael Jordan lay-up." Yet at a public lecture at the Strings05 conference in Toronto, an audience member politely berated physicists for their bewildering smorgasbord of analogies, asking why the scientists couldn't reach consensus on a few key analogies so as to convey a more coherent and unified message to the public. The answer came as a disappointment. Robbert Dijkgraaf, a mathematical physicist at the University of Amsterdam, bluntly stated that the plethora of analogies is an indication that string theorists themselves are grappling with the mysteries of their work; they are groping in the dark and thus need every glimmering of analogical input they can get. "What makes our field work, particularly in the present climate of not having very much in the way of newer experimental information, is the diversity of analogy, the diversity of thinking," says Leonard Susskind, the Felix Bloch professor of theoretical physics at Stanford, and the discoverer of string theory. "Every really good physicist I know has their own absolutely unique way of thinking," says Susskind. "No two of them think alike. And I would say it's that diversity that makes the whole subject progress. I have a very idiosyncratic way of thinking. My friend Ed Witten (at Princeton's Institute for Advanced Study) has a very idiosyncratic way of thinking. We think so differently, it's amazing that we can ever interact with each other. We learn how. And one of the ways we learn how is by using analogy."
Susskind considers analogy particularly important in the current era because physics is almost going beyond the ken of human intelligence. "Physicists have gone through many generations of rewiring themselves, to learn how to think about things in a way which initially was very counterintuitive and very far beyond what nature wired us for," he says. Physicists compensate for their evolutionary shortcomings, he says, either by learning how to use abstract mathematics or by building analogies. Susskind, for his own part, deploys more of the latter. Analogy is one of his most reliable tools (visual thinking is the other). And Susskind has a few favourites that he always returns to, especially when he is stuck or confused. He thinks of black holes as an infinite lake with boats swirling toward a drain at the bottom, and he envisions the expanding universe as an inflating balloon. However, the real art of analogy, he says, "is not just making them up and using them, but knowing when they're defective, knowing their limitations. All analogies are defective at some level." A balloon eventually pops, for example, whereas a universe does not. At least not yet.

--------------------------------------------------------------------------------

Siobhan Roberts is a Toronto freelance writer and author of "King of Infinite Space: Donald Coxeter, The Man Who Saved Geometry" (Anansi), to be published in October.

======================================


From: "Nigel Cook" <nigelbryancook@hotmail.com>

To: "Guy Grantham" <epola@tiscali.co.uk>; "David Tombe" <sirius184@hotmail.com>; <jvospost2@yahoo.com>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Wednesday, August 02, 2006 7:53 PM

Subject: Re: Bosons -The Defining Question

Dear Guy,

No! Distant light coming towards us from a vast distance would approach the centre of mass in our frame of reference (we are at the centre of mass in our frame of reference because all the mass of the universe appears to be uniformly distributed around us in all directions), and hence would GAIN energy from the gravitational field and be BLUESHIFTED. A blueshift corresponds to light gaining energy as it falls towards a mass; gravitational redshift would occur to light we send out towards distant masses. In an extremely sensitive experiment by Pound (Woit says somewhere that Pound was his PhD adviser or postdoc adviser or similar), the gravitational redshift of gamma rays was measured by sending them upwards in a tower at his physics department. The result was a redshift. If the gamma rays had been sent downwards, they would have been blueshifted.

Hence you are totally wrong. Have you been dowsing again? ;-)

Kind regards,

nigel
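The sign convention in the reply above (redshift for gamma rays sent up the tower, blueshift for gamma rays sent down) can be checked with a minimal Python sketch. It assumes the standard weak-field approximation |df/f| ~ gh/c^2 and an illustrative tower height of 22.5 m, the figure commonly quoted for Pound's tower but treated here as an assumption; neither the formula nor the height appears in the emails, and `fractional_shift` is a hypothetical helper name.

```python
# Weak-field estimate of the fractional frequency shift for gamma rays
# sent vertically through a tower near Earth's surface: |df/f| ~ g*h/c^2.
G_SURFACE = 9.81          # m/s^2, approximate surface gravity
C = 299_792_458.0         # m/s, speed of light in vacuum


def fractional_shift(height_m: float, upward: bool) -> float:
    """Return df/f for light crossing a vertical distance height_m.

    Negative values are redshifts (light climbing away from Earth's centre),
    positive values are blueshifts (light falling towards it).
    """
    magnitude = G_SURFACE * height_m / C**2
    return -magnitude if upward else magnitude


if __name__ == "__main__":
    tower_height = 22.5  # m, assumed tower height, used only for illustration
    print(f"upward:   {fractional_shift(tower_height, upward=True):.2e}")
    print(f"downward: {fractional_shift(tower_height, upward=False):.2e}")
```

For a 22.5 m height the shift comes out at roughly 2.5 x 10^-15, equal in size and opposite in sign for the two directions, which illustrates why such a sensitive measurement was needed.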

----- Original Message -----

From: "Guy Grantham" <epola@tiscali.co.uk>

To: "Nigel Cook" <nigelbryancook@hotmail.com>; "David Tombe" <sirius184@hotmail.com>; <jvospost2@yahoo.com>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Wednesday, August 02, 2006 6:54 PM

Subject: Re: Bosons -The Defining Question

Dear Nigel

I take it you mean that gravitational redshift and non-linear absorption do not work to explain the cosmological redshift relating to the age of the universe, for they surely cause redshifts?

Best regards, Guy

From: "Nigel Cook" <nigelbryancook@hotmail.com>

To: "Guy Grantham" <epola@tiscali.co.uk>; "David Tombe" <sirius184@hotmail.com>; <jvospost2@yahoo.com>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Thursday, August 03, 2006 9:54 AM

Subject: Re: Bosons -The Defining Question

Dear Guy,

I said gravitational redshift has been measured on Earth using gamma rays by Professor Pound, and I said gravitational redshift does not work to explain the recession of stars.

Because light is falling in towards us, you would expect, if anything, that distant starlight would be blueshifted by the energy it gains falling through the gravitational field, rather than redshifted (which occurs when light moves away from a centre of mass, not towards it). However, light from immense distances was emitted only a short time after the BB, when the density of the universe was much higher. Newton's proof for a large, spherically symmetric system (the observed universe is spherically symmetric as seen by us along any radial line) is that the net gravity effect is due to the mass enclosed by a sphere of radius equal to your distance from the centre of mass. For example, if you are at half the earth's radius, you can calculate gravity by working out the amount of mass which exists in the earth out to half the radius of the earth, and then assuming it is all located at the centre of the earth (the contributions from the shells outside you all cancel out exactly).
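The half-radius example can be worked through with a short Python sketch. It assumes, purely for illustration, that the earth has uniform density (the real earth is denser towards its core, so real values differ); the constants are standard values supplied here, and the function names are hypothetical.

```python
# Newton's shell theorem applied inside the Earth, ASSUMING uniform density
# (the real Earth is denser towards its core, so real values differ).
G = 6.674e-11        # m^3 kg^-1 s^-2, gravitational constant
M_EARTH = 5.972e24   # kg
R_EARTH = 6.371e6    # m, mean radius


def enclosed_mass_uniform(r_m: float) -> float:
    """Mass inside radius r_m for a uniform-density Earth."""
    if r_m >= R_EARTH:
        return M_EARTH
    return M_EARTH * (r_m / R_EARTH) ** 3


def gravity_inside(r_m: float) -> float:
    """Field at radius r_m: enclosed mass treated as a point at the centre;
    the shells outside r_m contribute nothing."""
    return G * enclosed_mass_uniform(r_m) / r_m**2


g_surface = gravity_inside(R_EARTH)
g_half = gravity_inside(R_EARTH / 2)
print(f"surface: {g_surface:.2f} m/s^2, half radius: {g_half:.2f} m/s^2")
```

Under the uniform-density assumption, one eighth of the mass sits at half the distance, so gravity at half the radius is exactly half the surface value: (1/8) / (1/2)^2 = 1/2.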

So a ray of light travelling from a very distant supernova is continuously subject to a falling [diminishing] gravitational field as it approaches us, for two reasons: (1) the density of the universe is falling as time passes and the universe expands, and (2) the light as it approaches is subject to a net effect equal to the gravity due to the mass enclosed by a sphere around us with radius equal to the distance of the light ray from us. Effect (1), the falling density of the universe, is severe because the volume of the universe increases as the cube of time (since there is no observational gravitational retardation), and density = mass/volume, where volume = (4/3)π(ct)^3, ct being the horizon radius and t the time after the BB (ignoring Guth's inflation, which I've already said is superfluous under the gravity mechanism, since the CBR is smoothed correctly by a totally different mechanism than the ad hoc inflation speculation which Guth gives). Effect (2) is also severe. At immense distances, gravitational blueshift may therefore be at a maximum but will still be small, because the inverse square law of distance makes gravity extremely weak, never mind the issue that gravity in the gravity mechanism falls to zero at the horizon because it is an effect of surrounding expansion. So [after detailed calculations have been done to determine it quantitatively, gravitational] blueshift is trivial.
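Effects (1) and (2) can be illustrated with a Python sketch under the simplified assumptions stated in the paragraph: a fixed total mass spread uniformly through a sphere of radius ct, no inflation and no gravitational retardation. The mass `M_PLACEHOLDER` is a hypothetical number chosen only so the code runs; the printed density ratio does not depend on it. `enclosed_field` applies Newton's enclosed-mass result from the previous paragraph, and both function names are hypothetical.

```python
import math

C = 299_792_458.0                      # m/s, speed of light
G = 6.674e-11                          # m^3 kg^-1 s^-2, gravitational constant
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def horizon_density(mass_kg: float, t_s: float) -> float:
    """rho = M / ((4/3) pi (c t)^3): fixed mass spread through a sphere of radius ct."""
    return mass_kg / ((4.0 / 3.0) * math.pi * (C * t_s) ** 3)


def enclosed_field(rho: float, r_m: float) -> float:
    """Shell-theorem field at distance r_m for uniform density rho:
    G * rho * (4/3) pi r^3 / r^2 = (4/3) pi G rho r."""
    return (4.0 / 3.0) * math.pi * G * rho * r_m


M_PLACEHOLDER = 1.0e53                 # kg, hypothetical total mass for illustration only
t_now = 15e9 * SECONDS_PER_YEAR        # s, the 15 billion years used in this document
rho_now = horizon_density(M_PLACEHOLDER, t_now)
rho_early = horizon_density(M_PLACEHOLDER, t_now / 10)
print(f"density ratio (t/10 vs t): {rho_early / rho_now:.1f}")
```

Because density scales as 1/t^3 in this model, light emitted at a tenth of the present age set off through a universe a thousand times denser, while the enclosed-mass field at fixed density grows only linearly with the light's distance r from us.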

Gravitational redshift is extremely difficult to measure in the blackbody light the sun gives off, because it is so small. What you are doing when measuring redshift in the light spectrum of a nearby star or the sun is ascertaining the frequencies of the spectral lines, then comparing the Balmer etc. series of line spectra with the line spectra measured in the laboratory here on Earth, where there is no net effect (light going sideways rather than vertically, for instance, would eliminate gravitational red/blue shift, but it is trivial anyhow, which is why gamma rays were used in the experiment: it is easier to determine their exact frequency, as they have more energy to start with than light photons).
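To put a number on how small the solar effect is, here is a Python sketch that computes hydrogen Balmer line wavelengths from the Rydberg formula and the weak-field gravitational redshift GM/(Rc^2) for light leaving the Sun's surface. The constants and formulas are standard textbook values supplied here, not figures from the email, and the function names are hypothetical.

```python
R_H = 1.09678e7           # m^-1, Rydberg constant for hydrogen (approximate)
GM_SUN = 1.32712e20       # m^3 s^-2, solar gravitational parameter
R_SUN = 6.957e8           # m, solar radius
C = 299_792_458.0         # m/s, speed of light


def balmer_wavelength(n: int) -> float:
    """Vacuum wavelength (m) of the hydrogen Balmer line from level n to level 2."""
    if n < 3:
        raise ValueError("Balmer lines start at n = 3")
    return 1.0 / (R_H * (1.0 / 4.0 - 1.0 / n**2))


def solar_surface_redshift() -> float:
    """Weak-field df/f ~ GM/(R c^2) for light escaping the Sun's surface,
    ignoring Earth's own potential, Doppler shifts and line broadening."""
    return GM_SUN / (R_SUN * C**2)


z = solar_surface_redshift()
for n in (3, 4, 5):
    lam = balmer_wavelength(n)
    print(f"n={n}: {lam * 1e9:.2f} nm, gravitational shift {lam * z * 1e12:.2f} pm")
```

The fractional shift is about 2 x 10^-6, which moves the H-alpha line near 656 nm by only about 1.4 picometres; that shift has to be separated from the temperature and magnetic-field effects on the line profile.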

Because of the high temperature and intense magnetic field in the sun, the line spectra are only approximately those determined in the laboratory on Earth. The Balmer equation indicates the relative frequencies of lines for a particular element, but in reality high temperatures and magnetic fields cause "noise" effects which outweigh the very small gravitational redshift from the sun. Admittedly the Earth's mass is trivial compared to the Sun's, but with gamma rays going up a tower you have complete control over the temperature and magnetic field those rays are subjected to from the time of emission to the time of measurement. Also, since we are far closer to the Earth's centre of mass (4000 miles) than to the Sun's (92,000,000 miles), the inverse square law means that the gravitational acceleration at Earth's surface is relatively high despite Earth having trivial mass compared to the Sun. This is why the redshift can be measured on Earth more easily than from the Sun, although obviously light leaving the Sun's surface is in a stronger gravitational field.
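The inverse-square comparison can be checked with the distances this paragraph quotes (4000 miles to Earth's centre, 92,000,000 miles to the Sun's). The sketch assumes standard values for the gravitational parameters GM of the Earth and Sun and for the solar radius, which are supplied here rather than taken from the email; `inverse_square_accel` is a hypothetical helper name.

```python
GM_EARTH = 3.986e14    # m^3 s^-2, Earth's gravitational parameter
GM_SUN = 1.327e20      # m^3 s^-2, Sun's gravitational parameter
R_SUN = 6.957e8        # m, solar radius
MILE = 1609.344        # m per statute mile


def inverse_square_accel(gm: float, distance_m: float) -> float:
    """Newtonian acceleration gm / d^2 at distance d from a point mass."""
    return gm / distance_m**2


g_earth_surface = inverse_square_accel(GM_EARTH, 4000 * MILE)
g_sun_at_earth = inverse_square_accel(GM_SUN, 92_000_000 * MILE)
g_sun_surface = inverse_square_accel(GM_SUN, R_SUN)
print(f"Earth's pull at its surface: {g_earth_surface:.2f} m/s^2")
print(f"Sun's pull at Earth's distance: {g_sun_at_earth:.4f} m/s^2")
print(f"Sun's pull at its own surface: {g_sun_surface:.0f} m/s^2")
```

With these inputs Earth's surface pull is roughly 1,600 times the Sun's pull at our distance, while the Sun's own surface gravity (about 274 m/s^2) is far higher, consistent with the final sentence of the paragraph.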

Finally you say: "If an em photon from the source were to be (totally) absorbed by a filtering atom or ion it would simply diminish amplitude of the incoming beam and not shift its freq, I am not referring to filtering or scattering." - Guy

There is no evidence that this can occur: no evidence that there are atoms or ions in space which will totally absorb and then presumably re-emit radiation at a reduced frequency. This is speculation, like UFOs, the Loch Ness Monster, religious stuff, string theory, 10 dimensions, 11 dimensions, parallel universes, dowsing, etc. The evidence is that the recession redshift model is experimentally shown to work, and it fits other evidence of the BB, like the abundances of the different light elements in the universe, and it also makes predictions, like gravity (although I can't publish that in Classical and Quantum Gravity, despite the kindly editor, because the peer-reviewers are all behaving like arrogant thugs obsessed with string theory or their own pretentious pet theories which predict nothing).

Kind regards,

nigel

----- Original Message -----

From: "Guy Grantham" <epola@tiscali.co.uk>

To: "Nigel Cook" <nigelbryancook@hotmail.com>; "David Tombe" <sirius184@hotmail.com>; <jvospost2@yahoo.com>; <imontgomery@atlasmeasurement.com.au>; <Monitek@aol.com>

Cc: <marinsek@aon.at>; <pwhan@atlasmeasurement.com.au>; <graham@megaquebec.net>; <andrewpost@gmail.com>; <george.hockney@jpl.nasa.gov>; <tom@tomspace.com>

Sent: Wednesday, August 02, 2006 10:23 PM

Subject: Re: Bosons -The Defining Question

Dear Nigel

Neither dowsing nor drowsing!

Yes! I am correct then that you hold they "do not work to explain the cosmological redshift".

I referred to the redshift of light leaving the Sun towards us - which you seemed to deny to occur!

"You have put the Gravitational/Einstein redshifts (eg due to the Sun) [gg]
Guy, gravitational redshift can be calculated and does not work. [nbc]"

I can imagine that intermediate stellar objects would equally blueshift then redshift light passing by, with no change of original freq. (I had read of the experiment to measure redshift of gamma rays sent upwards a few metres, which proved that gravitational redshift does occur.)

Does your measure of the perimeter of the universe include a correction for the gravitational blueshift of incoming radiation, and would it mean that the radius of vision is limited to an 'event horizon' of a bigger universe rather than an expanding-still-visible universe?

My other point has not been resolved for me. Non-linear absorption of em radiation over long distance causes a redshift of frequency by partial energy loss. This effect would be distance related, as it is in practice for radio waves on Earth. Over cosmological distances all freqs (spectral lines) would exhibit the same shift by the same probability logic as your averaging of push/pull virtual bosons.

If an em photon from the source were to be (totally) absorbed by a filtering atom or ion it would simply diminish amplitude of the incoming beam and not shift its freq, I am not referring to filtering or scattering.

Best regards, Guy