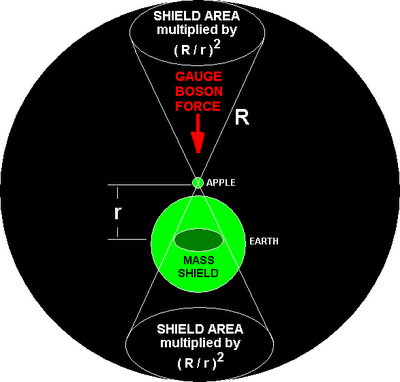

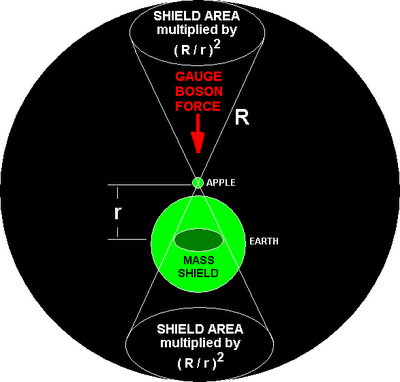

Gauge boson radiation shielding cancels out (although there is compression equal to the contraction predicted by general relativity) in symmetrical situations, but gravity is introduced where a mass shadows you, namely in the double-cone areas shown below. In all other directions the symmetry cancels out and produces no net force. Hence gravity is predicted.

Empirical evidence and proofs of heuristic mechanisms for a simple mechanism of continuum field theory (general relativity and Maxwell’s equations) and quantum field theory, including quantum mechanics

Objective: Using empirical facts, to prove mechanisms that make other predictions, allowing independent verification

General relativity and elementary particles | Quantum mechanics and electromagnetism | Quantum field theory and forces | Fact based predictions versus observations

By Hubble’s empirically defensible and scientifically accurate law, big bang has speed from 0 to c with spacetime [‘The views of space and time which I wish to lay before you have sprung from the soil of experimental physics, and therein lies their strength. They are radical. Henceforth space by itself, and time by itself, are doomed to fade away into mere shadows, and only a kind of union of the two will preserve an independent reality.’ – Hermann Minkowski, 1908] of 0 toward 15 billion years, giving outward force of

F = ma = m.dv/dt = m(c - 0) / (age of universe) = mc/t ~ mcH = 7 x 10^43 Newtons.
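As a rough arithmetic check on the figure above, the sketch below takes the quoted age of 15 billion years, forms the effective acceleration c/t, and back-solves the mass m that the quoted 7 x 10^43 N would imply. The mass is not stated in the text, so the printed value is only what the quoted numbers imply; the constant and function names are illustrative assumptions.

```python
# Rough check of F = mc/t ~ mcH using only the figures quoted in the text.
SECONDS_PER_YEAR = 3.156e7   # approximate length of a year, s
C = 2.998e8                  # speed of light, m/s
AGE_YEARS = 15e9             # age of the universe quoted in the text
F_QUOTED = 7e43              # outward force quoted in the text, N


def effective_acceleration(age_years=AGE_YEARS):
    """Return a = c/t, the acceleration used in F = ma = mc/t."""
    return C / (age_years * SECONDS_PER_YEAR)


def implied_mass(force=F_QUOTED, age_years=AGE_YEARS):
    """Back-solve m = F / (c/t). An inference, not a figure from the text."""
    return force / effective_acceleration(age_years)


if __name__ == "__main__":
    print(f"a = c/t   = {effective_acceleration():.3e} m/s^2")
    print(f"implied m = {implied_mass():.3e} kg")
```

With these inputs the implied mass comes out at about 10^53 kg.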

Newton’s 3rd law tells us there is an equal inward force, which according to the possibilities implied by the Standard Model, must be carried by gauge bosons, which predicts gravity constant G to within 1.65 %.

Proof. A simplified version of the proof so that everyone with just basic maths and physics can understand it easily; for two separate rigorous and fully accurate treatments see Proof.

1. The radiation is received by mass almost equally from all directions, coming from other masses in the universe. Because the gauge bosons are exchange radiation, the radiation is in effect reflected back the way it came if there is symmetry that prevents the mass from being moved. The result is then a mere compression of the mass by the amount mathematically predicted by general relativity, i.e., the radial contraction is by the small distance MG/(3c^2) = 1.5 mm for the contraction of the spacetime fabric by the mass in the Earth.
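The 1.5 mm figure can be reproduced directly from MG/(3c^2). The sketch below is only an arithmetic check; the rounded values for G, the Earth's mass and c are standard reference values supplied here, not numbers taken from the text.

```python
# Arithmetic check of the radial contraction MG/(3c^2) for the Earth.
G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_EARTH = 5.972e24   # mass of the Earth, kg
C = 2.998e8          # speed of light, m/s


def radial_contraction(mass_kg):
    """Return MG/(3c^2) in metres for a body of the given mass."""
    return G * mass_kg / (3 * C**2)


if __name__ == "__main__":
    print(f"Earth: {radial_contraction(M_EARTH) * 1000:.2f} mm")
```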

2. If you are near a mass, it creates an asymmetry in the radiation exchange, because the radiation normally received from the distant masses in the universe is red-shifted by high speed recession, but the nearby mass is not receding significantly. By Newton’s 2nd law the outward force of a nearby mass which is not receding (in spacetime) from you is F = ma = mv/t = mv/(x/c) = mcv/x = 0. Hence by Newton’s 3rd law, the inward force of gauge bosons coming towards you from that mass is also zero; there is no action and so there is no reaction. As a result, the local mass shields you, creating an asymmetry. So you get pushed towards the shield. This is why apples fall.

3. The universe empirically looks similar in all directions around us: hence the net unshielded gravity force is equal to the total inward force, F = ma ~ mcH, multiplied by the proportion of the shielded area of a spherical surface around the observer (see diagram). The surface area of the sphere with radius R (the average distance of the receding matter that is contributing to the inward gauge boson force) is 4πR^2. The ‘clever’ mathematical bit is that the shielding area of a local mass is projected on to this area by very simple geometry: the local mass of say the planet Earth, the centre of which is distance r from you, casts a ‘shadow’ (on the distant surface 4πR^2) equal to its shielding area multiplied by the simple ratio (R/r)^2. This ratio is very big. Because R is a fixed distance, as far as we are concerned for calculating the fall of an apple or the ‘attraction’ of a man to the Earth, the most significant variable is the 1/r^2 factor, which we all know is the Newtonian inverse square law of gravity. For two separate rigorous and fully accurate treatments see Proof.

Clarifications to the nature of the vacuum

In the last couple of posts on this blog, some facts about the vacuum were clarified.
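As an aside on the shadow geometry in step 3 of the simplified proof, the projection argument can be sketched numerically. The function names and the sample numbers below are illustrative assumptions; the point is only that the shielded fraction A(R/r)^2/(4πR^2) does not depend on R and scales as 1/r^2.

```python
import math


def shadow_area(shield_area, r, big_r):
    """Area of the 'shadow' cast on the distant sphere: A * (R/r)^2."""
    return shield_area * (big_r / r) ** 2


def shielded_fraction(shield_area, r, big_r):
    """Fraction of the distant sphere (area 4*pi*R^2) covered by the shadow."""
    return shadow_area(shield_area, r, big_r) / (4 * math.pi * big_r**2)


if __name__ == "__main__":
    area = 1.0  # hypothetical shielding area, arbitrary units
    for r in (1.0, 2.0, 4.0):
        # R cancels, so two very different R values give the same fraction.
        print(r, shielded_fraction(area, r, 1e26), shielded_fraction(area, r, 5e25))
```

Doubling r cuts the shielded fraction by a factor of four, which is the inverse square dependence described in step 3.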

The strength of Coulomb's law binding atoms is the same in a bonfire as in a freezer. So the force mediators in the vacuum are quite distinct from temperature. You have to get to immense energy density/temperature before the force mediating vacuum is even indirectly affected: Levine and Koltick's 1997 PRL paper shows you have to go to 90 GeV energy just to get a 7% increase in Coulomb's law, and that results from breaking through the polarised vacuum around charge cores and exposing more of the (stronger) core charge.

Immediately after the big bang, the energy of collisions would have been strong enough to avert any screening by polarised charges of the vacuum (hence force unification). As time went on and the temperature fell, the vacuum was increasingly able to shield real charges by polarising around them, without collisions breaking the vacuum structure. The very fact that you have to renormalise in QED to get meaningful results is evidence of structure in the vacuum. If it was a 100% chaotic gas, it wouldn't be likely to be capable of much polarisation (which implies some measure of order). I've done a lot on this subject at http://feynman137.tripod.com/.

Unlike mainstream people (such as stringy theorists Professor Jacques Distler, Lubos Motl, and other loud 'crackpots'), I don't hold on to any concepts in physics as religious creed. When I state big bang, I'm referring only to those few pieces of evidence which are secure, not to all the speculative conjectures which are usually glued to it by the high priests.

For example, inflationary models are speculative conjectures, as is the current mainstream mathematical description, the Lambda CDM (Lambda-Cold Dark Matter) model: http://en.wikipedia.org/wiki/Lambda-CDM_model

That model is false as it fits the general relativity field equation to observations by adding unobservables (dark energy, dark matter) which would imply that 96 % of the universe is invisible; and this 96 % is nothing to do with spacetime fabric or aether, it is supposed to speed up expansion to fit observation and bring the matter up to critical density.

There is no cosmological constant: lambda = zero, nada, zilch. See Prof. Phil Anderson who happens to be a Nobel Laureate (if you can't evaluate science yourself and instead need or want popstar type famous expert personalities with bells and whistles to announce scientific facts to you): 'the flat universe is just not decelerating, it isn't really accelerating' - http://cosmicvariance.com/2006/01/03/danger-phil-anderson/ Also my comment there http://cosmicvariance.com/2006/01/03/danger-phil-anderson/#comment-16222

The 'acceleration' of the universe observed is the failure of the 1998 mainstream model of cosmology to predict the lack of gravitational retardation in the expansion of distant matter (observations were of distant supernovae, using automated CCD telescope searches).

Two years before the first experimental results proved it, back in October 1996, Electronics World published (via the letters column) my paper which showed that the big bang prediction was false, because there is no gravitational retardation of distant receding matter. This prediction comes very simply from the pushing mechanism for gravity in the receding universe context.

Virtual particles exist in the vacuum without becoming real electron-positron pairs because they don't have enough energy to become real in the sense of escaping annihilation after a time of the order (Planck's constant)/(product of energy and twice pi). Random fluctuations in the local energy density of the vacuum, caused by variation in the abundance of force field mediators, statistically allow pairs of oppositely charged particles to appear for a brief, transient period. You could call the vacuum electrons 'transient electrons' instead of virtual electrons; it would be confusing to call them the same name as real ones. Karl Popper pointed out in his 1934 Logic of Scientific Discovery that the Heisenberg uncertainty principle is a natural scattering relationship.

If you have a gas which is 50% positrons and 50% electrons, electron-electron collisions will result in scatter while electron-positron collisions result in annihilation and production of radiation. This physical process has a completely causal mechanism for the resulting statistics. It is a purely causal effect. No hocus pocus. If you don't like the word virtual, you're not saying anything scientific, and if you think the immense number of transient electrons and positrons in the vacuum should be confused with what people think of electrons (bound to atoms, emitted from nuclei as beta particles, etc.), then you're causing confusion. Similarly, the mainstream decision to use the word vacuum to loosely refer to an empty volume as well as the spacetime fabric within a given volume of apparently empty space is unhelpful to understanding general relativity, quantum field theory, etc. Calculations ("Shut up and calculate" - Feynman) such as I do at http://feynman137.tripod.com/ get suppressed as a result.

I've shown on my home page how you predict the masses of neutron, proton (quark based) and other particles. There are going to be far more virtual electrons and positrons than anything else according to Heisenberg's law, since the product of the uncertainties in energy and time is a fixed minimum fraction of Planck's constant, and the next charged particle after the electron is 206 times as massive (the muon). Therefore, assuming all particles are equally likely to appear in the vacuum, then Heisenberg's energy-time relationship says that at any given time the vacuum is almost entirely composed of charges with the least mass-energy, i.e., electrons; it will only contain about 0.5 % as many muons as electrons, and all other (even heavier) virtual charged particles will exist in abundances below 0.5 % of the electron abundance.
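The 0.5 % figure follows from the argument above if the time a virtual particle survives scales as 1/(mass-energy), so that relative abundance scales the same way. The sketch below just does that division; the rest mass-energies are standard rounded values supplied here, and the 1/mass scaling is the assumption taken from the paragraph above.

```python
# Relative abundance of virtual charges, assuming lifetime ~ 1/(mass-energy).
ELECTRON_MASS_MEV = 0.511   # electron rest mass-energy, MeV
MUON_MASS_MEV = 105.66      # muon rest mass-energy, MeV


def relative_abundance(mass_mev, reference_mev=ELECTRON_MASS_MEV):
    """Abundance relative to the reference particle under the 1/mass assumption."""
    return reference_mev / mass_mev


if __name__ == "__main__":
    print(f"muon/electron mass ratio: {MUON_MASS_MEV / ELECTRON_MASS_MEV:.1f}")
    print(f"muons per electron:       {relative_abundance(MUON_MASS_MEV):.2%}")
```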

So the virtual charges of the vacuum are almost entirely electrons and positrons. I'm not the only one with suppression problems on this, apparently. Even Professor Koltick, who published the 1997 heuristic experimental QFT work in PRL, has apparently had difficulties:

From: "Guy Grantham" <epola@tiscali.co.uk>

To: "Nigel Cook" <nigelbryancook@hotmail.com>; "ivor catt" <ivorcatt@electromagnetisn.demon.co.uk>; "Ian Montgomery" <imontgomery@atlasmeasurement.com.au>; "David Tombe" <sirius184@hotmail.com>; <bdj10@cam.ac.uk>; <forrestb@ix.netcom.com>

Cc: <ivorcatt@hotmail.com>; <ernest@cooleys.net>; <Monitek@aol.com>; <ivor@ivorcatt.com>; <andrewpost@gmail.com>; <geoffrey.landis@sff.net>; <jvospost2@yahoo.com>; <jackw97224@yahoo.com>; <graham@megaquebec.net>; <pwhan@atlasmeasurement.com.au>

Sent: Thursday, May 04, 2006 5:03 PM

Subject: Re: 137

Nigel wrote:

> 'All charges are surrounded by clouds of virtual photons, which spend part of their existence dissociated into fermion-antifermion pairs. The virtual fermions with charges opposite to the bare charge will be, on average, closer to the bare charge than those virtual particles of like sign. Thus, at large distances, we observe a reduced bare charge due to this screening effect.' - I. Levine, D. Koltick, et al., Physical Review Letters, v.78, 1997, no.3, p.424.

David Koltick told me he lost his DofE funding and had to change his area of work over his premature publication of the summary of research on return from Kamiokande, Japan when he announced "....Many physicists have speculated that when and if this is determined, an entirely new and unique physics may be discovered.... " http://www.purdue.edu/UNS/html4ever/970110.Koltick.electron.html

Regards, Guy

My email had stated:

The 137 factor arises in many ways, not merely because the orbital speed of the electron [in the ground state of a hydrogen atom] is c/137. Two examples:

(a) 'It had been an audacious idea that particles as small as electrons could have spin and, indeed, quite a lot of it... the 'surface of the electron' would have to move 137 times as fast as the speed of light. Nowadays such objections are simply ignored.' - Professor Gerard 't Hooft, In Search of the Ultimate Building Blocks, Cambridge University Press, 1997, p27

(b) Heisenberg's uncertainty says pd = h/(2.Pi), where p is uncertainty in momentum, d is uncertainty in distance. This comes from his imaginary gamma ray microscope, and is usually written as a minimum (instead of with "=" as above), since there will be other sources of uncertainty in the measurement process.

For light wave momentum p = mc, pd = (mc)(ct) = Et

where E is uncertainty in energy (E = mc^2), and t is uncertainty in time.

Hence, Et = h/(2.Pi), so t = h/(2.Pi.E), so d/c = h/(2.Pi.E), giving d = hc/(2.Pi.E).

This result is used to show that an 80 GeV energy W or Z gauge boson will have a range of 10^-17 m.
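The range formula d = hc/(2.Pi.E) is easy to evaluate using the standard combination hc/(2.Pi) ≈ 197.327 MeV fm. This is a minimal sketch under that assumption, with illustrative names. For 80 GeV it gives a few times 10^-18 m, which is within an order of magnitude of the rounded figure quoted above.

```python
# Evaluate d = hc/(2*pi*E) for a gauge boson of a given energy.
HBAR_C_MEV_FM = 197.327   # hc/(2*pi) in MeV * femtometres
FM_TO_M = 1e-15           # metres per femtometre


def boson_range_m(energy_gev):
    """Return d = hc/(2*pi*E) in metres for an energy given in GeV."""
    energy_mev = energy_gev * 1000.0
    return HBAR_C_MEV_FM / energy_mev * FM_TO_M


if __name__ == "__main__":
    print(f"80 GeV boson: d = {boson_range_m(80.0):.2e} m")
```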

So it's OK. Now, E = Fd implies d = hc/(2.Pi.E) = hc/(2.Pi.Fd).

Hence F = hc/(2.Pi.d^2). This force is 137.036 times higher than Coulomb's law for unit fundamental charges. Notice that in the last sentence I've suddenly gone from thinking of d as an uncertainty in distance, to thinking of it as actual distance between two charges; but the gauge boson has to go that distance to cause the force anyway.
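The factor of 137.036 can be checked by dividing F = hc/(2.Pi.d^2) by Coulomb's law for two unit charges, e^2/(4.Pi.ε0.d^2); the distance d cancels. The sketch below uses standard SI values for the constants, which are supplied here and not taken from the text.

```python
import math

# Ratio of F = hc/(2*pi*d^2) to Coulomb's law e^2/(4*pi*eps0*d^2).
H = 6.62607015e-34           # Planck's constant, J s
C = 299792458.0              # speed of light, m/s
E_CHARGE = 1.602176634e-19   # elementary charge, C
EPS0 = 8.8541878128e-12      # permittivity of free space, F/m


def force_ratio():
    """Return [hc/(2*pi)] / [e^2/(4*pi*eps0)]; the d^2 factors cancel."""
    heisenberg_term = H * C / (2 * math.pi)
    coulomb_term = E_CHARGE**2 / (4 * math.pi * EPS0)
    return heisenberg_term / coulomb_term


if __name__ == "__main__":
    print(f"F / F_Coulomb = {force_ratio():.3f}")
```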

'... the Heisenberg formulae can be most naturally interpreted as statistical scatter relations, as I proposed [in the 1934 German publication, The Logic of Scientific Discovery]... There is, therefore, no reason whatever to accept either Heisenberg's or Bohr's subjectivist interpretation of quantum mechanics.' - Sir Karl R. Popper, Objective Knowledge, Oxford University Press, 1979, p. 303.

Note: statistical scatter gives the energy form of Heisenberg's equation, since the vacuum is full of gauge bosons carrying momentum like light, and exerting vast pressure; this gives the foam vacuum. Clearly what's physically happening is that the true force is 137.036 times Coulomb's law, so the real charge is ~137.036e. All that the detailed calculations of the Standard Model are really modelling are the vacuum processes for different types of virtual particles and gauge bosons. The whole mainstream way of thinking about the Standard Model is related to energy.

What is really happening is that at higher energies you knock particles together harder, so their protective shield of polarised vacuum particles gets partially breached, and you can experience a stronger force mediated by different particles! This is reduced by the correction factor 1/137.036 because most of the charge is screened out by polarised charges in the vacuum around the electron core.

'All charges are surrounded by clouds of virtual photons, which spend part of their existence dissociated into fermion-antifermion pairs. The virtual fermions with charges opposite to the bare charge will be, on average, closer to the bare charge than those virtual particles of like sign. Thus, at large distances, we observe a reduced bare charge due to this screening effect.' - I. Levine, D. Koltick, et al., Physical Review Letters, v.78, 1997, no.3, p.424.

Brian Josephson, a defender of stringy speculation, forgets to mention that string theory doesn't make any testable predictions, unlike Newtonian mechanics. He also fails to defend string theory using his own ESP theories as he attempted in his paper on arXiv.org at http://arxiv.org/abs/physics/0312012: 'A model consistent with string theory is proposed for so-called paranormal phenomena such as extra-sensory perception (ESP).'

The 20-year failure of string theory/ESP doesn't convince Brian that it is NOT EVEN WRONG. For the failure of string theory see Dr Peter Woit's blog called NOT EVEN WRONG: http://www.math.columbia.edu/~woit/wordpress/

Brian is now being censored off arxiv by U. of Texas' Prof. Jacques Distler who controls/censors the hep-th part and also censors trackbacks from Dr Woit's blog. Both are censored, apparently, for causing an embarrassment to string theory.

Brian is too ridiculously pro-stringy religion, while Peter is too far the other way. Like Goldilocks, the well respected Professor Distler likes his stringy porridge served just right; neither too sweet nor too sour.

The number 377 ohms is implied in the 1959 book Modern Physics:

'It has been supposed that empty space has no physical properties but only geometrical properties. No such empty space without physical properties has ever been observed, and the assumption that it can exist is without justification. It is convenient to ignore the physical properties of space when discussing its geometrical properties, but this ought not to have resulted in the belief in the possibility of the existence of empty space having only geometrical properties... It has specific inductive capacity and magnetic permeability.' - Professor H.A. Wilson, FRS, Modern Physics, Blackie & Son Ltd, London, 4th ed., 1959, p. 361.
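The 377 ohms mentioned above is the impedance of free space, which follows from the two vacuum properties Wilson names: permittivity ('specific inductive capacity') and magnetic permeability. A minimal sketch of the arithmetic, using standard SI values supplied here and not taken from the quotation:

```python
import math

# Impedance of free space from the vacuum permeability and permittivity.
MU0 = 1.25663706212e-6    # magnetic permeability of free space, H/m
EPS0 = 8.8541878128e-12   # permittivity of free space, F/m


def vacuum_impedance():
    """Return Z = sqrt(mu0 / eps0) in ohms."""
    return math.sqrt(MU0 / EPS0)


def light_speed():
    """Return c = 1 / sqrt(mu0 * eps0) in m/s, from the same two constants."""
    return 1.0 / math.sqrt(MU0 * EPS0)


if __name__ == "__main__":
    print(f"Z = {vacuum_impedance():.2f} ohms")
    print(f"c = {light_speed():.4e} m/s")
```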

'All charges are surrounded by clouds of virtual photons, which spend part of their existence dissociated into fermion-antifermion pairs. The virtual fermions with charges opposite to the bare charge will be, on average, closer to the bare charge than those virtual particles of like sign. Thus, at large distances, we observe a reduced bare charge due to this screening effect.' - I. Levine, D. Koltick, et al., Physical Review Letters, v.78, 1997, no.3, p.424.

'Recapitulating, we may say that according to the general theory of relativity, space is endowed with physical qualities... According to the general theory of relativity space without ether is unthinkable.' - Albert Einstein, Leyden University lecture on 'Ether and Relativity', 1920. (Einstein, A., Sidelights on Relativity, Dover, New York, 1952, pp. 15, 16, and 23.)

'The Michelson-Morley experiment has thus failed to detect our motion through the aether, because the effect looked for - the delay of one of the light waves - is exactly compensated by an automatic contraction of the matter forming the apparatus... The great stumbling-block for a philosophy which denies absolute space is the experimental detection of absolute rotation.' - Professor A.S. Eddington (who confirmed Einstein's general theory of relativity in 1919), FRS, Space Time and Gravitation, Cambridge University Press, Cambridge, 1921, pp. 20, 152.

'Some distinguished physicists maintain that modern theories no longer require an aether. I think all they mean is that, since we never have to do with space and aether separately, we can make one word serve for both, and the word they prefer is 'space'.' - Prof. A.S. Eddington, New Pathways in Science, v2, p39, 1935.

'The idealised physical reference object, which is implied in current quantum theory, is a fluid permeating all space like an aether.' - Sir Arthur S. Eddington, FRS, Relativity Theory of Protons and Electrons, Cambridge University Press, Cambridge, 1936, p. 180.

'Looking back at the development of physics, we see that the ether, soon after its birth, became the enfant terrible of the family of physical substances... We shall say our space has the physical property of transmitting waves and so omit the use of a word we have decided to avoid. The omission of a word from our vocabulary is of course no remedy; the troubles are indeed much too profound to be solved in this way. Let us now write down the facts which have been sufficiently confirmed by experiment without bothering any more about the 'e---r' problem.' - Albert Einstein and Leopold Infeld, Evolution of Physics, 1938, pp. 184-5.

So the contraction of the Michelson-Morley instrument made it fail to detect absolute motion. This is why special relativity needs replacement with a causal general relativity:

'... with the new theory of electrodynamics [vacuum filled with virtual particles] we are rather forced to have an aether.' - Paul A. M. Dirac, 'Is There an Aether?,' Nature, v168, 1951, p906. (If you have a kid playing with magnets, how do you explain the pull and push forces felt through space? As 'magic'?) See also Dirac's paper in Proc. Roy. Soc. v.A209, 1951, p.291.

'It seems absurd to retain the name 'vacuum' for an entity so rich in physical properties, and the historical word 'aether' may fitly be retained.' - Sir Edmund T. Whittaker, A History of the Theories of the Aether and Electricity, 2nd ed., v1, p. v, 1951.

'Scientists have thick skins. They do not abandon a theory merely because facts contradict it. They normally either invent some rescue hypothesis to explain what they then call a mere anomaly or, if they cannot explain the anomaly, they ignore it, and direct their attention to other problems. Note that scientists talk about anomalies, recalcitrant instances, not refutations. History of science, of course, is full of accounts of how crucial experiments allegedly killed theories. But such accounts are fabricated long after the theory had been abandoned. ... What really count are dramatic, unexpected, stunning predictions: a few of them are enough to tilt the balance; where theory lags behind the facts, we are dealing with miserable degenerating research programmes. Now, how do scientific revolutions come about? If we have two rival research programmes, and one is progressing while the other is degenerating, scientists tend to join the progressive programme. This is the rationale of scientific revolutions. ... Criticism is not a Popperian quick kill, by refutation. Important criticism is always constructive: there is no refutation without a better theory. Kuhn is wrong in thinking that scientific revolutions are sudden, irrational changes in vision. The history of science refutes both Popper and Kuhn: on close inspection both Popperian crucial experiments and Kuhnian revolutions turn out to be myths: what normally happens is that progressive research programmes replace degenerating ones.' - Imre Lakatos, Science and Pseudo-Science, pages 96-102 of Godfrey Vesey (editor), Philosophy in the Open, Open University Press, Milton Keynes, 1974.

http://cosmicvariance.com/2006/05/04/bad-science-journalism:

Science on May 6th, 2006 at 7:48 am

“We’ve had a eighty freaking years of physicists, when explaining QM (and to a rather lesser extent relativity) aggressively preferring to explain things as “Gee Whiz, the universe is the strangest thing out there, far beyond the ken of the human mind” rather than “things are different when they are small; here’s what we understand; here’s what we don’t”. Who can be surprised when the chickens come home to roost, when journalists now accept whatever they’re fed uncritically?” - Maynard Handley

“When well-known and respected physicists are over-hyping their highly speculative and almost certainly wrong ideas, it can be difficult for non-specialists to see this and get the story right. … I’ve often been pleasantly surprised at how many journalists are able to see through the hype, sometimes even when many trained physicists don’t seem to be able to. While it would be great if journalists would always recognize hype for what it is and not repeat it uncritically, it also would be great if scientists would act more responsibly and not over-hype highly speculative ideas, either their own or other people’s.” - Peter Woit

I can’t believe these comments! Nobody sees through hype, it is part of the social culture of physics. Consensus is what mainstream science is all about when you get to untested or ad hoc models fitted to measurements. Few people are clear which parts of QFT or GR are 100% justified empirically. So you get all kinds of crazy extensions based on philosophical interpretations being defended by the accuracy to which you can calculate the magnetic moment of an electron …

Mark on May 6th, 2006 at 3:00 pm

OK boys, isn’t there some forum on which you could go bitch about professional physicists without hijacking one of our threads?

The time-dependent Schroedinger equation is analogous to Maxwell’s displacement current i = dD/dt. The former is just a quantized complex version of the latter. Treat the Hamiltonian as a regular quantity as Heaviside showed you can do for many operators. Then the solution to the time dependent Schroedinger equation is: wavefunction at time t after initial time = initial wavefunction.exp(-iHt/[h bar]). This is a general analogy to the exponential capacitor charging you get from displacement current. Maxwell’s displacement current is i = dD/dt where D is product of electric field (v/m) and permittivity. Because the current flowing into the first capacitor plate falls off exponentially as it charges up, there is radio transmission transversely like radio from an antenna (radio power is proportional to the rate of change of current in the antenna, which can be a capacitor plate). Hence the reality of displacement current is radio transmission. As each plate of a circuit capacitor acquires equal and opposite charge simultaneously, the radio transmission from each plate is an inversion of that from the other, so the superimposed signal strength away from the capacitor is zero at all times. Hence radio losslessly performs the role of induction which Maxwell attributed to aetherial displacement current. Schroedinger’s time-dependent equation says the product of the hamiltonian and wavefunction equals i[h bar].d[wavefunction]/dt, which is a general analogy to Maxwell’s i = dD/dt. It’s weird that people seem prejudiced against the reconciliation of classical and quantum electrodynamics. The Dirac equation is a relativized form of Schroedinger's equation. Why doesn’t anybody take the analogy of displacement current seriously?
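The quoted solution, wavefunction = initial wavefunction.exp(-iHt/[h bar]), can be checked numerically for the simplest case in which the Hamiltonian is treated as an ordinary number E, as the comment suggests. This is a minimal sketch under that assumption; the 1 eV energy, the time step and the function names are illustrative choices, not values from the text.

```python
import cmath

HBAR = 1.054571817e-34   # reduced Planck constant [h bar], J s
E_1EV = 1.602176634e-19  # illustrative energy of 1 eV, J


def psi(t, energy, psi0=1.0 + 0.0j):
    """Solution psi(t) = psi0 * exp(-i*E*t/hbar) for a scalar Hamiltonian E."""
    return psi0 * cmath.exp(-1j * energy * t / HBAR)


def schroedinger_residual(t, energy, dt=1e-19):
    """Return i*hbar*dpsi/dt - E*psi, using a central finite difference."""
    dpsi_dt = (psi(t + dt, energy) - psi(t - dt, energy)) / (2 * dt)
    return 1j * HBAR * dpsi_dt - energy * psi(t, energy)


if __name__ == "__main__":
    t = 1e-15  # seconds
    print("relative residual:", abs(schroedinger_residual(t, E_1EV)) / E_1EV)
    print("|psi(t)|:", abs(psi(t, E_1EV)))
```

The residual is small compared with E, so the exponential does satisfy the equation in this scalar case. For a real E the exponent is purely imaginary, so the modulus of the wavefunction stays at its initial value.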

COPY OF SOME NEW MATERIAL FROM http://feynman137.tripod.com/ (apologies that the equations will come out disjointed here and that there will be massive spaces around tables):

Wolfgang Pauli’s letter of Dec 4, 1930 to a meeting of beta radiation specialists in Tubingen:

‘Dear Radioactive Ladies and Gentlemen, I have hit upon a desperate remedy regarding ... the continuous beta-spectrum ... I admit that my way out may seem rather improbable a priori ... Nevertheless, if you don’t play you can’t win ... Therefore, Dear Radioactives, test and judge.’

(Quoted in footnote of page 12, http://arxiv.org/abs/hep-ph/0204104 .)

Pauli's neutrino was introduced to maintain conservation of energy in observed beta spectra where there is an invisible energy loss. It made definite predictions. Contrast this to stringy SUSY today.

Although Pauli wanted renormalization in all field theories and used this to block non-renormalizable theories, others like Dirac opposed renormalization to the bitter end, even in QED where it was empirically successful! (See Chris Oakley’s home page.)

Gerard ‘t Hooft and Martinus Veltman in 1970 showed Yang-Mills theory is the only way to unify Maxwell's equations and QED, giving the U(1) group of the Standard Model.

In electromagnetism, the spin-1 photon interacts by changing the quantum state of the matter emitting or receiving it, via inducing a rotation in a Lie group symmetry. The equivalent theories for weak and strong interactions are respectively isospin rotation symmetry SU(2) and color rotation symmetry SU(3).

Because the gauge bosons of SU(2), SU(3) have limited range and therefore are massive, the field obviously carries most of the mass; so the field there is not just a small perturbation as it is in U(1).

Eg, a proton has a rest mass of 938 MeV but the three real quarks in it only contribute 11 MeV, so the field contributes 98.8 % of the mass. In QED, the field contributes only about 0.116 % of the magnetic moment of an electron.

I understand the detailed calculations involving renormalization; in the usual treatment of the problem there's an infinite shielding of a charge by vacuum polarisation at low energy, unless a limit or cutoff is imposed to make the charge equal the observed value. This process can be viewed as a ‘fiddle’ unless you can justify exactly why the vacuum polarisation is limited to the required value.

Hence Dirac’s reservations (and Feynman’s, too). On the other hand, just by one 'fiddle', it gives a large number of different, independent predictions like Lamb frequency shift, anomalous magnetic moment of electron etc.

The equation is simple (page 70 of http://arxiv.org/abs/hep-th/0510040 ), for modeling one corrective Feynman diagram interaction. I've read Peter say (I think) that the other couplings which are progressively smaller (a convergent series of terms) for QED, instead become a divergent series for field theories with heavy mediators. The mass increase due to the field mass-energy is by a factor of 85 for the quark-gluon fields of a proton, compared to only a factor of 1.00116 for virtual charges interacting with an electron.
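The 98.8 % and factor-of-85 figures both follow from the two proton numbers quoted earlier (938 MeV rest mass, 11 MeV from the three real quarks). The sketch below is only that arithmetic; the function names are illustrative.

```python
# Field contribution to the proton mass, from the figures quoted in the text.
PROTON_MASS_MEV = 938.0   # proton rest mass quoted in the text
QUARK_MASS_MEV = 11.0     # contribution of the three real quarks, as quoted


def field_fraction(total_mev, bare_mev):
    """Fraction of the total mass-energy attributed to the field."""
    return (total_mev - bare_mev) / total_mev


def mass_increase_factor(total_mev, bare_mev):
    """Ratio of total mass to the bare (quark-only) mass."""
    return total_mev / bare_mev


if __name__ == "__main__":
    print(f"field fraction:  {field_fraction(PROTON_MASS_MEV, QUARK_MASS_MEV):.1%}")
    print(f"increase factor: {mass_increase_factor(PROTON_MASS_MEV, QUARK_MASS_MEV):.1f}")
```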

So even as a naive, ignorant layman I can see that there are many areas where the calculations of the Standard Model could be further studied, but string theory doesn't even begin to address them. Other examples: the masses and the electroweak symmetry breaking in the Standard Model are barely described by the existing speculative (largely unpredictive) Higgs mechanism.

Gravity, the ONE force that hasn't even entered the Standard Model, is being tackled by string theorists, who - like babies - always want to try to run before learning to walk. Strings can't predict any features of gravity that can be compared to experiment. Instead, string theory is hyped as being perfectly compatible with unobserved, speculative gravitons, superpartners, etc. It doesn't even scientifically 'predict' the unnecessary gravitons or superpartners, because it can't be formulated in a quantitative way. Dirac and Pauli had predictions that were scientific, not stringy.

Dirac made exact predictions about antimatter. He predicted the rest mass-energy of a positron and the magnetic moment, so that quantitative comparisons could be done. There are no quantitative predictions at potentially testable energies coming out of string theory.

Theories that ‘predict’ unification at 10^16 times the maximum energy you can achieve in an accelerator are not science.

I just love the fact string theory is totally compatible with special relativity, the one theory which has never produced a unique prediction that hasn't already been made by Lorentz, et al. based on physical local contraction of instruments moving in a fabric spacetime.

It really fits with the overall objective of string theory: the enforcement of genuine group-think by a group of bitter, mainstream losers.

Introduction to quantum field theory (the Standard Model) and General Relativity.

Mainstream ten or eleven dimensional ‘string theory’ (which makes no testable predictions) is being hailed as consistent with special relativity. Do mainstream mathematicians want to maintain contact with physical reality, or have they accidentally gone wrong due to ‘group-think’? What will it take to get anybody interested in the testable unified quantum field theory in this paper?

Peer-review is a sensible idea if you are working in a field where you have enough GENUINE peers that there is a chance of interest and constructive criticism. However string theorists have proved controlling, biased and bigoted group-think dominated politicians who are not simply ‘not interested in alternatives’ but take pride in sneering at things they don’t have time to read!

Dirac’s equation is a relativistic version of Schroedinger’s time-dependent equation. Schroedinger’s time dependent equation is a general case of Maxwell’s ‘displacement current’ equation. Let’s prove this.

First, Maxwell’s displacement current is i = dD/dt = ε.dE/dt. In a charging capacitor, the displacement current falls as a function of time as the capacitor charges up, so:

displacement current i = -ε.d(v/x)/dt, [equation 1]

Where E has been replaced by the gradient of the voltage along the ramp of the step of energy current which is entering the capacitor (illustration above). Here x is the step width, x = ct where t is the rise time of the step.

The voltage of the step is equal to the current step multiplied by the resistance: v = iR. Maxwell’s concept of ‘displacement current’ is to maintain Kirchhoff’s and Ohm’s laws of continuity of current in a circuit for the gap interjected by a capacitor, so by definition the ‘displacement current’ is equal to the current in the wires which is causing it.

Hence [equation 1] becomes:

$i = -\varepsilon\,d(iR/x)/dt = -(\varepsilon R/x)\,di/dt$

The solution of this equation is obtained by rearranging to yield $(1/i)\,di = -x\,dt/(\varepsilon R)$, integrating this so that the left hand side becomes proportional to the natural logarithm of $i$, and the right hand side becomes $-xt/(\varepsilon R)$, and making each side a power of $e$ to get rid of the natural logarithm on the left side:

$i_t = i_0\,e^{-xt/(\varepsilon R)}$.

Now $\varepsilon = 1/(cZ)$, where $c$ is light velocity and $Z$ is the impedance of the dielectric, so:

$i_t = i_0\,e^{-xcZt/R}$.

Capacitance per unit length of capacitor is defined by $C = 1/(xcZ)$, hence

$i_t = i_0\,e^{-t/(RC)}$.

This is the standard capacitor charging result. This physically correct proof shows that the displacement current is a result of the varying current in the capacitor, $di/dt$, i.e., it is proportional to the acceleration of charge which is identical to the emission of electromagnetic radiation by accelerating charges in radio antennae. Hence the mechanism of ‘displacement current’ is energy transmission by electromagnetic radiation: Maxwell’s ‘displacement current’ $i = \varepsilon\,dE/dt$ by electromagnetic radiation induces the transient current $i_t = i_0\,e^{-t/(RC)}$. Now consider quantum field theory.
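The exponential transient derived above can be checked numerically. The following is a minimal sketch, assuming illustrative component values (R, C, and the initial current are not from the text): it integrates di/dt = -i/(RC) step by step and compares the result with the closed-form exponential.

```python
import math

def discharge_current(i0, r, c, t):
    """Closed-form transient current i_t = i0 * exp(-t / (R*C))."""
    return i0 * math.exp(-t / (r * c))

def integrate_current(i0, r, c, t_end, steps=100_000):
    """Forward-Euler integration of di/dt = -i / (R*C) from t = 0 to t_end."""
    dt = t_end / steps
    i = i0
    for _ in range(steps):
        i += -i / (r * c) * dt
    return i

# Assumed illustrative values, not taken from the text.
R = 1_000.0   # resistance in ohms
C = 1e-6      # capacitance in farads
I0 = 5e-3     # current at t = 0 in amperes
T = 2e-3      # elapsed time in seconds (two time constants)

exact = discharge_current(I0, R, C, T)
numeric = integrate_current(I0, R, C, T)
print("closed form:", exact)
print("integrated :", numeric)
```

The two printed values should agree closely, which only confirms that the exponential solves the differential equation; it says nothing about the physical interpretation argued for in the text.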

Schroedinger’s time-dependent equation is essentially saying the same thing as this electromagnetic energy mechanism of Maxwell’s ‘displacement current’: $H\psi = i\hbar\,d\psi/dt = (\tfrac{1}{2}ih/\pi)\,d\psi/dt$, where $\hbar = h/(2\pi)$. The energy flow is directly proportional to the rate of change of the wavefunction.

The energy based solution to this equation is similarly exponential: $\psi_t = \psi_0 \exp[-2\pi i H (t - t_0)/h]$
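As a quick consistency check on the exponential solution, the sketch below treats the hamiltonian as an ordinary number (a single energy value, here assumed to be 1 eV purely for illustration) and verifies by finite differences that the exponential satisfies the time-dependent equation. The function names are hypothetical helpers, not part of any library.

```python
import cmath

HBAR = 1.054571817e-34  # reduced Planck constant h/(2*pi), in J*s

def psi(t, psi0, energy, t0=0.0):
    """psi_t = psi_0 * exp(-i * H * (t - t0) / hbar), with H a plain number."""
    return psi0 * cmath.exp(-1j * energy * (t - t0) / HBAR)

def schroedinger_residual(t, psi0, energy, dt):
    """Relative size of (i*hbar*dpsi/dt - H*psi), using a central difference."""
    dpsi_dt = (psi(t + dt, psi0, energy) - psi(t - dt, psi0, energy)) / (2.0 * dt)
    lhs = 1j * HBAR * dpsi_dt
    rhs = energy * psi(t, psi0, energy)
    return abs(lhs - rhs) / abs(rhs)

ENERGY = 1.602176634e-19  # assumed energy of 1 eV, in joules
res = schroedinger_residual(t=1e-15, psi0=1.0 + 0.0j, energy=ENERGY, dt=1e-19)
print("relative residual:", res)
print("|psi| at t = 1 fs:", abs(psi(1e-15, 1.0 + 0.0j, ENERGY)))
```

The residual is small and the modulus of the wavefunction stays at 1, so unlike the decaying capacitor current the complex exponential only rotates in phase.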

The non-relativistic hamiltonian is defined as:

$H = p^2/(2m)$.

However it is of interest that the ‘special relativity’ prediction of

$H = [(mc^2)^2 + p^2c^2]^{1/2}$,

was falsified by the fact that, although the total mass-energy is then conserved, the resulting Schroedinger equation permits an initially localised electron to travel faster than light! This defect was averted by the Klein-Gordon equation, which states:

$-\hbar^2\,d^2\psi/dt^2 = [(mc^2)^2 + p^2c^2]\,\psi$,

While this is physically correct, it is second order in time rather than first order, dealing only with second-order variations of the wavefunction.

Dirac’s equation simply makes the time-dependent Schroedinger equation ($H\psi = i\hbar\,d\psi/dt$) relativistic, by inserting for the hamiltonian ($H$) a totally new relativistic expression which differs from special relativity:

$H = \alpha pc + \beta mc^2$,

where $p$ is the momentum operator. The quantities $\alpha$ and $\beta$ are not ordinary numbers: each is represented by a 4 x 4 = 16 component matrix, acting on the four-component wavefunction that is called the Dirac ‘spinor’.

The justification for Dirac’s equation is both theoretical and experimental. Firstly, it yields the Klein-Gordon equation for second-order variations of the wavefunction. Secondly, it predicts four solutions for the total energy of a particle having momentum $p$:

$E = \pm[(mc^2)^2 + p^2c^2]^{1/2}$.

Two solutions to this equation arise from the fact that momentum is directional and so can be positive or negative. The spin of an electron is $\pm\tfrac{1}{2}\hbar = \pm h/(4\pi)$. This explains two of the four solutions. The other two solutions are evident when considering the case of $p = 0$, for then $E = \pm mc^2$.
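The link between the Dirac hamiltonian and the energy formula can be illustrated with explicit matrices. This is a minimal sketch, assuming the standard Dirac representation, momentum along the z axis, and made-up values pc = 3 and mc² = 4 in arbitrary energy units. Squaring H gives (p²c² + m²c⁴) times the identity, so every solution has E = ±5, and the zero trace means the two signs occur equally often among the four solutions.

```python
def matmul(a, b):
    """Multiply two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

# Standard Dirac representation (an assumed choice), momentum along z.
ALPHA_Z = [[0, 0, 1, 0],
           [0, 0, 0, -1],
           [1, 0, 0, 0],
           [0, -1, 0, 0]]
BETA = [[1, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, -1, 0],
        [0, 0, 0, -1]]

def dirac_hamiltonian(pc, mc2):
    """H = alpha * p * c + beta * m * c^2 as a 4 x 4 matrix."""
    return [[ALPHA_Z[i][j] * pc + BETA[i][j] * mc2 for j in range(4)]
            for i in range(4)]

PC, MC2 = 3.0, 4.0                      # illustrative values only
H = dirac_hamiltonian(PC, MC2)
H_SQUARED = matmul(H, H)                # expected: (pc^2 + mc2^2) * identity
ANTICOMMUTATOR = [[x + y for x, y in zip(r1, r2)]
                  for r1, r2 in zip(matmul(ALPHA_Z, BETA), matmul(BETA, ALPHA_Z))]
TRACE = sum(H[i][i] for i in range(4))

for row in H_SQUARED:
    print(row)
print("trace of H:", TRACE)
```

Because alpha and beta each square to the identity and anticommute, the cross terms cancel when H is squared, which is how the first-order equation reproduces the second-order Klein-Gordon relation.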

This equation proves the fundamental distinction between Dirac’s theory and Einstein’s special relativity. Einstein’s equation from special relativity is $E = mc^2$. The fact that $E = \pm mc^2$ proves the physical shallowness of special relativity which results from the lack of physical mechanism in special relativity.

‘… Without well-defined Hamiltonian, I don’t see how one can address the time evolution of wave functions in QFT.’ - Eugene Stefanovich,

You can do this very nicely by grasping the mathematical and physical correspondence of the time-dependent Schroedinger equation to Maxwell’s displacement current $i = dD/dt$. The former is just a quantized complex version of the latter. Treat the Hamiltonian as a regular quantity as Heaviside showed you can do for many operators. Then the solution to the time-dependent Schroedinger equation is: wavefunction at time $t$ after initial time = initial wavefunction × $\exp(-iHt/\hbar)$.

This is a general analogy to the exponential capacitor charging you get from displacement current. Maxwell’s displacement current is $i = dD/dt$ where $D$ is the product of electric field (V/m) and permittivity. There is electric current in conductors, caused by the variation in the electric field at the front of a logic step as it sweeps past the electrons (which can only drift at a net speed of up to about 1 m/s) at light speed. Because the current flowing into the first capacitor plate falls off exponentially as it charges up, there is radio transmission transversely like radio from an antenna (radio power is proportional to the rate of change of current in the antenna, which can be a capacitor plate). Hence the reality of displacement current is radio transmission. As each plate of a circuit capacitor acquires equal and opposite charge simultaneously, the radio transmission from each plate is an inversion of that from the other, so the superimposed signal strength away from the capacitor is zero at all times. Hence radio losslessly performs the role of induction which Maxwell attributed to aetherial displacement current. Schroedinger’s time-dependent equation says the product of the hamiltonian and wavefunction equals $i\hbar\,d\psi/dt$, which is a general analogy to Maxwell’s $i = dD/dt$. The Klein-Gordon equation, and also Dirac’s equation, are relativized forms of Schroedinger’s time-dependent equation.

The Standard Model

Quantum field theory describes the relativistic quantum oscillations of fields. The case of zero spin leads to the Klein-Gordon equation. However, everything tends to have some spin. Maxwell’s equations for electromagnetic propagating fields are compatible with an assumption of spin $h/(2\pi)$, hence the photon is a boson since it has integer spin in units of $h/(2\pi)$. Dirac’s equation models electrons and other particles that have only half unit spin, as known from quantum mechanics. These half-integer particles are called fermions and have antiparticles with opposite spin. Obviously you can easily make two electrons (neither the antiparticle of the other) have opposite spins, merely by having their spin axes pointing in opposite directions: one pointing up, one pointing down. (This is totally different from Dirac’s antimatter, where the opposite spin occurs while both matter and antimatter spin axes are pointed in the same direction. It enables the Pauli-pairing of adjacent electrons in the atom with opposite spins and makes most materials non-magnetic, since all electrons have a magnetic moment and everything would be potentially magnetic in the absence of the Pauli exclusion process.)

Two heavier, unstable (radioactive) relatives of the electron exist in nature: muons and tauons. They, together with the electron, are termed leptons. They have identical electric charge and spin to the electron, but larger masses, which enable the nature of matter to be understood, because muons and tauons decay by neutrino emission into electrons. Neutrinos and their anti-particles are involved in the weak force; they carry energy but not charge or detectable mass, and are fermions (they have half integer spin).

In addition to the three leptons (electron, muon, and tauon) there are six quarks in three families as shown in the table below. The existence of quarks is an experimental fact, empirically confirmed by the scattering patterns when high-energy electrons are fired at neutrons and protons. There is also some empirical evidence for the three colour charges of quarks in the fact that high-energy electron-positron collisions actually produce three times as many hadrons as predicted when assuming that there are no colour charges.

The 12 Fundamental Fermions (half integer spin particles) of the Standard Model

| Electric charge | +2e/3 (quarks) | 0 (neutrinos) | -e/3 (quarks) | -e (leptons) |
|---|---|---|---|---|
| Family 1 | 3 MeV u | 0 MeV $\nu_e$ | 5 MeV d | 0.511 MeV e |
| Family 2 | 1200 MeV c | 0 MeV $\nu_\mu$ | 100 MeV s | 105.7 MeV $\mu$ |
| Family 3 | 174,000 MeV t | 0 MeV $\nu_\tau$ | 4200 MeV b | 1777 MeV $\tau$ |

Notice that the major difference between the three families is the mass. The radioactivity of the muon ($\mu$) and tauon ($\tau$) can be attributed to these being high-energy vacuum states of the electron (e). The Standard Model in its present form cannot predict these masses. Family 1 is the vital set of fermions at low energy, and thus the one that matters so far as human life is concerned at present. Families 2 and 3 were important in the high-energy conditions existing within a fraction of a second of the Big Bang event that created the universe. Family 2 is also important in nuclear explosions such as supernovae, which produce penetrating cosmic radiation that irradiates us through the earth’s atmosphere, along with terrestrial natural radioactivity from uranium, potassium-40, etc. The t (top) quark in Family 3 was discovered as recently as 1995. There is strong evidence from energy conservation and other indications that there are only three families of fermions in nature.

The Heisenberg uncertainty principle is often used as an excuse to avoid worrying about the exact physical interpretation of the various symmetry structures of the Standard Model quantum field theory: the wave function is philosophically claimed to be in an indefinite state until a measurement is made. Although, as Thomas S. Love points out, this is a misinterpretation based on the switch-over of Schroedinger wave equations (time-dependent and time-independent) at the moment when a measurement is made on the system, it keeps the difficulties of the abstract field theory to a minimum. Ignoring the differences in the masses between the three families (which has a separate mechanism), there are four symmetry operations relating the Standard Model fermions listed in the table above:

- ‘flavour rotation’ is a symmetry which relates the three families (excluding their mass properties),

- ‘electric charge rotation’ would transform quarks into leptons and vice-versa within a given family,

- ‘colour rotation’ would change quarks between colour charges (red, blue, green), and

- ‘isospin rotation’ would switch the two quarks of a given family, or would switch the lepton and neutrino of a given family.

Hermann Weyl and Eugene Wigner discovered that Lie groups of complex symmetries represent quantum field theory.

Colour rotation leads directly to the Standard Model symmetry unitary group SU(3), i.e., rotations in imaginary space with 3 complex co-ordinates generated by 8 operations, the strong force gluons.

Isospin rotation leads directly to the symmetry unitary group SU(2), i.e., rotations in imaginary space with 2 complex co-ordinates generated by 3 operations, the Z, W+, and W- gauge bosons of the weak force.

In 1954, Chen Ning Yang and Robert Mills developed a theory of photon (spin-1 boson) mediator interactions in which the spin of the photon changes the quantum state of the matter emitting or receiving it via inducing a rotation in a Lie group symmetry. The amplitude for such emissions is forced, by an empirical coupling constant insertion, to give the measured Coulomb value for the electromagnetic interaction. Gerard ‘t Hooft and Martinus Veltman in 1970 argued that the Yang-Mills theory is the only model for Maxwell’s equations which is consistent with quantum mechanics and the empirically validated results of relativity. The photon Yang-Mills theory is U(1). Equivalent Yang-Mills interaction theories of the strong force SU(3) and the weak force SU(2) in conjunction with the U(1) force result in the symmetry group set SU(3) x SU(2) x U(1) which is the Standard Model. Here the SU(2) group must act only on left-handed spinning fermions, breaking the conservation of parity.

Mediators conveying forces are called gauge bosons: 8 types of gluons for the SU(3) strong force, 3 particles (Z, W+, W-) for the weak force, and 1 type of photon for electromagnetism. The strong and weak forces are empirically known to be very short-ranged, which implies they are mediated by massive bosons unlike the photon which is said to be lacking mass although really it carries momentum and has mass in a sense. The correct distinction is not concerned with ‘the photon having no rest mass’ (because it is never at rest anyway), but is concerned with velocity: the photon actually goes at light velocity while all the other gauge bosons travel slightly more slowly. Hence there is a total of 12 different gauge bosons. The problem with the Standard Model at this point is the absence of a model for particle masses: SU(3) x SU(2) x U(1) does not describe mass and so is an incomplete description of particle interactions. In addition, the exact mechanism which breaks the electroweak interaction symmetry SU(2) x U(1) at low energy is speculative.
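The counting of 12 gauge bosons follows from the number of generators of each group: SU(N) has N² - 1 generators and U(1) has one. A minimal sketch of that arithmetic (the helper names are hypothetical, introduced only for this illustration):

```python
def su_generators(n):
    """Number of generators of SU(n): n*n - 1."""
    return n * n - 1

def u_generators(n):
    """Number of generators of U(n): n*n."""
    return n * n

GLUONS = su_generators(3)        # strong force, SU(3)
WEAK_BOSONS = su_generators(2)   # weak force, SU(2): Z, W+, W-
PHOTONS = u_generators(1)        # electromagnetism, U(1)
TOTAL = GLUONS + WEAK_BOSONS + PHOTONS

print(GLUONS, WEAK_BOSONS, PHOTONS, TOTAL)  # prints: 8 3 1 12
```

The same formula shows why SU(3) gives 8 gluons rather than the 3 x 3 = 9 colour combinations: the "minus one" removes the combination that would correspond to U(3) rather than SU(3).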

If renormalization is kicked out by Yang-Mills, then the impressive results which depend on renormalisation (Lamb shift, magnetic moments of electron and muon) are lost. SU(2) and SU(3) are not renormalisable.

Gravity is of course required in order to describe mass, owing to Einstein’s equivalence principle that states that gravitational mass is identical to, and indistinguishable from, inertial mass. The existing mechanism for mass in the Standard Model is the speculative (non-empirical) Higgs field mechanism. Peter Higgs suggested that the vacuum contains a spin-0 boson field which at low energy breaks the electroweak symmetry between the photon and the weak force Z, W+ and W- gauge bosons, as well as causing all the fermion masses in the Standard Model. Higgs did not predict the masses of the fermions, only the existence of an unobserved Higgs boson. More recently, Rueda and Haisch showed that Casimir force type radiation in the vacuum (which is spin-1 radiation, not the Higgs’ spin-0 field) explains inertial and gravitational mass. The problem is that Rueda and Haisch could not make particle mass or force strength predictions, and did not explain how electroweak symmetry is broken at low energy. Rueda and Haisch have an incomplete model. The vacuum has more to it than simply radiation, and may be more complicated than the Higgs field. Certainly any physical mechanism capable of predicting particle masses and force strengths must be more sophisticated than the existing Higgs field speculations.

Many set out to convert science into a religion by drawing up doctrinal creeds. Consensus is vital in politics and also in teaching subjects in an orthodox way, teaching syllabuses, textbooks, etc., but this consensus should not be confused with science: it doesn’t matter how many people think the earth is flat or that the sun goes around it. Things are not determined in science by what people think or what they believe. Science is the one subject where facts are determined by evidence and even absolute proof: which is possible, contrary to Popper’s speculation, see Archimedes’ proof of the law of buoyancy for example. See the letter from Einstein to Popper that Popper reproduces in his book The Logic of Scientific Discovery. Popper falsely claimed to have disproved the idea that statistical uncertainty can emerge from a deterministic situation.

Einstein disproved Popper by the case of an electron revolving in a circle at constant speed; if you lack exact knowledge of the initial conditions and cannot see the electron, you can only statistically calculate the probability of finding it at any section of the circumference of the circle. Hence, statistical probabilities can emerge from completely deterministic systems, given merely the uncertainty about the initial conditions. This is one argument of many that Einstein (together with Schroedinger, de Broglie, Bohm, etc.) used to argue that determinism lies at the heart of quantum mechanics. However, all real interactions of three or more bodies in classically ‘deterministic’ mechanics are non-deterministic because of the perturbations introduced as chaos by more than two bodies. So there is no ultimate determinism in real-world many-body situations. What Einstein should have stated he was looking for is causality, not determinism.

The uncertainty principle - which is just modelling scattering-driven reactions - shows that the higher the mass-energy equivalent, the shorter the lifetime.

Quarks and other heavy particles last for a fraction of 1% of the time that electrons and positrons in the vacuum last before annihilation. The question is, why do you get virtual quarks around real quark cores for QCD and virtual electrons and positrons around real electron cores? It is probably a question of the energy density of the vacuum locally around a charge core. The higher energy density due to the fields around a quark will both create and attract more virtual quarks than an electron that has weaker fields.
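The scaling of lifetime with mass-energy can be put into rough numbers. The sketch below assumes the order-of-magnitude estimate Δt ≈ ħ/ΔE with ΔE = 2mc² for a virtual particle-antiparticle pair (the factor conventions vary, so only the ratios are meaningful) and takes the rest mass-energies from the fermion table earlier in this section.

```python
HBAR_MEV_S = 6.582119569e-22  # reduced Planck constant in MeV*s

# Rest mass-energies in MeV, copied from the fermion table in this section.
MASS_MEV = {"e": 0.511, "u": 3.0, "d": 5.0, "s": 100.0,
            "c": 1200.0, "b": 4200.0, "t": 174000.0}

def pair_lifetime(particle):
    """Rough lifetime in seconds of a virtual particle-antiparticle pair.

    Assumes delta_t ~ hbar / delta_E with delta_E = 2 * m * c^2.
    """
    return HBAR_MEV_S / (2.0 * MASS_MEV[particle])

def lifetime_ratio(particle, reference="e"):
    """Pair lifetime relative to that of the reference particle's pair."""
    return pair_lifetime(particle) / pair_lifetime(reference)

for name in ("s", "c", "b", "t"):
    print(name, pair_lifetime(name), lifetime_ratio(name))
```

With these table values a strange-quark pair lasts about 0.5% as long as an electron-positron pair, and the heavier quarks less still. Under the same estimate the light u and d quarks give noticeably larger ratios, so the "fraction of 1%" figure applies to the heavier families.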

In the case of a nucleon, neutron or proton, the quarks are close enough that the strong core charge, and not the shielded core charge (reduced by 137 times due to the polarised vacuum), is responsible for the inter-quark binding force. The strong force seems to be mediated by eight distinct types of gluon. (There is a significant anomaly in the QCD theory here because there are physically 9 types of green, red, blue gluons but you have to subtract one variety from the 9 to rule out a reaction which doesn't occur in reality.)

The gluon clouds around quarks are overlapped and modified by the polarised veils of virtual quarks, which is why it is just absurd to try to get a mathematical solution to QCD in the way you can for the simpler case of QED. In QED, the force mediators (virtual photons) are not affected by the polarised electron-positron shells around the real electron core, but in QCD there is an interaction between the gluon mediators and the virtual quarks.

You have to think also about electroweak force mediators, the W, Z and photons, and how they are distinguished from the strong force gluons. For the benefit of cats who read, W, Z and photons have more empirical validity than Heaviside's energy current theory speculation; the W and Z were discovered experimentally at CERN in 1983. At high energy, the W (massive and charged positive or negative), Z (massive and neutral), and photon all have symmetry and infinite range, but below the electroweak unification energy, the symmetry is broken by some kind of vacuum attenuation (Higgs field or other vacuum field miring/shielding) mechanism, so W and Z's have a very short range but photons have an infinite range. To get unification qualitatively as well as quantitatively you have to not only make the extremely high energy forces all identical in strength, but you need to consider qualitatively how the colored gluons are related to the W, Z, and photon of electroweak theory. The Standard Model particle interaction symmetry groupings are SU(3) x SU(2) x U(1), where U(1) describes the photon, SU(2) the W and Z of the weak force, hence SU(2) x U(1) is electroweak theory requiring a Higgs or other symmetry breaking mechanism to work, and SU(3) describes gluon mediated forces between three strong-force color charges of quarks, red, green and blue or whatever.

The problem with the gluons having 3 x 3 = 9 combinations but empirically only requiring 8 combinations, does indicate that the concept of the gluon is not the most solid part of the QCD theory. It is more likely that the gluon force is just the unshielded core charge force of any particle (hence unification at high energy where the polarised vacuum is breached by energetic collisions). (The graviton I've proved to be a fiction; it is the same as the gauge boson photon of electromagnetism: it does the work of both all attractive force and a force root N times stronger which is attractive between unlike charges and repulsive between like charges. N is the number of charges exchanging force mediator radiation. This proves why the main claim of string theory is entirely false. There is no separate graviton.)

The virtual quarks as you say contribute to the (1) mass and (2) magnetic moment of the nucleon. In the same way, virtual electrons increase the magnetic moment of the electron by 0.116% in QED. QCD just involves a larger degree of perturbation due to the aether than QED does.

Because the force mediators in QCD interact with the virtual quarks of the vacuum appreciably, the Feynman diagrams indicate a very large number of couplings with similar coupling strengths in the vacuum that are almost impossible to calculate. The only way to approach this problem is to dump perturbative field theory and build a heuristic semi-classical model which is amenable to computer solution. I.e., you can simulate quarks and polarised clouds of virtual charges surrounding them using a classical model adjusted to allow for the relative quantum mechanical lifetimes of the various virtual particles, etc. QCD has much larger perturbative effects due to the vacuum, with the vacuum in fact contributing most of the properties attributed to nucleons. In the case of a neutron, you would naively expect there to be zero magnetic moment because there is no net charge, but in fact there is a magnetic moment about two thirds the size of the proton's.

Ultimately what you have to do, having dealt with gravity (at least showing electrogravity to be a natural consequence of the big bang), is to understand the Standard Model. In order to do that, the particle masses and force coupling strengths must be predicted. In addition, you want to understand more about electroweak symmetry breaking, gluons, the Higgs field - if it actually exists as is postulated (it may be just a false model based on ignorant speculation, like graviton theory) - etc. I know string theory critic Dr Peter Woit (whose book Not Even Wrong - The Failure of String Theory and the Continuing Challenge to Unify the Laws of Physics will be published in London on 1 June and in the USA in September) claims in Quantum Field Theory and Representation Theory: A Sketch (2002), http://arxiv.org/abs/hep-th/0206135, that it is potentially possible to deal with electroweak symmetry without the usual stringy nonsense.

Hydrogen doesn’t behave as a superfluid. Helium is a superfluid at low temperatures because it has two spin ½ electrons in its outer shell that collectively behave as a spin 1 particle (boson) at low temperatures.

Fermions have half integer spin so hydrogen with a single electron will not form boson-like electron pairs. In molecular H2, the two electrons shared by the two protons don't have the opportunity to couple together to form a boson-like unit. It is the three body problem, two protons and a coupled pair of electrons, so perturbative effects continuously break up any boson-like behavior.

The same happens to helium itself when you increase temperature above superfluid temperature. The kinetic energy added breaks up the electron pairing that forms a kind of boson. So just the Pauli exclusion principle pairing remains at higher temperature.

You have to think of the low-temperature Bose-Einstein condensate as the simple case, and to note that at higher temperatures chaos breaks it up. Similarly, if you heat up a magnet you increase entropy, introducing chaos by allowing the domain order to be broken up by random jiggling of particles.

Newton's Opticks and Feynman's QED book both discuss how the reflection of a photon of light by a sheet of glass depends on the thickness, even though the photon is reflected as if from the front face. Newton as always fiddled an explanation based on metaphysical "fits of reflection/transmission" by light, claiming that light actually causes a wave of some sort (aetherial according to Newton) in the glass which travels with the light and controls reflection off the rear surface.

Actually Newton was wrong because you can measure the time it takes for the reflection from a really thick piece of glass, and that shows the light reflects from the front face. What is happening is that energy (gauge boson electromagnetic energy) is going at light speed in all directions within the glass normally, and is affected by the vibration of the crystalline lattice. The normal internal "resonant frequency" depends on the exact thickness of the glass, and this in turn determines the probability that a photon hitting the front face is reflected or transmitted. It is purely causal.

Electrons have quantized charge and therefore electric field - hardly a description of the "light wave" we can physically experiment with and measure as 1 metre (macroscopic) wavelength radio waves. The peak electric field of radio is directly proportional to the orthogonal acceleration of the electrons which emit it. There is no evidence that the vacuum charges travel at light speed in a straight line. An electron is a trapped negative electric field. To go at light speed its spin would have to be annihilated by a positron to create gamma rays. Conservation of angular momentum forbids an electron from going at light speed as the spin is light speed and it can't maintain angular momentum without being supplied with increasing energy as the overall propagation speed rises. Linear momentum and angular momentum are totally separate. It is impossible to have an electron and positron pair going at light speed, because the real spin angular momentum would be zero, because the total internal speed can't exceed c, and it is exactly c if electrons are electromagnetic energy in origin (hence the vector sum of propagation and spin speeds - Pythagoras' sum of squares of speeds law if the propagation and spin vectors are orthogonal - implies that the spin slows down from c towards 0 as electron total propagation velocity increases from 0 towards c). The electron would therefore have to be supplied with increasing mass-energy to conserve angular momentum as it is accelerated towards c.

People like Josephson take the soft quantum mechanical approach of ignoring spin, assuming it is not real. (This is usually defended by confusing the switch over from the time-dependent to time-independent versions of the Schroedinger equation when a measurement is taken, which defends a metaphysical requirement for the spin to remain indeterminate until the instant of being measured. However, Love of California State Uni proves that this is a mathematical confusion between the two versions of Schroedinger's equation and is not a real physical phenomenon. It is very important to be specific where the errors in modern physics are, because most of it is empirical data from nuclear physics. Einstein's special relativity isn't worshipped for enormous content, but for fitting the facts. The poor presentation of it as being full of amazing content is crazy. It is respected by those who understand it because it has no content and yet produces empirically verifiable formulae for local mass variation with velocity, local time - or rather uniform motion - rate variation, $E = mc^2$, etc. Popper's analysis of everything is totally bogus; he defends special relativity as being a falsifiable theory which it isn't as it was based on empirical observations; special relativity is only a crime for not containing a mechanism and for not admitting the change in philosophy. Similarly, quantum theory is correct so far as the equations are empirically defensible to a large degree of accuracy, but it is a crime to use this empirical fit to claim that mechanisms or causality don't exist.)

The photon certainly has electromagnetic energy with separated negative and positive electric fields. Question is, is the field the cause of charge or vice-versa? Catt says the field is the primitive. I don't like Catt's arguments for the most part (political trash) but he has some science mixed in there too, or at least Dr Walton (Catt's co-author) does. Fire 2 TEM (transverse electromagnetic) pulses guided by two conductors through one another from opposite directions, and there is no measurable resistance while the pulses overlap. Electric current ceases. The primitive is the electromagnetic field.

To model a photon out of an electron and a positron going at light velocity is false for the reasons I've given. If you are going to say the electron-positron pairs in the vacuum don't propagate with light speed, you are more sensible, as that will explain why light doesn't scatter around within the vacuum due to the charges in it hitting other vacuum charges, etc. But then you are back to a model in which light moves like transverse (gravity) surface waves in water, but with the electron-positron ether as the sea. You then need to explain why light waves don't disperse. In a photon in the vacuum, the peak amplitude is always the same, no matter how far it goes from its source. Water waves, however, lose amplitude as they spread. Any pressure wave that propagates (sound, sonar, water waves) has an excess pressure and a rarefaction (under-normal pressure) component. If you are going to claim instead that a displacement current in the aether is working with Faraday's law to allow light propagation, you need to be scientific and give all the details, otherwise you are just repeating what Maxwell said - with your own gimmicks about dipoles and double helix speculation - 140 years ago.

Maxwell's classical model of a light wave is wrong for several reasons.

Maxwell said light is positive and negative electric field, one behind the other (variation from positive to negative electric field occurring along the direction of propagation). This is a longitudinal wave, although it was claimed to be a transverse wave because Maxwell's diagram, in TEM (Treatise on Electricity & Magnetism) plots strength of E field and B field on axes transverse to the direction of propagation. However, this is just a graph which does not correspond to 3 dimensional space, just to fields in 1 dimensional space, the x direction. The axes of Maxwell's light wave graph are x direction, E field strength, B field strength. If Maxwell had drawn axes x, y, z then he could claim to have shown a transverse wave. But he didn't, he had axes x, E, B: one dimensional with two field amplitude plots.

Heaviside's TEM wave guided by two conductors has the negative and positive electric fields one beside the other, i.e., orthogonal to the direction of propagation. This makes more sense to me as a model for a light wave: Maxwell's idea of having the different electric fields of the photon (positive and negative) one behind the other is bunk, because both are moving forward at light speed in the x direction and so cannot influence one another (without exceeding light speed).

The major error of Catt is confusion over charge and electric current. He variously uses and dismisses these concepts. The facts are:

(1) An old style CRT television throws electrons at the screen. You can feel the charge because the hairs on the back of your hand are raised by the electric field. Put a Catt near a television and the fur will stand on end ...

(2) Similarly, all valves (whoops, vacuum tubes for US readers) work by electron flow from hot cathode to anode (electric current goes the other way to electron current due to Franklin's convention).

Where the mainstream is wrong is in not explaining why the current flow occurs. Take the Catt Question diagram. Ignore for the present the ramp part (which I deal with here: http://feynman137.tripod.com/ ).

How does an electron current flow in the flat-topped part (behind the step front or rather ramp) of the logic pulse where the voltage is the same value along the conductors?

Ivor Catt indicates in the middle of his book "Electromagnetism 1" that magnetic field interaction between the two opposite currents in the conductors allow the current.

This seems to be vital: it is not electric field gradient along the conductor which causes the current for situations behind the logic step front, but magnetic field! In a single wire you can't have a steady current flow because the magnetic field (self inductance) is infinite, but for a pair of nearby conductors carrying equal currents in opposite directions, the resultant curls of magnetic fields from each conductor at long distances cancel out perfectly so the inductance is then limited, allowing transmission of current.

To summarise: at the rise or ramp of the logic step, electric field (or voltage gradient) along the conductor (from 0 volts to the flat peak of v volts over distance x = ct where t is the rise-time) occurs. This electric field is E = v/x = v/(ct) volts/metre, which induces an electric current by accelerating passive 2s conduction electrons in the conductors to a peak net drift velocity (they are normally whizzing around at random at a speed of c/137). Once the flat v volts is attained, the electric field is no longer able to accelerate electrons to overcome resistance, but the magnetic field from the opposite conductor induces a current flow as per Catt "Electromagnetism 1" 1994.
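To give a feel for the size of the field on the ramp, the relation E = v/x = v/(ct) can be evaluated for a sample logic step. The 5 volt amplitude and 1 nanosecond rise time below are assumed purely for illustration and do not come from the text.

```python
C_LIGHT = 299_792_458.0  # speed of light in vacuum, m/s

def ramp_width(rise_time):
    """Spatial width x = c * t of the rising edge, in metres."""
    return C_LIGHT * rise_time

def ramp_field(voltage, rise_time):
    """Electric field E = v / (c * t) along the conductor, in volts/metre."""
    return voltage / ramp_width(rise_time)

V_STEP = 5.0       # assumed step voltage in volts
T_RISE = 1e-9      # assumed rise time in seconds

print("ramp width (m):", ramp_width(T_RISE))
print("field (V/m)   :", ramp_field(V_STEP, T_RISE))
```

For these values the ramp is roughly 0.3 m long and the field along it is under 20 V/m. A faster rise time shortens the ramp and raises the field in proportion. This sketch uses the vacuum value of c; a real cable's dielectric would lower the propagation speed.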