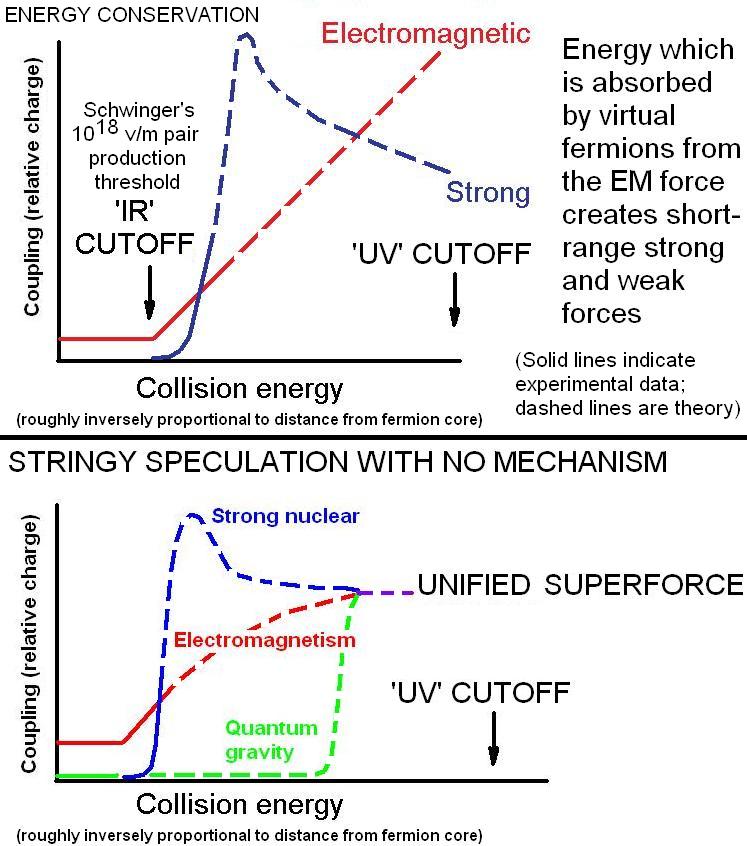

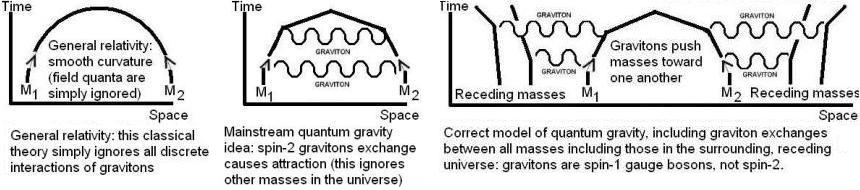

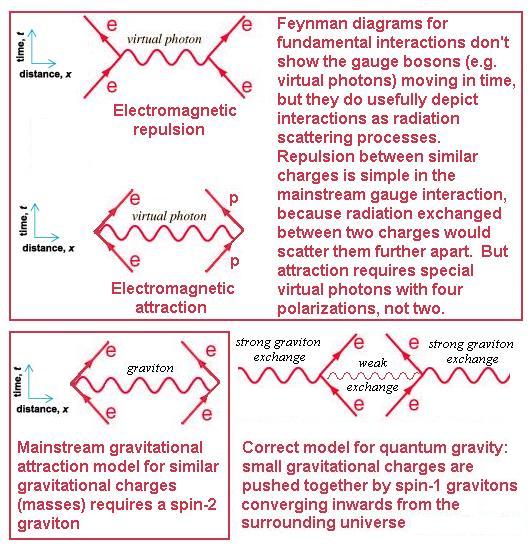

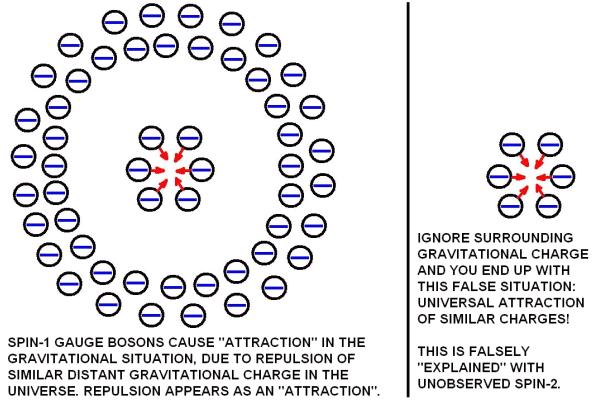

String 'theory' (abject uncheckable speculation) combines a non-experimentally justifiable speculation about forces unifying at the Planck scale with another non-experimentally justifiable speculation: that gravity is mediated by spin-2 particles which are exchanged only between the two masses in your calculation, and which somehow avoid being exchanged with the far bigger masses in the surrounding universe. When you include in your path integral the fact that exchange gravitons coming from distant masses converge inwards towards an apple and the earth, it turns out that this exchange radiation with distant masses actually predominates over the local exchange and pushes the apple down to the earth, so it is easily proved that gravitons are spin-1, not spin-2. The proof below also makes checkable predictions and tells us exactly how quantum gravity fits into the electroweak symmetry of the Standard Model alongside electromagnetism, the other long-range force at low energy, thus altering the usual interpretation of the Standard Model symmetry groups and radically changing the nature of electroweak symmetry breaking from the usual, poorly predictive mainstream Higgs field.

The entire mainstream modern physics bandwagon has ignored Feynman's case for simplicity and for understanding what is known for sure, and has gone off in the other direction (magical, unexplainable religion), building a 10-dimensional superstring model whose conveniently 'explained' Calabi-Yau compactification of the 6 unseen dimensions can take 10^500 different forms (conveniently explained away as a 'landscape' of unobservable parallel universes, from which ours is picked out using the anthropic principle: because we exist, the values of the fundamental parameters we observe must be such that they allow our existence).

Professor Richard P. Feynman’s paper ‘Space-Time Approach to Non-Relativistic Quantum Mechanics’, Reviews of Modern Physics, volume 20, page 367 (1948), makes it clear that his path integrals are a censored explicit reformulation of quantum mechanics, not merely an extension to sweep away infinities in quantum field theory!

Richard P. Feynman explains in his book, QED, Penguin, 1990, pp. 55-6, and 84:

'I would like to put the uncertainty principle in its historical place: when the revolutionary ideas of quantum physics were first coming out, people still tried to understand them in terms of old-fashioned ideas ... But at a certain point the old fashioned ideas would begin to fail, so a warning was developed that said, in effect, "Your old-fashioned ideas are no damn good when ...". If you get rid of all the old-fashioned ideas and instead use the ideas that I’m explaining in these lectures – adding arrows [arrows = phase amplitudes in the path integral] for all the ways an event can happen – there is no need for an uncertainty principle! ... on a small scale, such as inside an atom, the space is so small that there is no main path, no "orbit"; there are all sorts of ways the electron could go, each with an amplitude. The phenomenon of interference [by field quanta] becomes very important ...'

Take the case of simple exponential decay: the mathematical exponential decay law predicts that the dose rate never reaches zero, so the effective dose rate for exposure to an exponentially decaying source needs clarification. Taking an infinite exposure time will obviously underestimate the dose rate regardless of the total dose, because any finite dose divided by an infinite exposure time gives a false dose rate of zero. Part of the problem here is that the exponential decay curve is false: it is based on calculus for continuous variations, and doesn't apply to radioactive decay, which isn't continuous but is a discrete phenomenon. This mathematical failure undermines the interpretation of real events in quantum mechanics and quantum field theory, because discrete quantized fields are being falsely approximated by the calculus, which ignores the discontinuous (lumpy) changes which actually occur in quantum field phenomena. For example, as Dr Thomas Love of California State University points out, the 'wavefunction collapse' in quantum mechanics when a radioactive decay occurs is a mathematical discontinuity due to the use of continuously varying differential field equations to represent a discrete (discontinuous) transition!
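A minimal Python sketch of the dose-rate averaging point, assuming a source whose dose rate falls as R(t) = R0·exp(-λt) with λ = ln 2 / half-life (the names `r0`, `half_life`, `accumulated_dose` and `mean_dose_rate` are illustrative, not from the post). The accumulated dose D(T) = (R0/λ)(1 - exp(-λT)) tends to the finite limit R0/λ, so the averaged rate D(T)/T keeps falling towards zero as the exposure time T grows:

```python
import math

def accumulated_dose(r0: float, half_life: float, t: float) -> float:
    """Dose received from time 0 to t for a dose rate r0 * exp(-lam * t)."""
    lam = math.log(2) / half_life
    return r0 / lam * (1.0 - math.exp(-lam * t))

def mean_dose_rate(r0: float, half_life: float, t: float) -> float:
    """Total dose divided by the exposure time t: the 'effective' average rate."""
    return accumulated_dose(r0, half_life, t) / t

if __name__ == "__main__":
    r0, half_life = 1.0, 1.0  # arbitrary units, e.g. dose per hour and hours
    for t in (1.0, 10.0, 100.0, 1e6):
        print(f"T = {t:>9g}: dose = {accumulated_dose(r0, half_life, t):.4f}, "
              f"mean rate = {mean_dose_rate(r0, half_life, t):.3e}")
```

The dose saturates near R0/λ (about 1.443 in these units) while the mean rate keeps shrinking, which is why the exposure time used to define an effective dose rate has to be stated explicitly.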

[‘The quantum collapse [in the mainstream interpretation of quantum mechanics, where a wavefunction collapse occurs whenever a measurement of a particle is made] occurs when we model the wave moving according to Schroedinger (time-dependent) and then, suddenly at the time of interaction we require it to be in an eigenstate and hence to also be a solution of Schroedinger (time-independent). The collapse of the wave function is due to a discontinuity in the equations used to model the physics, it is not inherent in the physics.’ - Dr Thomas Love, Departments of Physics and Mathematics, California State University, ‘Towards an Einsteinian Quantum Theory’, preprint emailed to me.]

Alpha radioactive decay occurs when an alpha particle undergoes quantum tunnelling to escape from the nucleus through a 'field barrier' which should confine it perfectly, according to classical physics. But as Professor Bridgman explains, the classical field law falsely predicts a definite sharp limit on the distance of approach of charged particles, which is not observed in reality (in the real world, there is a more gradual decrease). The explanation for alpha decay and 'quantum tunnelling' is not that the mathematical laws are perfect and nature is 'magical and beyond understanding', but simply that the differential field law is just a statistical approximation and wrong at the fundamental level: electromagnetic forces are not continuous and steady on small scales, but are due to chaotic, random exchange radiation, which only averages out and approaches the mathematical 'law' over long distances or long times. Forces are actually produced by lots of little particles, quanta, being exchanged between charges.

On large scales, the effect of all these little particles averages out to appear like Coulomb's simple law, just as on large scales air pressure can appear steady when in fact on small scales it is a random bombardment of air molecules, which causes Brownian motion. On small scales, such as the distance between an alpha particle and other particles in the nucleus, the forces are not steady but fluctuate as the field quanta are randomly and chaotically exchanged between the nucleons. Sometimes the force is stronger and sometimes weaker than the potential predicted by the mathematical law. When the field confining the alpha particle is weaker, the alpha particle may be able to escape, so there is no magic to 'quantum tunnelling'. Therefore, radioactive decay only follows the smooth exponential decay law as a statistical approximation for large decay rates. In general the exponential decay law is false: for a nuclide of short half-life, all the radioactive atoms decay after a finite time, so the prediction of that 'law' that radioactivity continues forever is false.
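The contrast between a finite population of atoms and the continuous law can be sketched with a simple Monte Carlo model, assuming each surviving atom decays independently with a fixed probability `p_per_step` in each time step (a hypothetical parameter; this per-step model is an illustration, not the author's calculation). The continuous approximation N0(1 - p)^t never reaches zero, but the simulated count of a finite sample does reach zero after a finite number of steps:

```python
import random

def simulate_decay(n_atoms: int, p_per_step: float, seed: int = 0) -> list[int]:
    """Return the number of surviving atoms after each step, until none remain."""
    rng = random.Random(seed)
    survivors = [n_atoms]
    n = n_atoms
    while n > 0:
        # Each surviving atom decays in this step with probability p_per_step.
        decays = sum(1 for _ in range(n) if rng.random() < p_per_step)
        n -= decays
        survivors.append(n)
    return survivors

def continuous_law(n_atoms: int, p_per_step: float, step: int) -> float:
    """Smooth approximation N0 * (1 - p)^t, which is never exactly zero."""
    return n_atoms * (1.0 - p_per_step) ** step

if __name__ == "__main__":
    counts = simulate_decay(1000, 0.1, seed=42)
    last = len(counts) - 1
    print(f"last atom decayed at step {last}")
    print(f"continuous law still predicts {continuous_law(1000, 0.1, last):.4f} atoms then")
```

For large samples the simulated counts track the smooth curve closely at early times; the two only part company in the tail, where a handful of discrete atoms remain.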

There is a stunning lesson from human 'groupthink' arrogance today that Feynman's fact-based physics is still censored out by mainstream string theory, despite the success of path integrals based on this field quanta interference mechanism!

Regarding string theory, Feynman said in 1988:

‘... I do feel strongly that this is nonsense! ... I think all this superstring stuff is crazy and is in the wrong direction. ... I don’t like it that they’re not calculating anything. I don’t like that they don’t check their ideas. I don’t like that for anything that disagrees with an experiment, they cook up an explanation ... All these numbers [particle masses, etc.] ... have no explanations in these string theories - absolutely none!’

– Richard P. Feynman, in Davies & Brown, ‘Superstrings’ 1988, at pages 194-195.

Regarding reality, he said:

‘It always bothers me that, according to the laws as we understand them today, it takes a computing machine an infinite number of logical operations to figure out what goes on in no matter how tiny a region of space, and no matter how tiny a region of time. How can all that be going on in that tiny space? Why should it take an infinite amount of logic to figure out what one tiny piece of spacetime is going to do? So I have often made the hypothesis that ultimately physics will not require a mathematical statement, that in the end the machinery will be revealed, and the laws will turn out to be simple, like the chequer board with all its apparent complexities.’

- R. P. Feynman, The Character of Physical Law, November 1964 Cornell Lectures, broadcast and published in 1965 by BBC, pp. 57-8.

Mathematical physicist Dr Peter Woit of the Columbia University mathematics department has written a blog post reviewing a new book about Dirac, the discoverer of the Dirac equation, a relativistic wave equation which lies at the heart of quantum field theory (the Schroedinger equation of quantum mechanics is a good approximation for some low-energy physics, but is not valid for relativistic situations, i.e. it doesn't ensure the field moves with the velocity of light while conserving mass-energy, so it is not a true basis for quantum field descriptions; additionally, in quantum field theory but not in the mathematics of quantum mechanics, pair production occurs, i.e. "loops" in spacetime on Feynman diagrams, caused by particles and antiparticles briefly gaining energy to free themselves from the normally unobservable ground state of the vacuum of space, or Dirac sea, before they annihilate and disappear again, analogous to steam temporarily evaporating from the ocean to create visible clouds which condense into droplets of rain and disappear again, returning to the sea), http://www.math.columbia.edu/~woit/wordpress/?p=1904, where he writes:

‘As it become harder and harder to get experimental data relevant to the questions we want to answer, the guiding principle of pursuing mathematical beauty becomes more important. It’s quite unfortunate that this kind of pursuit is becoming discredited by string theory, with its claims of seeing “mathematical beauty” when what is really there is mathematical ugliness and scientific failure.’

In 1930 Dirac wrote:

‘The only object of theoretical physics is to calculate results that can be compared with experiment.’

- Paul A. M. Dirac, The Principles of Quantum Mechanics, 1930, page 7.

But his view shifted slightly in his later years, and on 7 May 1963 Dirac actually told Thomas Kuhn during an interview:

‘It is more important to have beauty in one’s equations, than to have them fit experiment.’

- Dirac, ‘The Evolution of the Physicist’s Picture of Nature’, Scientific American, May 1963, 208, 47.

Other guys stuck to their guns:

‘… nature has a simplicity and therefore a great beauty.’

- Richard P. Feynman (The Character of Physical Law, p. 173)

‘The beauty in the laws of physics is the fantastic simplicity that they have … What is the ultimate mathematical machinery behind it all? That’s surely the most beautiful of all.’

- John A. Wheeler (quoted by Paul Buckley and F. David Peat, Glimpsing Reality, 1971, p. 60)

‘If nature leads us to mathematical forms of great simplicity and beauty … we cannot help thinking they are true, that they reveal a genuine feature of nature.’

– Werner Heisenberg (http://www.ias.ac.in/jarch/jaa/5/3-11.pdf, page 2 here)

‘A theory is the more impressive the greater the simplicity of its premises is. The more different kinds of things it relates, and the more extended is its area of applicability.’

– Albert Einstein (in Paul Arthur Schilpp’s Albert Einstein: Autobiographical Notes, p. 31)

‘My work always tried to unite the true with the beautiful; but when I had to choose one or the other, I usually chose the beautiful.’

- Hermann Weyl (http://www.ias.ac.in/jarch/jaa/5/3-11.pdf, page 2 here)

Now, in a new blog post, 'The Only Game in Town', http://www.math.columbia.edu/~woit/wordpress/?p=1917, Dr Woit quotes Nobel Laureate Steven Weinberg, author of The First Three Minutes, depressingly continuing to hype string theory to New Scientist using the poorest logic imaginable:

“It has the best chance of anything we know to be right,” Weinberg says of string theory. “There’s an old joke about a gambler playing a game of poker,” he adds. “His friend says, ‘Don’t you know this game is crooked, and you are bound to lose?’ The gambler says, ‘Yes, but what can I do, it’s the only game in town.’ We don’t know if we are bound to lose, but even if we suspect we may, it is the only game in town.”

Dr Woit then writes in response to a comment by Dr Thomas S. Love of California State University, asking Woit if he plans to write a sequel to his book Not Even Wrong:

'Someday I would like to write a technical book on representation theory, QM and QFT, but that project is also a long ways off right now.'

- Peter Woit, May 5, 2009 at 12:48 pm, http://www.math.columbia.edu/~woit/wordpress/?p=1917&cpage=1#comment-48177

Well, I need such a book now, but I'm having to make do with the information in currently available lecture notes and published quantum field theory textbooks.

It may be interesting to compare the post below to the physically very impoverished situation five years ago when I wrote http://cdsweb.cern.ch/record/706468?ln=en which contains the basic ideas, but with various trivial errors and without the rigorous proofs, predictions, applications and diagrams which have since been developed. Note that Greek symbols in the text display in Internet Explorer with symbol fonts loaded but do not display in Firefox:

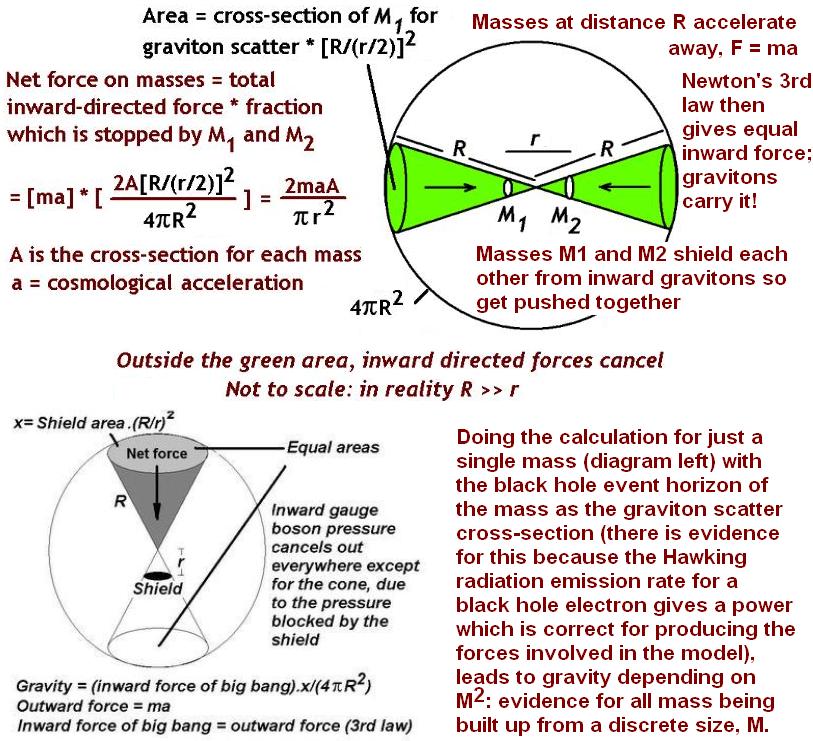

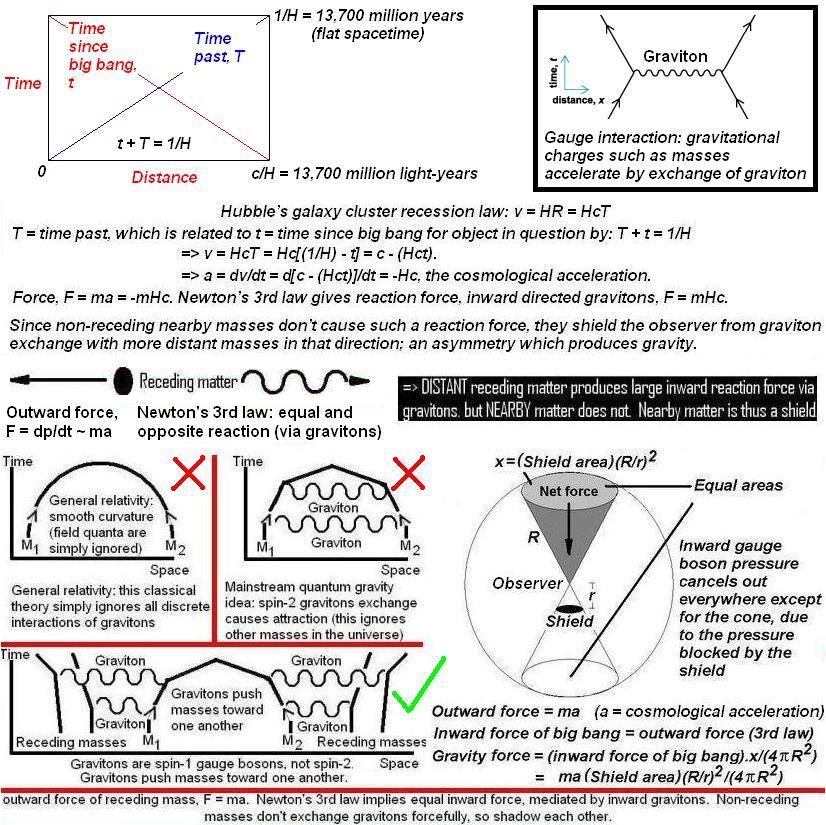

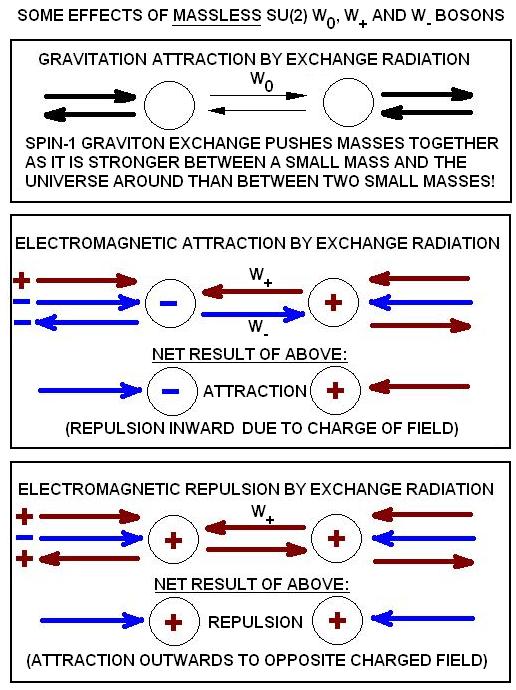

1. Masses that attract due to gravity are in fact surrounded by an isotropic distribution of distant receding masses in all directions (clusters of galaxies), so they must exchange gravitons with those distant masses as well as with nearby masses (a fact ignored by the flawed mainstream path integral extensions of the Fierz-Pauli argument that gravitons must have spin-2 in order for 'like' gravitational charges to attract rather than repel, as of course happens with like electric charges; see for instance pages 33-34 of Zee's 2003 quantum field theory textbook).

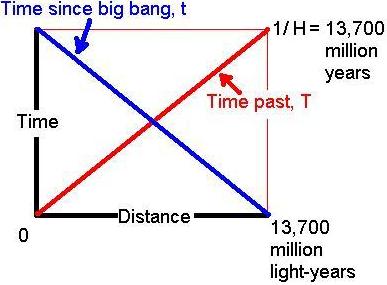

2. Because the isotropically distributed distant masses are receding with a cosmological acceleration, they have a radial outward force, which by Newton's 2nd law is F = ma, and which by Newton's 3rd law implies an equal inward-directed reaction force, F = -ma.

3. The inward-directed force, from the possibilities known in the Standard Model of particle physics and quantum gravity considerations, is carried by gravitons:

R in the diagram above is the distance to distant receding galaxy clusters of mass m. The distribution of matter around us in the universe can simply be treated as a series of shells of receding mass at progressively larger distances R, and the sum of contributions from all the shells gives the total inward graviton-delivered force on masses.

This works for spin-1 gravitons, because:

a. the gravitons coming to you from distant masses (ignored completely by speculative spin-2 graviton hype) are radially converging upon you (not diverging), and

b. the distant masses are immense in size (clusters of galaxies) compared to local masses like the planet earth, the sun or the galaxy.

Consequently, the flux from distant masses is way, way stronger than from nearby masses; so the path integral of all spin-1 gravitons from distant masses reduces to the simple geometry illustrated above and will cause 'attraction' or push you down to the earth by shadowing (the repulsion between two nearby masses from spin-1 graviton exchange is trivial compared to the force pushing them together).
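The bookkeeping in steps 1 to 3 can be sketched in Python under some loudly stated assumptions: the recession acceleration is taken as a ≈ cH0 with H0 = 70 km/s/Mpc (an assumed value, not given in the post); each shell is supplied as a hypothetical (mass, acceleration) pair; and the net push on a mass is the fraction σ/(4πr²) of the isotropic inward force that a nearby shielding mass of cross-section σ at distance r blocks. It shows only the structure of the argument (Newton's 2nd and 3rd laws, a sum over shells, then line-of-sight shadowing giving an inverse-square fall-off), not the post's numerical result:

```python
import math

C = 2.998e8            # speed of light, m/s
MPC_IN_M = 3.0857e22   # one megaparsec in metres

def cosmological_acceleration(h0_km_s_mpc: float) -> float:
    """Assumed acceleration scale a = c * H0, with H0 given in km/s/Mpc."""
    return C * h0_km_s_mpc * 1e3 / MPC_IN_M

def inward_reaction_force(shells: list[tuple[float, float]]) -> float:
    """Sum the outward forces F = m * a of receding shells (Newton's 2nd law);
    by Newton's 3rd law the inward reaction has the same magnitude."""
    return sum(mass * accel for mass, accel in shells)

def shadowed_force(total_inward: float, cross_section: float, r: float) -> float:
    """Unbalanced push from the fraction of an isotropic inward flux that is
    blocked by a shield of cross-section `cross_section` (m^2) at distance r (m)."""
    return total_inward * cross_section / (4.0 * math.pi * r ** 2)

if __name__ == "__main__":
    a = cosmological_acceleration(70.0)
    print(f"assumed a = c*H0 = {a:.2e} m/s^2")
    # Hypothetical shells: (mass in kg, acceleration in m/s^2); illustrative only.
    shells = [(1e50, a), (2e50, a), (3e50, a)]
    f_in = inward_reaction_force(shells)
    for r in (1.0, 2.0, 4.0):
        print(f"r = {r} m: shadowed force = {shadowed_force(f_in, 1e-50, r):.3e} N")
```

Whatever values are fed in, doubling r cuts the shadowed force by a factor of four, which is the inverse-square geometry the argument relies on.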

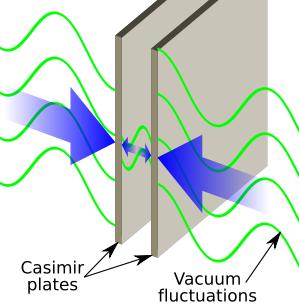

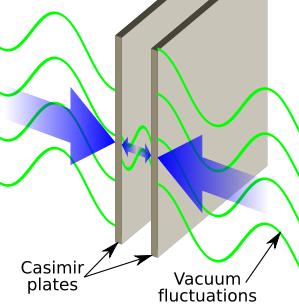

Above: an analogous effect, well demonstrated experimentally (the experimental data now matches the theory to within 15%), is the Casimir force, where the virtual quantum field bosons of the vacuum push two flat metal surfaces together ('attractive' force) if they get close enough. The metal plates 'attract' because their reflective surfaces exclude virtual photons of wavelengths longer than the separation distance between the plates (the same happens with a waveguide, a metal box-like tube used to pipe microwaves from the source magnetron to the disc antenna; a waveguide only carries wavelengths shorter than a cutoff set by the inside dimensions of the metal box, twice the broad width for the lowest mode of a rectangular guide!). The exclusion of long wavelengths of virtual radiation from the space between the metal plates in the Casimir effect reduces the energy density between the plates compared with that outside, so that, just like external air pressure collapsing a slightly evacuated petrol can in the classic high school demonstration (where you put some water in a can and heat it so the can fills with steam with the cap off, then put the cap on and allow the can to cool so that the water vapour in it condenses, leaving a partial vacuum in the can), the Casimir force pushes the plates toward one another. From this particular example of a virtual-particle-mediated force, note that virtual particles do have specific wavelengths! This is especially important for redshift considerations when force-causing virtual particles (gauge bosons) are exchanged between receding matter in the expanding universe. 'String theorists' like Dr Lubos Motl have ignorantly stated to me that virtual particles can't be redshifted, claiming that they don't have any particular frequency or wavelength. [Illustration credit: Wikipedia.]
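For scale, the textbook result for ideal, perfectly conducting parallel plates at zero temperature gives a Casimir pressure P = π²ħc/(240 d⁴), where d is the plate separation. The short sketch below simply evaluates that idealised formula (the function name `casimir_pressure` is illustrative); real plates with finite conductivity, roughness and non-zero temperature deviate from it:

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J s
C = 2.99792458e8        # speed of light, m/s

def casimir_pressure(separation_m: float) -> float:
    """Ideal parallel-plate Casimir pressure (Pa), pushing the plates together."""
    return math.pi ** 2 * HBAR * C / (240.0 * separation_m ** 4)

if __name__ == "__main__":
    for d in (1e-6, 1e-7):
        print(f"d = {d:.0e} m: P = {casimir_pressure(d):.3e} Pa")
```

Because P scales as 1/d⁴, the force is negligible at everyday separations and only becomes measurable when the gap is small: about 1.3 mPa at 1 μm rises to about 13 Pa at 100 nm.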

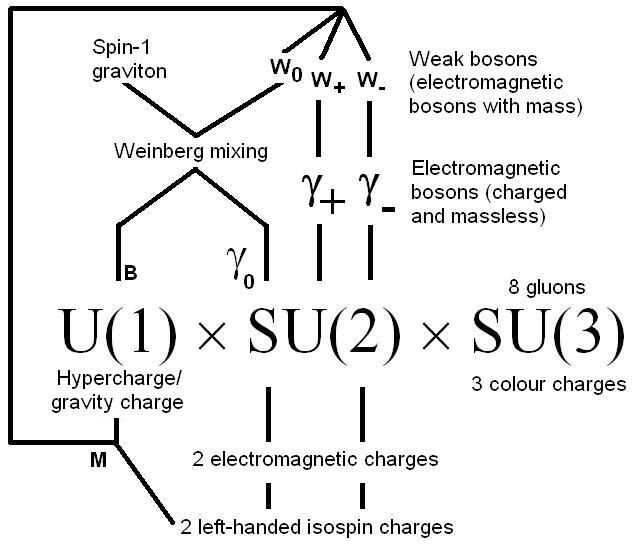

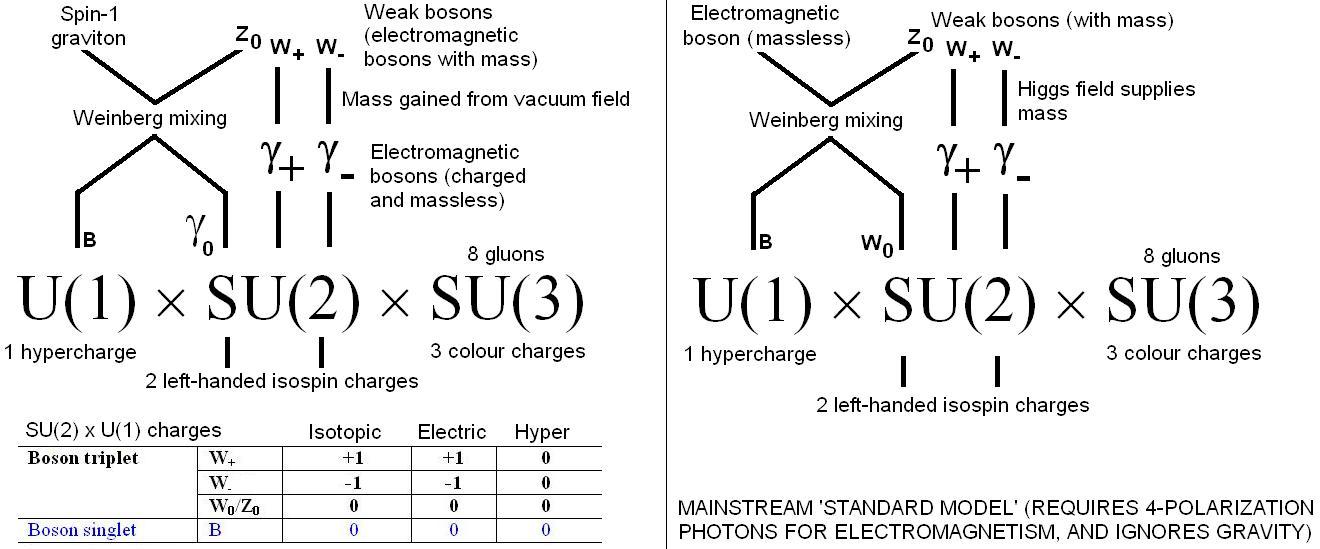

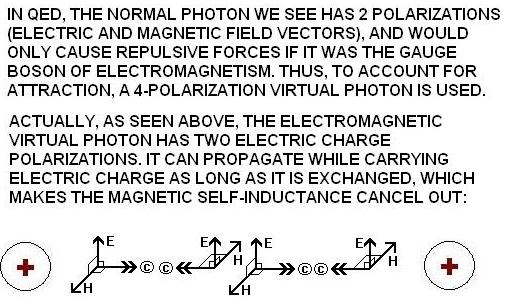


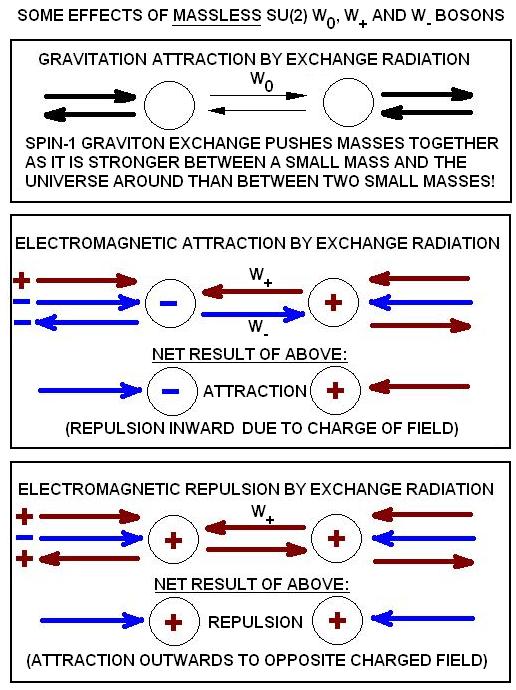

Above: incorporation of quantum gravity, mass (without Higgs field) and charged electromagnetic gauge bosons into the Standard Model. Normally U(1) is weak hypercharge. The key point about the new model is that (as we will prove in detail below) the Yang-Mills equation applies to electromagnetism if the gauge bosons are charged, but is restricted to Maxwellian force interactions for massless charged gauge bosons due to the magnetic self-inductance of such massless charges. Magnetic self-inductance requires that charged massless gauge bosons must simultaneously be transferring equal charge from charge A to charge B as from B to A. In other words, only an exact equilibrium of exchanged charge per second in both directions between two charges is permitted, which prevents the Yang-Mills equation from changing the charge of a fermion: it is restricted to Maxwellian field behaviour and can only change the motion (kinetic energy) of a fermion. In other words, it can deliver net energy but not net charge. So it prevents the massless charged gauge bosons from transferring any net charge (they can only propagate in equal measure in both directions between charges so that the curling magnetic fields cancel, which is an equilibrium that can deliver field energy but cannot deliver field charge). Therefore, the Yang-Mills equation reduces for massless charged gauge bosons to the Maxwell equations, as we will prove later on in this blog post.
The advantages of this model are many for the Standard Model, because it means that U(1) now does the job of quantum gravity and the 'Higgs mechanism', which are both speculative (untested) additions to the Standard Model, but does it in a better way, leading to predictions that can be checked experimentally.

In the case of electromagnetism, like charges repel due to spin-1 virtual photon exchange, because the distant matter in the universe is electrically neutral (equal amounts of charge of positive and negative sign at great distances cancel). This is not the case for quantum gravity, because the distant masses have the same gravitational charge sign, say positive, as nearby masses (there is only one observed sign for all gravitational charges!). Hence, nearby like gravitational charges are pushed together by gravitons from distant masses, while nearby like electric charges are pushed apart by exchanging spin-1 photons with one another, but not significantly by virtual photon exchanges with distant matter (due to that matter being electrically neutral).

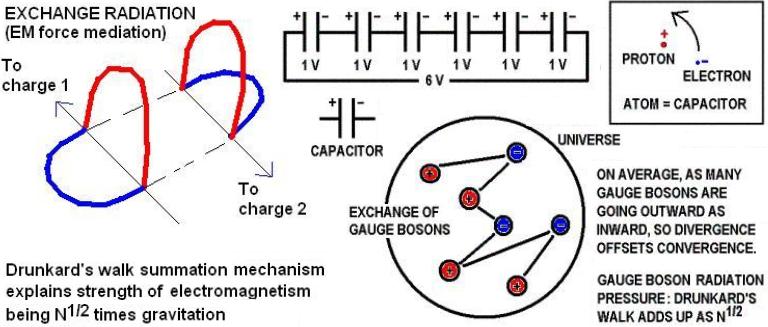

The new model has particular advantages for electromagnetism and leads to quantitative predictions of the masses of particles and of the force coupling strengths for the various interactions. E.g., as shown in previous posts, a random walk of charged electromagnetic gauge bosons between similar charges randomly scattered around the universe gives a path integral with a total force coupling that is 10^40 times that from quantum gravity, and so it predicts quantitatively how much stronger electromagnetism is than gravity.

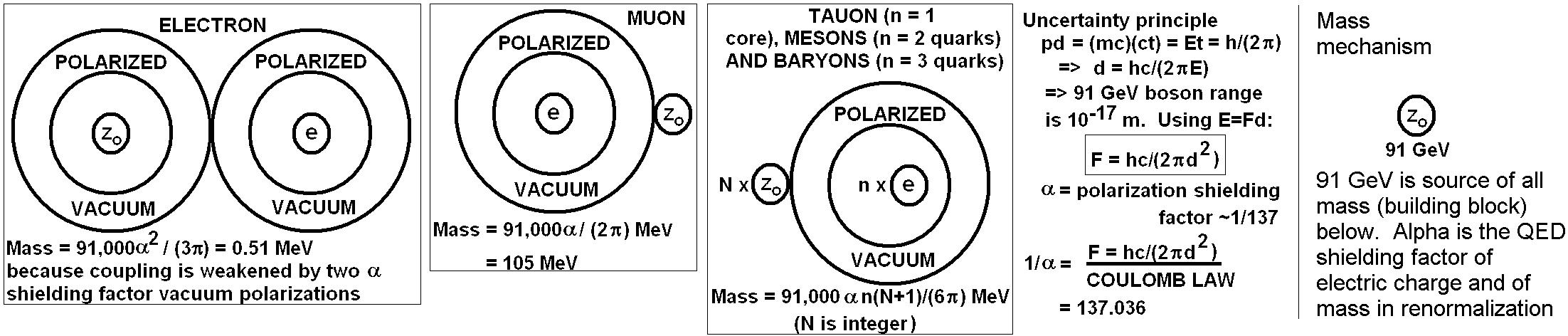

Above: If a discrete number of fixed-mass gravitational charges clump around each fermion core, 'miring it' like treacle, you can predict all lepton and hadron inertial and gravitational masses. The gravitational charges have inertia because they are exchanging gravitons with all other masses around the universe, which physically holds them where they are (if they move, they encounter extra pressure from graviton exchange in the direction of their motion, which causes contraction, requiring energy; hence resistance to acceleration, which is just Newton's 1st law, inertia). The illustration of a miring particle mass model shows a discrete number of 91 GeV mass particles surrounding the IR cutoff outer edge of the polarized vacuum around a fundamental particle core, giving mass. A PDF table comparing crude model mass estimates to observed masses of particles is linked here. There is evidence for the quantization of mass from the way the mathematics works for spin-1 quantum gravity. If you treat two masses being pushed together by spin-1 graviton exchanges with the isotropically distributed mass of the universe accelerating radially away from them (viewed in their reference frame), you get the correct prediction of gravity, as illustrated here. But if you do the same spin-1 quantum gravity analysis but only consider one mass and try to work out the acceleration field around it, as illustrated here, you get (using the empirically defensible black hole event horizon radius to calculate the graviton scatter cross-section) a prediction that gravitational force is proportional to mass^2, which suggests all particle masses are built up from a single fixed-size building block of mass.
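The mass-squared scaling can be checked directly if, as the caption describes, the graviton scatter cross-section is taken from the black hole event horizon radius r_s = 2GM/c². A minimal sketch, assuming the cross-section is the geometric area σ = πr_s² (that specific choice, and the function names, are assumptions of this illustration):

```python
import math

G = 6.67430e-11             # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8            # speed of light, m/s
M_ELECTRON = 9.1093837e-31  # electron mass, kg

def schwarzschild_radius(mass_kg: float) -> float:
    """Event horizon radius r_s = 2GM/c^2."""
    return 2.0 * G * mass_kg / C ** 2

def horizon_cross_section(mass_kg: float) -> float:
    """Assumed geometric cross-section pi * r_s^2, which scales as mass squared."""
    return math.pi * schwarzschild_radius(mass_kg) ** 2

if __name__ == "__main__":
    print(f"electron r_s = {schwarzschild_radius(M_ELECTRON):.3e} m")
    ratio = horizon_cross_section(2 * M_ELECTRON) / horizon_cross_section(M_ELECTRON)
    print(f"doubling the mass multiplies the cross-section by {ratio:.1f}")
```

Since r_s is linear in M, σ goes as M², so a force taken proportional to σ is proportional to mass squared, the scaling the caption uses to argue for a single fixed building block of mass.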
The identification of the number of mass particles for each fermion (fundamental particle) in the illustration and in the table here is by analogy with nuclear magic numbers: in the shell model of the nucleus, the exceptional stability of nuclei containing 2, 8, 20, 28, 50 or 82 protons, or 2, 8, 20, 28, 50, 82 or 126 neutrons (or both), which are called 'magic numbers', is explained by the fact that these numbers represent successive completed (closed) shells of nucleons, by analogy with the shell structure of electrons in the atom. (Each nucleon has a set of four quantum numbers and obeys the exclusion principle in the nuclear structure, like electrons in the atom; the difference being that for orbital electrons the interaction between the orbital angular momentum and the spin angular momentum is generally weak, whereas for nucleons in the nucleus this spin-orbit interaction is strong.) Geometric factors like twice Pi appear to be obtained from spin considerations, as discussed in earlier blog posts, and they are common in quantum field theory. E.g., Schwinger's correction factor for Dirac's magnetic moment of the electron is 1 + (alpha)/(2*Pi).
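As a quick arithmetic check of the Schwinger factor quoted above, taking the CODATA 2018 value α = 1/137.035999084 (the function name is illustrative):

```python
import math

ALPHA = 1 / 137.035999084  # fine-structure constant (CODATA 2018)

def schwinger_factor(alpha: float = ALPHA) -> float:
    """First-order QED correction to Dirac's electron magnetic moment: 1 + alpha/(2 pi)."""
    return 1.0 + alpha / (2.0 * math.pi)

if __name__ == "__main__":
    print(f"1 + alpha/(2 pi) = {schwinger_factor():.7f}")
```

This gives about 1.0011614, close to the measured electron moment of about 1.00115965 Bohr magnetons; the small remaining difference comes from higher-order loop corrections.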

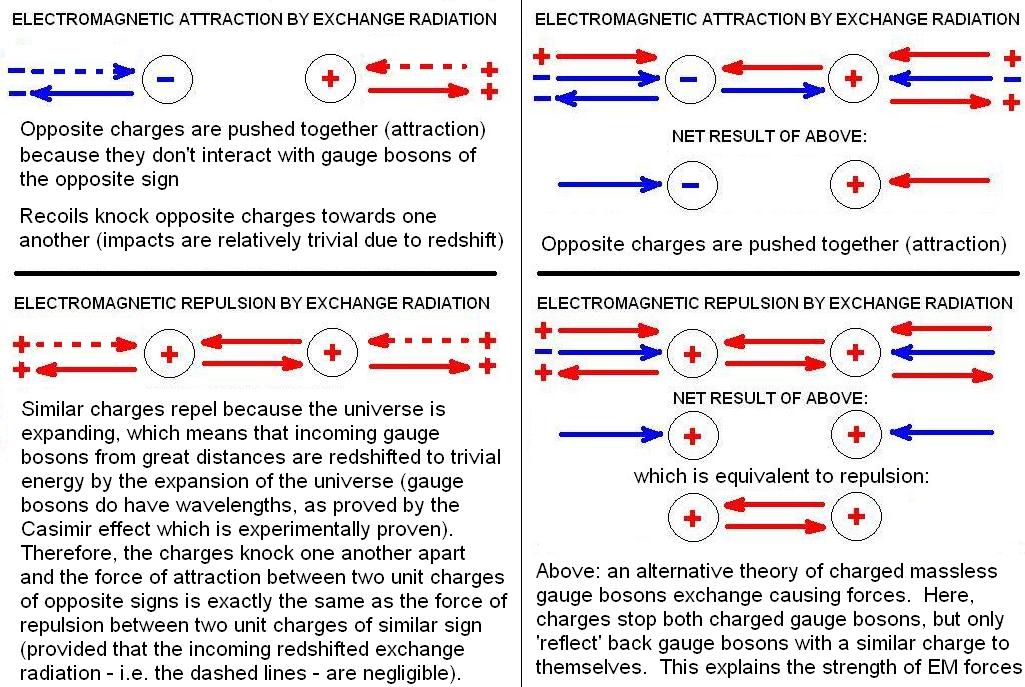

Above: the mechanism for electromagnetic forces explains physically how those force interactions occur by the exchange of gauge bosons (without invoking the magical '4-polarization photon'), allowing the understanding of fields and resolving anomalies in electromagnetism. The first evidence that the gauge bosons of electromagnetism are charged was the transmission line of electricity: both conductors propagate a charged field at the velocity of light for the surrounding insulator, as demonstrated on the blog page here. So in addition to the new quantum gravity model's ability to predict masses of fundamental particles and the coupling constant for gravity, it also deals with electromagnetism properly, showing why its force strength differs so much from gravity (electromagnetism theoretically involves a random walk between all charges in the universe, which makes it stronger than gravity by the square root of the number of fermions, (10^80)^(1/2) = 10^40).

Above: a random walk of charged massless electromagnetic gauge bosons between N = 10^80 fermions in the universe would create a force that adds up to the square root of that number (N^(1/2) = 10^40) multiplied by the force between just two particles, explaining why the force we calculate for quantum gravity between just two particles is smaller by a factor of 10^40 than the force of electromagnetism! In other words, electromagnetism is much stronger than gravity because its path integral includes additive contributions from all the charges in the universe (albeit with gross inefficiency due to the vector directions not adding up coherently, i.e. the random walk summation gives an effective sum of only 10^40, not 10^80, times gravity), whereas gravity carried by uncharged spin-1 gauge bosons does not involve force-enhancing contributions from all the mass in the universe but just the line-of-sight shadowing of one mass by another.
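The square-root scaling this argument relies on is the standard property of a random walk: adding N unit vectors with independent, random directions gives a resultant whose root-mean-square length is √N, not N. A minimal Monte Carlo check, assuming isotropic 3D directions (the function names and trial counts are illustrative); it demonstrates only the statistics, not the proposed physical mechanism:

```python
import math
import random

def random_unit_vector(rng: random.Random) -> tuple[float, float, float]:
    """Isotropic direction: z uniform in [-1, 1], azimuth uniform in [0, 2 pi)."""
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    s = math.sqrt(1.0 - z * z)
    return (s * math.cos(phi), s * math.sin(phi), z)

def rms_resultant(n_steps: int, trials: int, seed: int = 0) -> float:
    """Root-mean-square length of the sum of n_steps random unit vectors."""
    rng = random.Random(seed)
    total_sq = 0.0
    for _ in range(trials):
        x = y = z = 0.0
        for _ in range(n_steps):
            dx, dy, dz = random_unit_vector(rng)
            x += dx
            y += dy
            z += dz
        total_sq += x * x + y * y + z * z
    return math.sqrt(total_sq / trials)

if __name__ == "__main__":
    for n in (100, 400, 1600):
        print(f"N = {n}: rms = {rms_resultant(n, trials=200):.1f}, sqrt(N) = {math.sqrt(n):.1f}")
    # The post's figure, checked exactly with integers: sqrt(10^80) = 10^40.
    print(math.isqrt(10 ** 80) == 10 ** 40)
```

Quadrupling N only doubles the resultant, which is the inefficiency of incoherent vector addition described in the caption.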

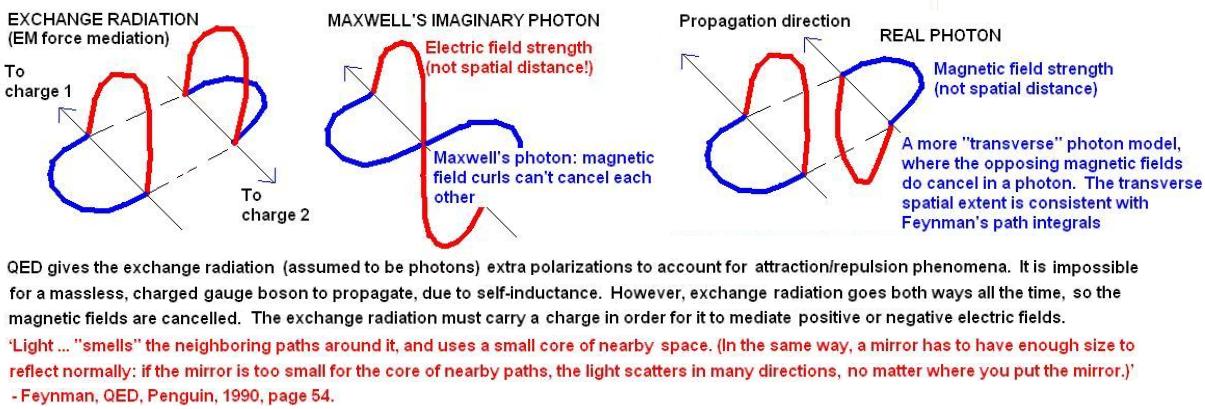

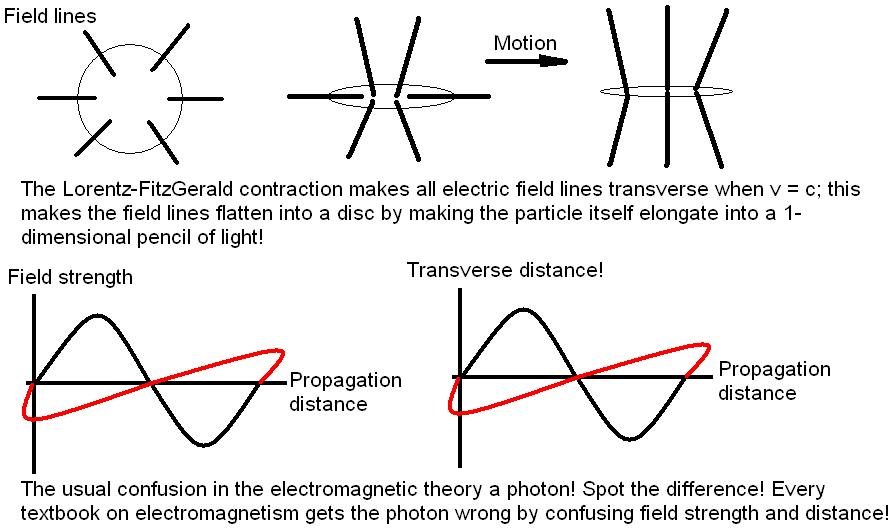

Above: photons, real and virtual, compared to Maxwell's photon illustration. The Maxwell model photon is always drawn as electric and magnetic 'fields' both at right angles (orthogonal) to the direction of propagation; however, this causes confusion because people assume that the 'fields' are directions, whereas they are actually field strengths. When you plot a graph of field strength versus distance, the field strength axis doesn't indicate distance. It is true that a transverse wave like a photon has a transverse extent, but this is not indicated by a plot of E-field strength and B-field strength versus propagation distance! People get confused and think it is a three-dimensional plot of a photon, when it is just a 1-dimensional plot that merely indicates how the magnetic field strength and electric field strength vary in the direction of propagation! Maxwell's theory is empty when you recognise this, because you are left with a 1-dimensional photon, not a truly transverse photon as observed. So we illustrate above how photons really propagate, using hard facts from the study of the propagation of light-velocity logic signals by Heaviside and Catt, with corrections for their errors. The key thing is that massless charges won't propagate in a single direction only, because the magnetic fields they produce cause self-inductance which prevents motion. Massive charges overcome this by radiating electromagnetic waves as they accelerate, but massless charges will only propagate if there is an equal amount of charge flowing in the opposite direction at the same time to cancel out their magnetic field (because the magnetic fields curl around the direction of propagation, they cancel in this situation if the charges are similar).
So we can deduce the mechanism of propagation of real photons and virtual (exchange) gauge bosons, and the mechanism is compatible with path integrals, the double slit diffraction experiment with single photons (the transverse extent of the photon must be bigger than the distance between slits for an interference pattern), etc.

Above: the incorporation of U(1) charge as mass (gravitational vacuum charge is quantized and always has identical mass to the Z0, as already shown) and mixed neutral U(1) x SU(2) gauge bosons as quantum spin-1 gravitons into the empirical, heuristically developed Standard Model of particle physics. The new model is illustrated on the left and the old Standard Model on the right. The SU(3) colour charge theory for strong interactions and quark triplets (baryons) is totally unaltered. The electroweak U(1) x SU(2) symmetry is radically altered in interpretation but not in mathematical structure! The difference is that in the Standard Model the massless charged SU(2) gauge bosons are all assumed to acquire mass at low energy from some kind of unobserved ‘Higgs field’ (there are several models with differing numbers of Higgs bosons). This means that in the Standard Model, a ‘special’ 4-polarization photon mediates the electromagnetic interactions (requiring 4 polarizations so that it can mediate both positive and negative force fields around positive and negative charges, not merely the 2 polarizations we observe with photons!).

Correcting the Standard Model so that it deals with electromagnetism correctly and contains gravity simply requires the replacement of the Higgs field with one that only couples to one spin handedness of the electrically charged SU(2) bosons, giving them mass. The other handedness of electrically charged SU(2) bosons remain massless even at low energy and mediate electromagnetic interactions!

To understand how this works, notice that the weak force isospin charges of the weak bosons, such as W- and W+, are identical to their electric charges! Isospin is acquired when an electrically charged massless gauge boson (with no isotopic charge) acquires mass from the vacuum. The key difference between isotopic spin and electric charge is the massiveness of the gauge bosons, which alone determines whether the field obeys the Yang-Mills equation (where particle charge can be altered by the field) or the Maxwell equations (where a particle’s charge cannot be affected by the field). This is a result of magnetic self-inductance created by the motion of a charge:

(1) A massless electric charge can’t propagate in one direction by itself, because such motion of massless charge would cause infinite magnetic self-inductance that prevents motion. (Massless radiations can’t be accelerated because they only travel at the velocity of light, so unlike massive charged radiations, they cannot compensate for magnetic effects by radiating energy while being accelerated.) Therefore massless charged radiation cannot propagate to deliver charge to a particle! Massless charged radiation can only ever behave as ‘exchange radiation’, whereby there is an equal flux of charged massless radiation from charge A to B and simultaneously back from B to A, so that the opposite magnetic curls of each opposite-directed flux of exchange radiation oppose one another and cancel out, preventing the problem of magnetic self-inductance. In this situation, the charge of fermions remains constant and cannot ever vary, because the charge each fermion loses per second is equal to the amount of charge delivered to it by the equilibrium of exchange radiation. In other words, the energy delivered by the charged massless gauge bosons can vary (if charges move apart for instance, it can be redshifted), but the electric charge delivered is always in equilibrium with that emitted each second. Hence, the Yang-Mills equation is severely constrained in the case of electromagnetism and reduces to the Maxwell theory, as observed.

(2) Massive charged gauge bosons can propagate by themselves in one direction because their mass makes them move slower than light, so they can be accelerated (if they were massless this would not be true, because a massless gauge boson goes at light velocity and cannot be accelerated to any higher velocity): this acceleration permits the charged massive particle to get around infinite magnetic self-inductance by radiating electromagnetic waves while accelerating. Therefore, massive charged gauge bosons can carry a net charge and can affect the charge of the fermions they interact with, which is why they obey the Yang-Mills equation, not Maxwell’s.

Electromagnetism is described by SU(2) isospin with massless charged positive and negative gauge bosons

The usual argument against massless charged radiation propagating is infinite self-inductance, but as discussed in the blog page here this doesn't apply to virtual (gauge boson) exchange radiations, because the curls of the magnetic field around the portion of the radiation going from charge A to charge B are exactly cancelled out by the magnetic field curls from the radiation going the other way, from charge B to charge A. Hence, massless charged gauge bosons can propagate in space, provided they are being exchanged simultaneously in both directions between electric charges, and not just from one charge to another without a return current.
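The cancellation claimed above can be illustrated with the classical magnetic field of a long straight current, B = μ0 I/(2πr), from Ampère's law. This is only a classical analogy, assuming that the two opposite exchange fluxes can be modelled as equal and opposite line currents along the same path; the function names and numbers are hypothetical.

```python
import math

MU_0 = 4e-7 * math.pi  # vacuum permeability in T*m/A (classical value, adequate here)

def azimuthal_field(current_amps: float, distance_m: float) -> float:
    """Signed azimuthal (curling) magnetic field of a long straight current,
    B = mu_0 * I / (2 * pi * r). The sign follows the current direction."""
    return MU_0 * current_amps / (2 * math.pi * distance_m)

def net_field(currents, distance_m):
    """Superpose the fields of several currents sharing the same path."""
    return sum(azimuthal_field(i, distance_m) for i in currents)

one_way = net_field([1.0], 0.01)         # flux from A to B only
two_way = net_field([1.0, -1.0], 0.01)   # equal fluxes A -> B and B -> A
print(f"one-way: {one_way:.2e} T, two-way: {two_way:.2e} T")
```

The one-way case leaves a net curl of about 2 × 10^-5 T at 1 cm for a 1 A current, while the two-way case sums to zero, which is the classical content of the "return current" condition.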

You really need electrically charged gauge bosons to describe electromagnetism, because the electric field between two electrons is different in nature to that between two positrons: so you can't describe this difference by postulating that both fields are mediated by the same neutral virtual photons, unless you grant the 2 additional polarizations of the virtual photon (the ordinary photon has only 2 polarizations, while the virtual photon must have 4) to be electric charge!

The virtual photon mediated between two electrons is negatively charged and that mediated between two positrons (or two protons) is positively charged. Only like charges can exchange virtual photons with one another, so two similar charges exchange virtual photons and are pushed apart, while opposite electric charges shield one another and are pushed together by a random-walk of charged virtual photons between the randomly distributed similar charges around the universe as explained in a previous post.

What is particularly neat about having electrically charged electromagnetic virtual photons is that it automatically requires an SU(2) Yang-Mills theory! The mainstream U(1) Maxwellian electromagnetic gauge theory makes a change in the electromagnetic field induce a phase shift in the wave function of a charged particle, not a change in the electric charge of the particle! But with charged gauge bosons instead of neutral gauge bosons, the bosonic field is able to change the charge of a fermion, just as the SU(2) charged weak bosons are able to change the isospin charges of fermions.

We don't normally see electromagnetic fields changing the electric charge of fermions, because fermions radiate as much electric charge per second as they receive from other charges, thereby maintaining an equilibrium. However, the electric field of a fermion is affected by its state of motion relative to an observer, as the electric field line distribution appears to make the electron "flatten" in the direction of motion due to Lorentz contraction at relativistic velocities. To summarize:

U(1) electromagnetism: is described by Maxwellian equations. The field is uncharged and so cannot carry charge to or from fermions. Changes in the field can only produce phase shifts in the wavefunction of a charged particle (which show up as, for example, the acceleration of charges), and can never change the charge of a charged particle.

SU(2) electromagnetism (two charged massless gauge bosons): is described by the Yang-Mills equation because the field is electrically charged and can change not just the phase of the wavefunction of a charged particle to accelerate a charge, but can also in principle (although not in practice) change the electric charge of a fermion. This simplifies the Standard Model because SU(2) with two massive charged gauge bosons is already needed, and it naturally predicts (in the absence of a Higgs field without a chiral discrimination for left-handed spinors) the existence of massless uncharged versions of these massive charged gauge bosons, which were observed at CERN in 1983.

The Yang-Mills equation is used for any bosonic field which carries a charge and can therefore (in principle) change the charge of a fermion. The weak force SU(2) charge is isospin and the electrically charged massive weak charge gauge bosons carry an isospin charge which is IDENTICAL to the electric charge, while the massive neutral weak boson has zero electric charge and zero isospin charge. The Yang-Mills equation is:

$$\frac{\partial F_{\mu\nu}}{\partial x_\nu} + 2e\,(A_\nu \times F_{\mu\nu}) + J_\mu = 0$$

which is similar to Maxwell's equations ($F_{\mu\nu}$ is the field strength and $J_\mu$ is the current), apart from the second term, $2e(A_\nu \times F_{\mu\nu})$, which describes the effect of the charged field upon itself ($e$ is the charge and $A_\nu$ is the field potential). The term $2e(A_\nu \times F_{\mu\nu})$ doesn't appear in Maxwell's equations for two reasons:

(1) an exact symmetry between the rate of emission and reception of charged massless electromagnetic gauge bosons is forced by the fact that charged massless gauge bosons can only propagate in the vacuum where there is an equal return current coming from the other direction (otherwise they can't propagate, because charged massless radiation has infinite self-inductance due to the magnetic field produced, which is only cancelled out if there is an identical return current of charged gauge bosons, i.e. a perfect equilibrium or symmetry between the rates of emission and reception of charged massless gauge bosons by fermionic charges). This prevents fermionic charges from increasing or decreasing, because the rate of gain and rate of loss of charge per second is always the same.

(2) the symmetry between the number of positive and negative charges in the universe keeps electromagnetic field strengths low normally, so the self-interaction of the charge of the field with itself is minimal.

These two symmetries act together to prevent the Yang-Mills $2e(A_\nu \times F_{\mu\nu})$ term from having any observable effect in laboratory electromagnetism, which is why the mainstream empirical Maxwellian model works as a good approximation, despite being incorrect at a more fundamental physical level of understanding.
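In Yang and Mills' original notation, the potential $A_\nu$ and the field strength $F_{\mu\nu}$ each carry three isospin components, and × is the ordinary cross product in isospin space. The minimal sketch below (plain Python, hypothetical helper names, one fixed pair of spacetime indices, illustrative values only) shows the standard algebraic point that the self-interaction term vanishes identically whenever the potential and field lie along a single isospin direction, which is the case in which the equation takes Maxwell's form. This is a textbook property of the term itself, separate from the equilibrium argument given above.

```python
def cross(a, b):
    """Cross product of two isospin 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def self_interaction_term(e, a_nu, f_mu_nu):
    """2e (A_nu x F_mu_nu) for one fixed pair of spacetime indices (mu, nu),
    with each quantity given by its three isospin components."""
    return tuple(2 * e * c for c in cross(a_nu, f_mu_nu))

# Illustrative values only.
# Potential and field along the same isospin axis:
aligned = self_interaction_term(0.3, (0.0, 0.0, 1.5), (0.0, 0.0, -2.0))
# Potential along isospin axis 1, field along isospin axis 3:
mixed = self_interaction_term(0.3, (1.0, 0.0, 0.0), (0.0, 0.0, 2.0))
print(aligned, mixed)
```

The aligned case gives a zero vector, while the mixed case gives a nonzero component along the second isospin axis, which is where the self-interaction of a charged field enters.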

Quantum gravity is supposed to be similar to a Yang-Mills theory in that the energy of the gravitational field is supposed (in general relativity, which ignores vital quantum effects such as the mass-giving "Higgs field", or whatever actually provides mass, and its interaction with gravitons) to be a source for gravity itself. In other words, like a Yang-Mills field, the gravitational field is supposed to generate a gravitational field simply by virtue of its energy, and therefore should interact with itself. If this simplistic idea from general relativity is true, then according to the theory presented on this blog page, the massless electrically neutral gauge boson of SU(2) is the spin-1 graviton. However, the structure of the Standard Model implies that some field is needed to provide mass even if the mainstream Higgs mechanism for electroweak symmetry breaking is wrong.

Therefore, the massless electrically neutral (photon-like) gauge boson of SU(2) may not be the graviton, but is instead an intermediary gauge boson which interacts in a simple way with massive (gravitational charge) particles in the vacuum: these massive (gravitational charge) particles may be described by the simple Abelian symmetry U(1). So U(1) then describes quantum gravity: it has one charge (mass) and one gauge boson (spin-1 graviton).

'Yet there are new things to discover, if we have the courage and dedication (and money!) to press onwards. Our dream is nothing else than the disproof of the standard model and its replacement by a new and better theory. We continue, as we have always done, to search for a deeper understanding of nature's mystery: to learn what matter is, how it behaves at the most fundamental level, and how the laws we discover can explain the birth of the universe in the primordial big bang.' - Sheldon L. Glashow, The Charm of Physics, American Institute of Physics, New York, 1991. (Quoted by E. Harrison, Cosmology, Cambridge University Press, London, 2nd ed., 2000, p. 428.)

Conventionally U(1) represents weak hypercharge and SU(2) the weak interaction, with the unobserved B gauge boson of U(1) 'mixing' (according to the Glashow/Weinberg mixing angle) with the W0 unobserved gauge boson of SU(2) to produce the observed electromagnetic photon and the observed weak neutral current gauge boson, the Z0. (It's not specified in the Standard Model whether this mixing is supposed to occur before or after the weak gauge bosons have actually acquired mass from the speculative Higgs field. The Higgs field is a misnomer since there isn't one specific theory but various speculations, including contradictory theories with differing numbers of 'Higgs bosons', none of which have been observed. Woit mentions in Not Even Wrong that since Weinberg put a Higgs mechanism into the electroweak theory in 1967, the Higgs theory has been called 'Weinberg's toilet' by Glashow (Woit’s undergraduate adviser) because, although a mass-giving field is needed to give mass to weak bosons and thus break electroweak symmetry into separate electromagnetic and weak forces at low energy, Higgs' theories stink.)

In the empirical model we will describe in this post, U(1) is still weak hypercharge, but SU(2) without mass is electromagnetism (with charged massless gauge bosons, positive for positive electric fields and negative for negative electric fields) and left-handed isospin charge (forming quark doublets, mesons). The Glashow/Weinberg mixing remains, but the massless electrically neutral product is the graviton, an unobservably high-energy photon whose wavelength is so small that it only interacts with the tiny black hole-sized cores of the gravitational charges (masses) of fundamental particles. This has the advantage of making U(1) x SU(2) x SU(3) a theory of all interactions, without changing the experimentally confirmed mathematical structure significantly (we will show below how the Yang-Mills equation reduces to the Maxwell equations for charged massless gauge bosons). The addition of mass to half of the electromagnetic gauge bosons gives them their left-handed weak isospin charge, so they interact only with left-handed spinors. The other features of the weak interaction (apart from just acting on left-handed spinors), such as the weak interaction strength and the short range, are also due to the massiveness of the weak gauge bosons.

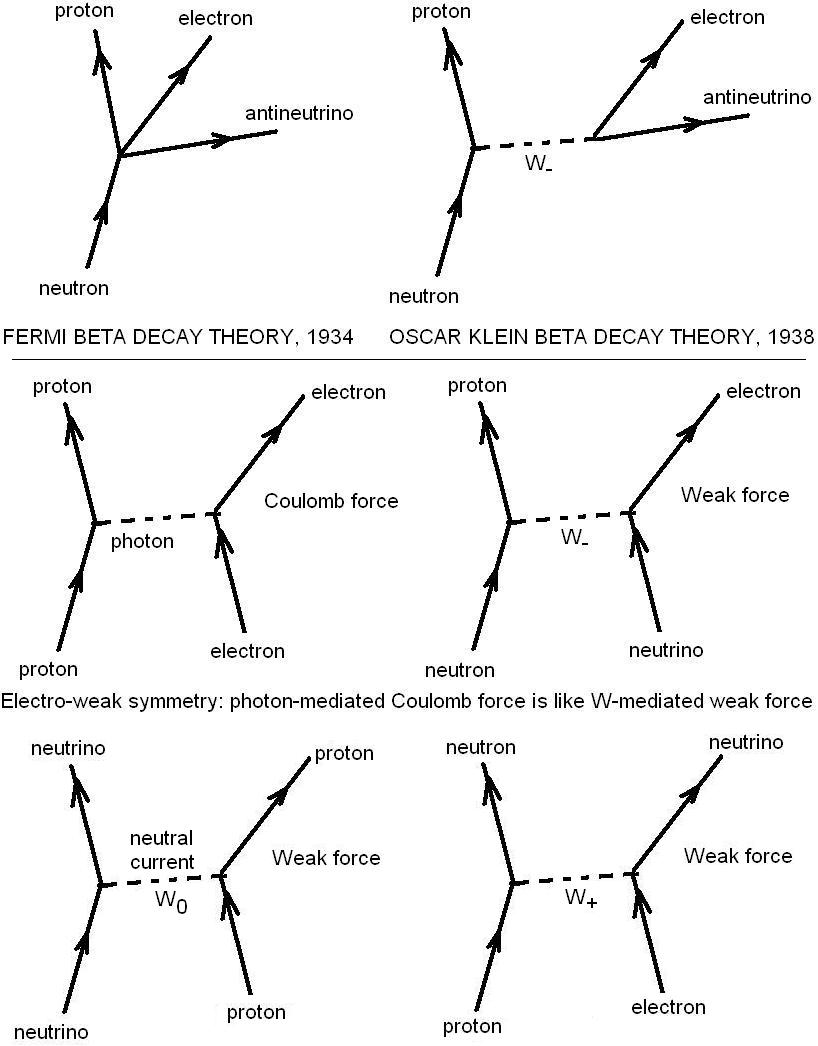

Beta radioactivity, controlled by the weak force, is the process whereby neutrons decay into protons, electrons and antineutrinos, by a downquark decaying into an upquark through emitting a W- weak boson, which then decays into an electron and an antineutrino.
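The energy bookkeeping behind this decay, and behind the ban on free-proton decay discussed next, can be checked from rest masses alone. A minimal sketch, assuming rounded present-day mass values in MeV/c² (neutron 939.565, proton 938.272, electron or positron 0.511) and neglecting neutrino masses; the helper name `q_value` is hypothetical.

```python
# Rest masses in MeV/c^2 (rounded present-day values); neutrino masses neglected.
M_NEUTRON = 939.56542
M_PROTON = 938.27209
M_ELECTRON = 0.51100  # the positron has the same mass

def q_value(parent, *products):
    """Energy released (MeV) if parent -> products.
    A negative value means the decay is forbidden for a free, isolated parent."""
    return parent - sum(products)

q_free_neutron = q_value(M_NEUTRON, M_PROTON, M_ELECTRON)  # n -> p + e- + antineutrino
q_free_proton = q_value(M_PROTON, M_NEUTRON, M_ELECTRON)   # p -> n + e+ + neutrino
print(f"free neutron decay: Q = {q_free_neutron:+.3f} MeV")
print(f"free proton decay:  Q = {q_free_proton:+.3f} MeV")
```

Free neutron decay releases roughly 0.78 MeV, shared between the electron and the antineutrino, while the reverse process for a free proton would need about 1.8 MeV supplied from outside, so it cannot happen spontaneously.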

This weak interaction is asymmetric due to the massive gauge bosons: free protons can never decay by an upquark transforming into a downquark through emitting a W+ weak boson which then decays into a neutrino and a positron. The reason? Violation of mass-energy conservation! The decay of free protons into neutrons is banned because neutrons are heavier than protons, and mass-energy is conserved. (Protons bound in nuclei get extra effective mass from the binding energy of the strong force field in the nucleus, so in some cases, such as radioactive carbon-11 which is used in PET scanners, protons decay into neutrons by emitting a positive weak gauge boson which decays into a positron and a neutrino.) The left-handedness of the weak interaction is produced by the coupling of the gauge bosons to massive vacuum charges. The short range, strength and left-handedness of weak interactions are all due to the effect of mass on electromagnetic gauge bosons and charges. Mass limits the range and strength of the weak interaction and it prevents right-handed spinors from undergoing weak interactions. The whole point about electroweak theory is that the electromagnetic and weak interactions are identical in strength and nature apart from the effect that the weak gauge bosons are massive. Whereas the electromagnetic force charge for beta decay is $\alpha \approx 1/137.036$, the corresponding weak force charge for low energies (proton-sized distances) is $\alpha (M_{\text{proton}}/M_W)^2$, so that it depends on the square of the ratio of the mass of the proton (the decay product in beta decay) to the mass of the weak gauge boson involved, $M_W$. Since $M_{\text{proton}} \approx 1$ GeV and $M_W \approx 80$ GeV, the low-energy weak force charge is on the order of $1/80^2$ of the electromagnetic charge, $\alpha$. 
It is the fact that the weak interaction involves massive virtual photons, with over 80 times the mass of the decay products (!), that causes it to be so much weaker than electromagnetism at low energies (nuclear-sized distances). Neglecting the effects of mass on the interaction strength and range of the weak force, it is the same thing as electromagnetism. At very high energies exceeding 100 GeV (short distances, less than $10^{-18}$ metre), the massive weak gauge bosons can be exchanged without the distance being an issue, and the weak force is then similar in strength to the electromagnetic force! The weak and strong forces can only act over a maximum distance of about 1 fermi ($10^{-15}$ metre) due to the limited range of the gauge bosons (massive W's for the weak interaction, and pions for the longest-range residual component of the strong colour force).
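The two numerical claims in this paragraph can be checked with a few lines of arithmetic: the text's own rough estimate $\alpha (M_{\text{proton}}/M_W)^2$, and the standard Yukawa estimate that a force carried by a boson of mass $m$ has a range of roughly the reduced Compton wavelength $\hbar/(mc)$. A minimal sketch, assuming $\hbar c \approx 197.327$ MeV fm, the masses quoted in the text, and a charged pion mass of about 139.6 MeV; the function names are hypothetical.

```python
ALPHA = 1 / 137.036        # fine-structure constant (low-energy value)
HBAR_C_MEV_FM = 197.327    # hbar*c in MeV*fm, where 1 fm = 1e-15 m

def weak_strength_estimate(m_fermion_gev, m_boson_gev, alpha=ALPHA):
    """The text's rough low-energy weak coupling: alpha * (M_proton / M_W)^2."""
    return alpha * (m_fermion_gev / m_boson_gev) ** 2

def yukawa_range_m(mass_mev):
    """Range estimate hbar/(m c) for an exchanged boson of the given mass, in metres."""
    return HBAR_C_MEV_FM / mass_mev * 1e-15

weak = weak_strength_estimate(1.0, 80.0)   # M_proton ~ 1 GeV, M_W ~ 80 GeV
print(f"weak / electromagnetic ~ 1/{ALPHA / weak:.0f}")
for name, mass_mev in [("W", 80_400.0), ("Z0", 91_200.0), ("pion", 139.6)]:
    print(f"{name}: range ~ {yukawa_range_m(mass_mev):.2e} m")
```

The suppression factor comes out as 1/6400, and the W and Z0 ranges at a few times $10^{-18}$ metre against about $1.4 \times 10^{-15}$ metre for the pion, consistent in order of magnitude with the distances quoted above.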

Electromagnetic and strong forces conserve the number of interacting fermions, so that the number of fermion reactants is the same as the number of fermion products, but the weak force allows the number of fermion products to differ from the number of fermion reactants. The weak force involves neutrinos which have weak charges, little mass and no electromagnetic or strong charge, so they are weakly interacting (very penetrating).

Above: beta decay is controlled by the weak force, which is similar to the electromagnetic interaction (on a Feynman diagram, an ingoing neutrino is equivalent to having an antineutrino as an interaction product). In place of electromagnetic photons mediating particle interactions, there are three weak gauge bosons. If these weak gauge bosons were massless, the strength of the weak interaction would be the same as that of the electromagnetic interaction. However, the weak gauge bosons are massive, and that makes the weak interaction much weaker than electromagnetism at low energies (i.e. relatively big, nucleon-sized distances). This is because the massive virtual weak gauge bosons are short-ranged due to their massiveness (they suffer rapid attenuation with distance in the quantum field vacuum), so the weak boson interaction rate drops sharply with increasing distance. Hence, by analogy to Yukawa's famous 1935 theoretical prediction of the pion mass from the experimentally known range of pion-mediated nuclear interactions, it was possible to predict the masses of the weak gauge bosons from Glashow's theory of weak interactions and the experimentally known weak interaction strength, giving 82 GeV (W- and W+) and 92 GeV (Z0). These predictions were closely confirmed by the UA1 and UA2 experiments at CERN's proton-antiproton collider (the converted Super Proton Synchrotron), with the W discovery announced in January 1983 and the Z0 discovery later that year. (The masses are now established to be 80.4 and 91.2 GeV respectively.) Neutral currents due to exchange of the electrically neutral massive W0, or Z0 (as it is known after it has undergone Weinberg-Glashow mixing with the photon in electroweak theory), had already been confirmed experimentally in 1973, leading to the award of the 1979 Nobel Prize to Glashow, Salam and Weinberg for the SU(2) weak gauge boson theory (Glashow's work of 1961 had been extended by Weinberg and Salam in 1967). 
(No Nobel Prize has been awarded for the entire electroweak theory because nobody has detected the speculative Higgs field boson(s) postulated to give mass, and thus electroweak symmetry breaking, in the mainstream electroweak theory.) One neutral current interaction is illustrated above. However, other Z0 neutral currents exist and are very similar to electromagnetic interactions; e.g. the Z0 can mediate electron scattering, although at low energies this process is trivial in comparison to electromagnetic (Coulomb) scattering, because the mass of the Z0 makes the neutral current interaction weak at low energies (i.e. large distances).
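The mass prediction described above can be sketched with the standard lowest-order electroweak relations, $M_W^2 = \pi\alpha/(\sqrt{2}\,G_F \sin^2\theta_W)$ and $M_Z = M_W/\cos\theta_W$, where $G_F$ is the Fermi constant measured in muon decay and $\theta_W$ is the weak mixing angle. This is a tree-level sketch with a hypothetical function name; the published pre-1983 estimates typically also included radiative corrections and used the mixing angle measured at the time, so this simplified version only lands in the right neighbourhood (with the low-energy $\alpha$ it comes out a few GeV low for the present-day $\sin^2\theta_W \approx 0.23$).

```python
import math

ALPHA = 1 / 137.036   # fine-structure constant at low energy
G_F = 1.1663787e-5    # Fermi constant in GeV^-2 (from muon decay)

def tree_level_masses(sin2_theta_w, alpha=ALPHA, g_f=G_F):
    """Lowest-order electroweak estimate, in GeV:
    M_W^2 = pi*alpha / (sqrt(2) * G_F * sin^2(theta_W)),  M_Z = M_W / cos(theta_W)."""
    m_w = math.sqrt(math.pi * alpha / (math.sqrt(2) * g_f * sin2_theta_w))
    m_z = m_w / math.sqrt(1 - sin2_theta_w)
    return m_w, m_z

for sin2 in (0.21, 0.23):
    m_w, m_z = tree_level_masses(sin2)
    print(f"sin^2(theta_W) = {sin2}: M_W ~ {m_w:.1f} GeV, M_Z ~ {m_z:.1f} GeV")
```

The prefactor $\sqrt{\pi\alpha/(\sqrt{2}G_F)}$ is about 37.3 GeV, so the predicted masses are set almost entirely by the measured weak coupling $G_F$ and the mixing angle.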

How this gravity mechanism updates the Standard Model of particle physics

'The electron and antineutrino [both emitted in beta decay of neutron to proton, as argued by Pauli in 1930 from energy conservation using the experimental data on beta energy spectra; the mean beta energy is only 30% of the energy lost in beta decay, so 70% on average must be in antineutrinos] each have a spin 1/2 and so their combination can have spin total 0 or 1. The photon, by contrast, has spin 1. By analogy with electromagnetism, Fermi had (correctly) supposed that only the spin 1 combination emerged in the weak decay. To further the analogy, in 1938, Oscar Klein suggested that a spin 1 particle ('W boson') mediated the decay, this boson playing a role in weak interactions like that of the photon in the electromagnetic case [electron-proton scattering is mediated by virtual photons, and is analogous to the W-mediated 'scattering' interaction between a neutron and a neutrino (not antineutrino) that results in a proton and an electron/beta particle; since an incoming (reactant) neutrino has the same effect on a reaction as a released (resultant) antineutrino, this W-mediated scattering process is equivalent to the beta decay of a neutron].

'In 1957, Julian Schwinger extended these ideas and attempted to build a unified model of weak and electromagnetic forces by taking Klein's model and exploiting an analogy between it and Yukawa's model of nuclear forces [where pion exchange between nucleons causes the attractive component of the strong interaction, binding the nucleons into the nucleus against the repulsive electric force between protons]. As the pion+, pion-, and pion0 are exchanged between interacting particles in Yukawa's model of the nuclear force, so might the W+, W-, and [W0] photon be in the weak and electromagnetic forces.

'However, the analogy is not perfect ... the weak and electromagnetic forces are very sensitive to electrical charge: the forces mediated by W+ and W- appear to be more feeble than the electromagnetic force.' - Professor Frank Close, The New Cosmic Onion, Taylor and Francis, New York, 2007, pp. 108-9.

The Yang-Mills SU(2) gauge theory of 1954 was first (incorrectly but interestingly) applied to weak interactions by Schwinger and Glashow in 1956, as Glashow explains in his Nobel prize award lecture:

‘Schwinger, as early as 1956, believed that the weak and electromagnetic interactions should be combined into a gauge theory. The charged massive vector intermediary and the massless photon were to be the gauge mesons. As his student, I accepted his faith. ... We used the original SU(2) gauge interaction of Yang and Mills. Things had to be arranged so that the charged current, but not the neutral (electromagnetic) current, would violate parity and strangeness. Such a theory is technically possible to construct, but it is both ugly and experimentally false [H. Georgi and S. L. Glashow, Physical Review Letters, 28, 1494 (1972)]. We know now that neutral currents do exist and that the electroweak gauge group must be larger than SU(2).

‘Another electroweak synthesis without neutral currents was put forward by Salam and Ward in 1959. Again, they failed to see how to incorporate the experimental fact of parity violation. Incidentally, in a continuation of their work in 1961, they suggested a gauge theory of strong, weak and electromagnetic interactions based on the local symmetry group SU(2) x SU(2) [A. Salam and J. Ward, Nuovo Cimento, 19, 165 (1961)]. This was a remarkable portent of the SU(3) x SU(2) x U(1) model which is accepted today.

‘We come to my own work done in Copenhagen in 1960, and done independently by Salam and Ward. We finally saw that a gauge group larger than SU(2) was necessary to describe the electroweak interactions. Salam and Ward were motivated by the compelling beauty of gauge theory. I thought I saw a way to a renormalizable scheme. I was led to SU(2) x U(1) by analogy with the appropriate isospin-hypercharge group which characterizes strong interactions. In this model there were two electrically neutral intermediaries: the massless photon and a massive neutral vector meson which I called B but which is now known as Z. The weak mixing angle determined to what linear combination of SU(2) x U(1) generators B would correspond. The precise form of the predicted neutral-current interaction has been verified by recent experimental data. …’

Glashow in 1961 published an SU(2) model which had three weak gauge bosons, the neutral one of which could mix with the photon of electromagnetism to produce the observed neutral gauge bosons of electroweak interactions. (For some reason, Glashow's weak mixing angle is now called Weinberg's mixing angle.) Glashow's theory predicted massless weak gauge bosons, not massive ones.

For this reason, a mass-giving field suggested by Peter Higgs in 1964 was incorporated into Glashow's model by Weinberg as a mass-giving and symmetry-breaking mechanism (Woit points out in his book Not Even Wrong that this Higgs field is known as 'Weinberg's toilet' because it was a vague theory which could exist in several forms with varying numbers of speculative 'Higgs bosons', and it couldn't predict the exact mass of a Higgs boson).

I've explained in a previous post, here, where we depart from Glashow's argument: Glashow and Schwinger in 1956 investigated SU(2) using for the 3 gauge bosons 2 massive weak gauge bosons and 1 uncharged electromagnetic massless gauge boson. This theory failed to include the massive uncharged Z gauge boson that produces neutral currents when exchanged. Because this specific SU(2) electro-weak theory is wrong, Glashow claimed that SU(2) is not big enough to include both weak and electromagnetic interactions!

However, this is an arm-waving dismissal and ignores a vital and obvious fact: SU(2) has 3 vector bosons but you need to supply mass to them by an external field (the Standard Model does this with some kind of speculative Higgs field, so far unverified by experiment). Without that (speculative) field, they are massless. So in effect SU(2) produces not 3 but 6 possible different gauge bosons: 3 massless gauge bosons with long range, and 3 massive ones with short range which describe the left-handed weak interaction.
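The count of three gauge bosons follows from SU(2) having three generators, which can be represented by the Pauli matrices; in general SU(n) has $n^2 - 1$ generators, while U(1) has one. Below is a minimal check in plain Python (hypothetical helper names, no external libraries) that the three Pauli matrices close under commutation, $[\sigma_a, \sigma_b] = 2i\,\epsilon_{abc}\,\sigma_c$, together with the generator count for the groups named in the text. The generator count says nothing by itself about masses; the massless/massive doubling argued above depends on the mass-giving field, which this sketch does not model.

```python
# Pauli matrices as nested lists of complex numbers.
SIGMA = [
    [[0, 1], [1, 0]],
    [[0, -1j], [1j, 0]],
    [[1, 0], [0, -1]],
]

def matmul(a, b):
    """Product of two 2x2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def commutator(a, b):
    """[a, b] = ab - ba for 2x2 matrices."""
    ab, ba = matmul(a, b), matmul(b, a)
    return [[ab[i][j] - ba[i][j] for j in range(2)] for i in range(2)]

def gauge_boson_count(n):
    """Number of generators (hence gauge bosons) of SU(n): n^2 - 1."""
    return n * n - 1

# [sigma_a, sigma_b] = 2i sigma_c for cyclic (a, b, c): the three generators
# close on one another, so SU(2) needs exactly three gauge fields.
for a, b, c in [(0, 1, 2), (1, 2, 0), (2, 0, 1)]:
    expected = [[2j * x for x in row] for row in SIGMA[c]]
    assert commutator(SIGMA[a], SIGMA[b]) == expected

print("SU(2):", gauge_boson_count(2), "SU(3):", gauge_boson_count(3))
print("U(1) x SU(2) x SU(3):", 1 + gauge_boson_count(2) + gauge_boson_count(3))
```

Running it prints 3 for SU(2), 8 for SU(3) and 12 for U(1) x SU(2) x SU(3), the usual Standard Model count before any mass-giving mechanism is applied.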

It is purely the assumed nature of the unobserved, speculative Higgs field which tries to get rid of the 3 massless versions of the weak field quanta! If you replace the unobserved Higgs mass mechanism with another mass mechanism which makes checkable predictions about particle masses, you then arrive at an SU(2) symmetry with in effect 2 versions (massive and massless) of the 3 gauge bosons of SU(2), and the massless versions of those will give rise to long-ranged gravitational and electromagnetic interactions. This reduces the Standard Model from U(1) x SU(2) x SU(3) to just SU(2) x SU(3), while incorporating gravity as the massless uncharged gauge boson of SU(2). I found the idea that the chiral symmetry features of the weak interaction connect with electroweak symmetry breaking in Dr Peter Woit's 21 March 2004 'Not Even Wrong' blog posting The Holy Grail of Physics:

'An idea I’ve always found appealing is that this spontaneous gauge symmetry breaking is somehow related to the other mysterious aspect of electroweak gauge symmetry: its chiral nature. SU(2) gauge fields couple only to left-handed spinors, not right-handed ones. In the standard view of the symmetries of nature, this is very weird. The SU(2) gauge symmetry is supposed to be a purely internal symmetry, having nothing to do with space-time symmetries, but left and right-handed spinors are distinguished purely by their behavior under a space-time symmetry, Lorentz symmetry. So SU(2) gauge symmetry is not only spontaneously broken, but also somehow knows about the subtle spin geometry of space-time. Surely there’s a connection here… So, this is my candidate for the Holy Grail of Physics, together with a guess as to which direction to go looking for it.'

As discussed in previous blog posts, e.g. this, the fact that the weak force is left-handed (affects only particles with left-handed spin) arises from the coupling of massive bosons in the vacuum to the weak gauge bosons: this coupling of massive bosons to the weak gauge bosons prevents them from interacting with particles with right-handed spin. The massless versions of the 3 SU(2) gauge bosons don't get this spinor discrimination because they don't couple with massive vacuum bosons, so the 3 massless SU(2) gauge bosons (which give us electromagnetism and gravity) are not limited to interacting with just one handedness of particles in the universe, but equally affect left- and right-handed particles. Further research on this topic is underway. The 'photon' of U(1) is mixed via the Weinberg mixing angle in the Standard Model with the electrically neutral gauge boson of SU(2), and in any case it doesn't describe electromagnetism without unphysically postulating that positrons are electrons 'going backwards in time'. However, this kind of objection is an issue you will get with new theories due to problems in the bedrock assumptions of the subject, so such issues should not be used as an excuse to censor the new idea out. In this case the problem is resolved either by Feynman's speculative time argument (speculative because there is no evidence that positive charges are negative charges going back in time!) or, as suggested on this blog, by dumping U(1) symmetry for electrodynamics and adopting instead SU(2) for electrodynamics without the Higgs field. SU(2) then allows two charges, positive and negative, without one going backwards in time, and three massless gauge bosons, so it can incorporate gravitation with electrodynamics. Evidence from electromagnetism:

‘I am a physicist and throughout my career have been involved with issues in the reliability of digital hardware and software. In the late 1970s I was working with CAM Consultants on the reliability of fast computer hardware. At that time we realised that interference problems – generally known as electromagnetic compatibility (emc) – were very poorly understood.’

– Dr David S. Walton. In 1976, with Catt and Malcolm Davidson, he co-discovered that the charging and discharging of capacitors can be treated as the charging and discharging of open-ended power transmission lines. This discovery has a major but neglected implication for the interpretation of Maxwell's classical electromagnetism equations in quantum field theory. Energy flows into a capacitor or transmission line at light velocity and is then trapped in it with no way to slow down (the magnetic fields cancel out when the energy is trapped). So charged fields propagating at the velocity of light constitute the observable nature of apparently 'static' charge. Therefore the electromagnetic gauge bosons of electric force fields are not neutral but carry net positive and negative electric charges. Electronics World, July 1995, page 594.

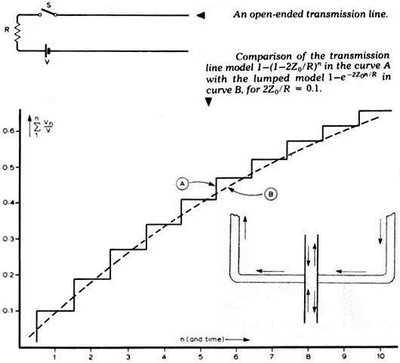

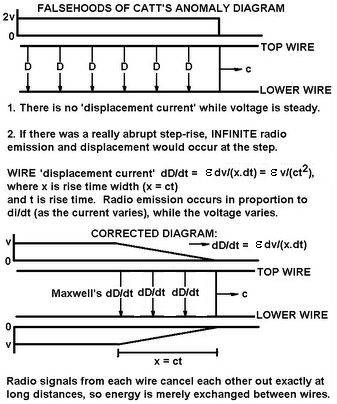

Above: the Catt-Davidson-Walton theory showed that a transmission-line section acting as a capacitor could be modelled by Heaviside's theory of a light-velocity logic pulse. The capacitor charges up in many small steps. The voltage step flows in, bounces off the open circuit at the far end of the capacitor, then reflects and adds to further incoming energy current. Maxwell's classical theory approximates these steps and gives the exponential curve. Unfortunately, Heaviside's mathematical theory is an over-simplification. It is wrong physically, although for most purposes it gives approximately valid results numerically. The problem is that it assumes the front of a logic step is a discontinuous or abrupt rise instead of a gradual one (Heaviside signalled using Morse code in 1875 in the undersea cable between Newcastle and Denmark). We know this is wrong because, at the front of a logic step, the gradual rise in electric field strength with distance is what accelerates conduction electrons up to drift velocity, superimposed on their normal randomly directed thermal motion.

Above: Catt inherits some of the errors in Heaviside's theory, both in his theoretical work and in his so-called "Catt Anomaly" or "Catt Question". If you look logically at Catt's original anomaly diagram (based on Heaviside's theory), you can see that no electric current can occur. Electric current is the drift of electrons, and that drift is caused by the change of voltage with distance along a conductor. For example, if one conductor is uniformly charged to 5 volts with respect to another, no electric current flows, because there is simply no voltage gradient to drive one. If you want an electric current, connect one end of a conductor to, say, 5 volts and the other end to a different potential, say 0 volts. Then there is a gradient of 5 volts along the length of the conductor, which accelerates electrons up to the drift velocity set by the resistance. If you connect both ends of a conductor to the same 5 volt potential, there is no voltage gradient along the conductor, so there is no net electromotive force on the electrons. The vertical front in Catt's original Heaviside-based depiction of the "Catt Anomaly" doesn't accelerate electrons in the way that we need. It shows an instantaneous rise in volts, not a gradient with distance.
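The voltage-gradient argument can be checked with a few lines of Python. This is a minimal sketch, assuming a hypothetical 1 m conductor sampled every 10 cm, with the field computed as $E = -dV/dx$ by finite differences. It compares three voltage profiles:

- equal end voltages, which give zero field everywhere;
- a 5 V to 0 V ramp, which gives a uniform field;
- an abrupt step, where the whole field is concentrated in a single interval.

```python
def electric_field_profile(voltages, dx):
    """Finite-difference field E = -dV/dx (V/m) between adjacent voltage samples."""
    return [(voltages[i] - voltages[i + 1]) / dx for i in range(len(voltages) - 1)]


dx = 0.1  # hypothetical sample spacing along a 1 m conductor (m)

both_ends_5v = [5.0] * 11                            # same potential at every point
ramp_5v_to_0v = [5.0 - 0.5 * i for i in range(11)]   # linear fall from 5 V to 0 V
abrupt_step = [5.0] * 5 + [0.0] * 6                  # vertical front between samples 4 and 5

for name, profile in [("equal ends", both_ends_5v),
                      ("ramp", ramp_5v_to_0v),
                      ("abrupt step", abrupt_step)]:
    print(name, electric_field_profile(profile, dx))
```

In the step case the whole 5 V drop sits in one interval, so halving `dx` doubles the field there while leaving it zero everywhere else. The ramp's field stays at 5 V/m however finely it is sampled.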

Once you correct some of the Heaviside-Catt errors by including a real (ramping) rise time at the front of the electric current, the physics at once becomes clear and you can see what is actually occurring. The acceleration of electrons in the ramp in each conductor generates a radiated electromagnetic (radio) signal, which propagates transversely to the other conductor. Each conductor radiates an exactly inverted image of the radio signal from the other conductor. So the two superimposed radio signals exactly cancel when measured from a distance that is large compared to the separation of the two conductors. This is perfect interference, and it prevents any escape of radio-wave energy in this mechanism. The radio-wave energy is simply exchanged between the ramps of the logic signals in the two conductors of the transmission line. This is the mechanism for electric current flow at light velocity via power transmission lines. What Maxwell attributed to ‘displacement current’ of virtual charges in a mechanical vacuum is actually just exchange of radiation!
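The cancellation argument corresponds to the textbook two-element array factor. The sketch below rests on idealizations that are not in the original:

- the two ramps act as equal point sources radiating in antiphase;
- the observer is in the far field;
- the sources are a distance $s$ apart and radiate at wavelength $\lambda$.

The residual amplitude relative to one source alone is $|1 - e^{iks\cos\theta}| = 2|\sin(\tfrac{1}{2}ks\cos\theta)|$, where $\theta$ is measured from the line joining the conductors and $k = 2\pi/\lambda$. The residual is zero broadside, and it is small in every direction when $s \ll \lambda$.

```python
import math


def antiphase_residual(spacing_over_wavelength, theta_rad):
    """Far-field amplitude of two equal, opposite-phase point sources,
    relative to one source alone: 2 * |sin(k * s * cos(theta) / 2)|.

    theta_rad is measured from the line joining the two sources.
    """
    ks = 2.0 * math.pi * spacing_over_wavelength  # k * s, with k = 2 * pi / wavelength
    return abs(2.0 * math.sin(0.5 * ks * math.cos(theta_rad)))


for s_over_lambda in (0.1, 0.01, 0.001):
    worst = antiphase_residual(s_over_lambda, 0.0)            # along the line joining them
    broadside = antiphase_residual(s_over_lambda, math.pi / 2)
    print(f"s/lambda = {s_over_lambda}: worst {worst:.4f}, broadside {broadside:.1e}")
```

On this model the worst-case residual scales roughly as $2\pi s/\lambda$, so closely spaced conductors leak very little radiation.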

There are therefore three related radiations flowing in electricity:

- Surrounding one conductor, positively charged massless electromagnetic gauge bosons flow parallel to the conductor at light velocity, producing the positive electric field around that conductor.
- Around the other conductor, negatively charged massless gauge bosons flow in the same direction, again parallel to the conductor.
- Between the two conductors, the accelerating electrons exchange normal radio waves, which flow perpendicular to the conductors.

These radio waves play the role mathematically represented by Maxwell's 'displacement current' term. That term enables continuity of electric current in open circuits, of two kinds. One is circuits containing capacitors with a vacuum dielectric that stops real electric current flowing. The other is long open-ended transmission lines, which allow electric current to flow while charging up despite not being a completed circuit.
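Standard transmission-line reflection arithmetic reproduces the stepwise charging described in the Catt-Davidson-Walton caption above. This is a minimal sketch under assumptions not stated in the original:

- an ideal step source $V_0$ drives the line through a series resistance $R$;
- the line is lossless and open-ended, with characteristic impedance $Z_0$ and one-way delay $T$;
- the line's total capacitance is $C = T/Z_0$ (the usual result).

With source reflection coefficient $\Gamma_s = (R - Z_0)/(R + Z_0)$, the far-end voltage after the $k$-th arriving step is $V_0(1 - \Gamma_s^k)$. For $R \gg Z_0$ this staircase tracks Maxwell's exponential $V_0(1 - e^{-t/RC})$.

```python
import math


def staircase_charge(v0, r_source, z0, n_arrivals):
    """Open-end voltage of a lossless line after each of the first n_arrivals steps."""
    gamma_s = (r_source - z0) / (r_source + z0)  # reflection coefficient at the source end
    # Summing launched and re-reflected steps gives a geometric series with this closed form.
    return [v0 * (1.0 - gamma_s ** k) for k in range(1, n_arrivals + 1)]


def rc_charge(v0, r_source, z0, delay, t):
    """Exponential charging of the same line treated as a lumped capacitor."""
    capacitance = delay / z0  # total line capacitance C = T / Z0
    return v0 * (1.0 - math.exp(-t / (r_source * capacitance)))


v0, r_source, z0, delay = 1.0, 100.0, 1.0, 1.0  # hypothetical values, R = 100 * Z0
steps = staircase_charge(v0, r_source, z0, 200)
for k in (1, 10, 50, 200):
    # The k-th step holds from t = (2k - 1)T to (2k + 1)T; compare at its midpoint 2kT.
    print(k, round(steps[k - 1], 5), round(rc_charge(v0, r_source, z0, delay, 2 * k * delay), 5))
```

Choosing the midpoint of each step for the comparison keeps the staircase centred on the exponential. Making $R$ smaller relative to $Z_0$ gives fewer, larger steps and a visibly worse fit.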

Commenting on the mainstream focus upon string theory, Dr Woit states (http://arxiv.org/abs/hep-th/0206135 page 52):

'It is a striking fact that there is absolutely no evidence whatsoever for this complex and unattractive conjectural theory. There is not even a serious proposal for what the dynamics of the fundamental “M-theory” is supposed to be or any reason at all to believe that its dynamics would produce a vacuum state with the desired properties. The sole argument generally given to justify this picture of the world is that perturbative string theories have a massless spin two mode and thus could provide an explanation of gravity, if one ever managed to find an underlying theory for which perturbative string theory is the perturbation expansion. This whole situation is reminiscent of what happened in particle theory during the 1960’s, when quantum field theory was largely abandoned in favor of what was a precursor of string theory. The discovery of asymptotic freedom in 1973 brought an end to that version of the string enterprise and it seems likely that history will repeat itself when sooner or later some way will be found to understand the gravitational degrees of freedom within quantum field theory. While the difficulties one runs into in trying to quantize gravity in the standard way are well-known, there is certainly nothing like a no-go theorem indicating that it is impossible to find a quantum field theory that has a sensible short distance limit and whose effective action for the metric degrees of freedom is dominated by the Einstein action in the low energy limit. Since the advent of string theory, there has been relatively little work on this problem, partly because it is unclear what the use would be of a consistent quantum field theory of gravity that treats the gravitational degrees of freedom in a completely independent way from the standard model degrees of freedom. One motivation for the ideas discussed here is that they may show how to think of the standard model gauge symmetries and the geometry of space-time within one geometrical framework.'

That last sentence expresses the key idea: gravity should be part of the gauge symmetries of the universe, not left out as it is in the mainstream 'standard model', U(1) x SU(2) x SU(3).

How the pressure mechanism of quantum gravity reproduces the contraction in general relativity

As long ago as 1949 a Dirac sea was shown to mimic the relativity contraction and mass-energy:

‘It is shown that when a Burgers screw dislocation [in a crystal] moves with velocity $v$ it suffers a longitudinal contraction by the factor $(1 - v^2/c^2)^{1/2}$, where $c$ is the velocity of transverse sound. The total energy of the moving dislocation is given by the formula $E = E_0/(1 - v^2/c^2)^{1/2}$, where $E_0$ is the potential energy of the dislocation at rest.’ - F. C. Frank, ‘On the equations of motion of crystal dislocations’, Proceedings of the Physical Society of London, vol. A62 (1949), pp. 131-4.
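Frank's two factors are easy to evaluate numerically. Here is a minimal sketch (the function name is illustrative), with $v$ expressed as a fraction of the transverse sound speed $c$:

```python
import math


def dislocation_factors(v_over_c):
    """Length contraction L/L0 and energy ratio E/E0 for a screw dislocation moving at v."""
    contraction = math.sqrt(1.0 - v_over_c ** 2)  # (1 - v^2/c^2)^(1/2)
    energy_ratio = 1.0 / contraction               # E / E0 = 1 / (1 - v^2/c^2)^(1/2)
    return contraction, energy_ratio


for v_over_c in (0.1, 0.6, 0.9):
    print(v_over_c, dislocation_factors(v_over_c))
```

At $v = 0.6c$ the dislocation contracts to 0.8 of its rest length and its energy rises to $1.25E_0$. Special relativity gives the same numbers for a particle moving at 0.6 times the speed of light.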

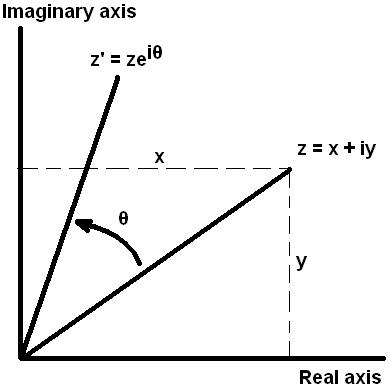

Feynman explained that the contraction of space around a static mass $M$ due to curvature in general relativity is a reduction in radius by $(1/3)GM/c^2$, which is 1.5 mm for the Earth. You don’t need the tensor machinery of general relativity to get such simple results in the low-energy (classical) limit. (Baez and Bunn similarly have a derivation of Newton’s law from general relativity that doesn’t use tensor analysis: see http://math.ucr.edu/home/baez/einstein/node6a.html.) We can do it just using the equivalence principle of general relativity plus some physical insight:

By Newton’s law, the velocity needed to escape from the gravitational field of a mass $M$ (ignoring atmospheric drag), starting at distance $x$ from the centre of the mass, is $v = (2GM/x)^{1/2}$, so $v^2 = 2GM/x$. The situation is symmetrical. Ignoring atmospheric drag, the speed at which a ball falls back and hits you is equal to the speed with which you threw it upwards. This is just a simple result of the conservation of energy! Consider a mass at radius $x$ from the centre of mass $M$. Its gravitational potential energy is equivalent to the energy gained by an object falling to that distance from infinity. By the conservation of energy, this gravitational potential energy equals the kinetic energy of a mass travelling at escape velocity $v$.
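Here is a quick numerical check of the escape-velocity relation and the energy bookkeeping. The sketch uses rounded standard reference values for $G$ and for the Earth's mass and mean radius; these figures are assumptions, not taken from the original:

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_EARTH = 5.972e24   # kg
R_EARTH = 6.371e6    # mean radius, m


def escape_velocity(mass_kg, x_m):
    """Newtonian escape velocity v = (2GM/x)^(1/2) from distance x_m."""
    return math.sqrt(2.0 * G * mass_kg / x_m)


v = escape_velocity(M_EARTH, R_EARTH)
m = 1.0                                  # any test mass, kg
kinetic = 0.5 * m * v ** 2               # kinetic energy at escape velocity
potential = G * M_EARTH * m / R_EARTH    # magnitude of gravitational potential energy
print(f"v = {v / 1e3:.1f} km/s, KE = {kinetic:.4e} J, |PE| = {potential:.4e} J")
```

The two energies agree to rounding error, which is the conservation-of-energy statement above. The escape velocity from the Earth's surface comes out at about 11.2 km/s.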

By Einstein’s principle of equivalence between inertial and gravitational mass, the effects of the gravitational acceleration field are identical to other accelerations, such as those produced by rockets and elevators. Therefore, we can place the square of the escape velocity ($v^2 = 2GM/x$) into the FitzGerald-Lorentz contraction, giving $\gamma = (1 - v^2/c^2)^{1/2} = [1 - 2GM/(xc^2)]^{1/2}$.

However, there is an important difference between this gravitational transformation and the usual FitzGerald-Lorentz transformation. With velocity, length is contracted in only one dimension. With spherically symmetric gravity, length is contracted equally in 3 dimensions (in other words, radially outward in 3 dimensions, not sideways between radial lines!). Using the binomial expansion to the first two terms of each:

FitzGerald-Lorentz contraction effect: $\gamma = x/x_0 = t/t_0 = m_0/m = (1 - v^2/c^2)^{1/2} = 1 - \tfrac{1}{2}v^2/c^2 + \dots$

Gravitational contraction effect: $\gamma = x/x_0 = t/t_0 = m_0/m = [1 - 2GM/(xc^2)]^{1/2} = 1 - GM/(xc^2) + \dots$,

where for radial symmetry ($x = y = z = r$) the contraction is spread over three perpendicular dimensions, not just one as is the case for the FitzGerald-Lorentz contraction: $x/x_0 + y/y_0 + z/z_0 = 3r/r_0$. Hence, with the first-order contraction $GM/(rc^2)$ shared equally among the three dimensions, the relative radial contraction of space around a mass is $r/r_0 = 1 - GM/(3rc^2)$.

Therefore, clocks slow down not only when moving at high velocity but also in gravitational fields, and distance contracts in all directions toward the centre of a static mass. The variation in mass with location within a gravitational field shown in the equation above is due to variations in gravitational potential energy. Space is contracted radially around mass $M$ by the distance $(1/3)GM/c^2$.
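The two binomial expansions and the clock-slowing claim can be checked numerically. This sketch does two things:

- It compares the exact square roots with their first-order truncations. The neglected terms are of order $v^4/c^4$ and $(GM/xc^2)^2$ respectively.
- It evaluates the first-order slowing $GM/(xc^2)$ at the Earth's surface. This uses rounded reference constants (assumptions, not figures from the original) and ignores the Earth's rotation.

```python
import math

G = 6.674e-11        # m^3 kg^-1 s^-2
C = 2.998e8          # m/s
M_EARTH = 5.972e24   # kg
R_EARTH = 6.371e6    # m


def lorentz_factor(beta):
    """Exact and first-order FitzGerald-Lorentz factor for beta = v/c."""
    return math.sqrt(1.0 - beta ** 2), 1.0 - 0.5 * beta ** 2


def gravity_factor(phi):
    """Exact and first-order gravitational factor for phi = GM/(x c^2)."""
    return math.sqrt(1.0 - 2.0 * phi), 1.0 - phi


exact_v, approx_v = lorentz_factor(0.1)
exact_g, approx_g = gravity_factor(0.01)
print("velocity: truncation error", approx_v - exact_v)   # close to beta**4 / 8
print("gravity:  truncation error", approx_g - exact_g)   # close to phi**2 / 2

phi_earth = G * M_EARTH / (R_EARTH * C ** 2)
lag_per_day = phi_earth * 86400.0   # seconds a surface clock loses per day vs a distant clock
print(f"phi = {phi_earth:.3e}, lag = {lag_per_day * 1e6:.1f} microseconds/day")
```

At the Earth's surface $GM/(xc^2) \approx 7 \times 10^{-10}$, so the neglected second-order term is of order $10^{-19}$ and the first-order expressions are essentially exact. The resulting slowing is about 60 microseconds per day.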

It is not contracted in the transverse direction, i.e. along the circumference of the Earth (the direction at right angles to the radial lines which originate from the centre of mass). This is the physical explanation in quantum gravity of so-called curved spacetime. Graviton exchange compresses masses radially but leaves them unaffected transversely, so the radius is reduced while the circumference is unaffected. If we used Euclidean 3-dimensional geometry, the value of Pi (circumference/diameter of a circle) would therefore be altered for a massive circular object! General relativity's spacetime is a system for keeping Pi from changing by simply invoking an extra dimension. By treating time as a spatial dimension, Euclidean 3-dimensional space can be treated as a surface in 4-dimensional spacetime, with the curvature ensuring that Pi is never altered! Spacetime curves due to the extra dimension instead of Pi altering. However, this is a speculative explanation and there is no proof that contraction effects are really due to this curvature. For example, Lunsford published a unification of electrodynamics and general relativity which uses 6 dimensions, including 3 time-like dimensions. This gives a perfect symmetry between space and time, with each spatial dimension having a corresponding time dimension. This makes sense when you measure time since the big bang by means of $t = 1/H$, where $H = v/x$ is the Hubble parameter. Because there are 3 spatial dimensions, the expansion rate measured in each of them will give you 3 separate ages of the universe, i.e. 3 time dimensions (unless the expansion is isotropic, when all three times are indistinguishable, as appears to be the case!).
(As with a paper of mine, Lunsford's paper was censored off arXiv after being published in a peer-reviewed physics journal under the title “Gravitation and Electrodynamics over SO(3,3)”, International Journal of Theoretical Physics, vol. 43, no. 1 (January 2004), pp. 161-177. It was censored because it disagrees with the number of speculative unobserved dimensions in arXiv-endorsed mainstream string theory sh*t: it can be downloaded here. 'String theorists' claim that there is 'no alternative' to their brilliant sh*t landscape of $10^{500}$ metastable vacua, arising from all the combinations of 6 unobservable, compactified Calabi-Yau extra spatial dimensions in 10-dimensional string theory. When they do, tell them and their stupid arXiv censors to go and f*ck off with their extra spatial dimensions insanity.)
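Both the radius-versus-circumference argument and the $t = 1/H$ estimate can be put into numbers. This is a minimal sketch, assuming, as the text describes, that the radius shrinks by $(1/3)GM/c^2$ while the circumference keeps its uncontracted value $2\pi r_0$. The constants are rounded reference values, and the three per-axis Hubble parameters are hypothetical inputs, not measurements.

```python
import math

G = 6.674e-11                 # m^3 kg^-1 s^-2
C = 2.998e8                   # m/s
M_EARTH = 5.972e24            # kg
R_EARTH = 6.371e6             # m
METRES_PER_MPC = 3.0857e22
SECONDS_PER_YEAR = 3.15576e7  # Julian year


def radial_shrink(mass_kg):
    """Radial contraction (1/3) G M / c^2, in metres."""
    return G * mass_kg / (3.0 * C ** 2)


def apparent_pi(mass_kg, r0_m):
    """Circumference / diameter if only the radius contracts."""
    return (2.0 * math.pi * r0_m) / (2.0 * (r0_m - radial_shrink(mass_kg)))


def hubble_age_years(h_km_s_per_mpc):
    """Age estimate t = 1/H, in years."""
    h_per_second = h_km_s_per_mpc * 1e3 / METRES_PER_MPC
    return 1.0 / h_per_second / SECONDS_PER_YEAR


print(f"radial shrink: {radial_shrink(M_EARTH) * 1e3:.2f} mm")   # prints 1.48 mm
print(f"apparent pi - pi: {apparent_pi(M_EARTH, R_EARTH) - math.pi:.2e}")
for h in (70.0, 70.0, 70.0):  # hypothetical H measured along x, y and z
    print(f"t = 1/H = {hubble_age_years(h) / 1e9:.2f} Gyr")
```

The 1.48 mm figure matches the 1.5 mm quoted from Feynman. For the Earth, the fractional change in Pi is about $2 \times 10^{-10}$. Isotropic expansion gives three identical ages, as the text notes.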